your blocks, likes, lists, and just about everything except chats are PUBLIC

you can pin custom feeds; i like quiet posters, best of follows, mutuals, mentions

if your chronological feed is overwhelming, you can make and pin a personal list of "unmissable" people

Because they’re putting spyware on all our computers at the moment. Which seems like fucking great timing.

Mamdani: I've spoken about --

Trump: Just say yes, it's easier than explaining.

For Those Who May Find Themselves on the Red Team: tylershoemaker.info/docs/shoemak...

Hope it's helpful to people exploring and preparing for the process.

Feedback is welcome!

www.dropbox.com/scl/fi/p7xdt...

jobs.wisc.edu/jobs/assista...

📅 Ddl 12/22/25

🔬 Accessibility & Learning, plus Sustainability & Social Justice

🧑🏫 Associate/Full Prof*

🔗 umd.wd1.myworkdayjobs.com/en-US/UMCP/j...

*Assistant-level candidates: apply to departments, mentioning AIM in a cover letter

The official paper link:

www.pnas.org/doi/10.1073/...

Apply by *December 15* for full consideration.

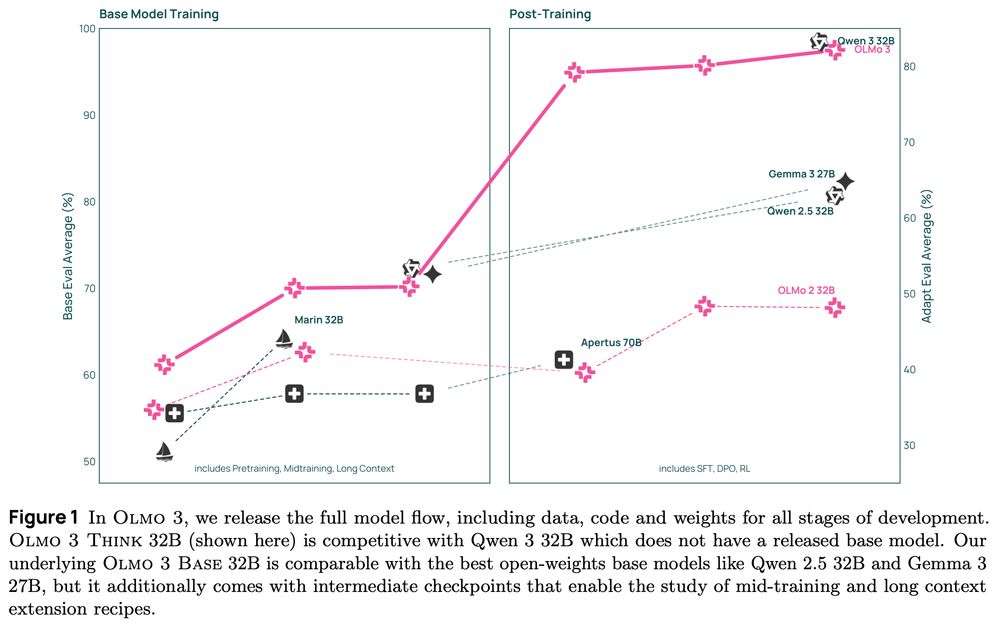

🐟Olmo 3 32B Base, the best fully-open base model to date, near Qwen 2.5 & Gemma 3 on diverse evals

🐠Olmo 3 32B Think, first fully-open reasoning model approaching Qwen 3 levels

🐡12 training datasets corresponding to the different training stages

If you’re into NLP, transformers, or real-world text messiness, the full case study is here: buthonestly.io/programming/...

#nlp #machinelearning #sentimentanalysis #emotionanalysis

This family of 7B and 32B models represents:

1. The best 32B base model.

2. The best 7B Western thinking & instruct models.

3. The first 32B (or larger) fully open reasoning model.

And I am a tenured professor at Harvard! How much more protected can I be?

Imagine how STUDENTS feel. Junior faculty. This quote nails it

We’ve redesigned the “who can reply” settings to make them clearer and easier to use. You can also now save your choices as the default for future posts, giving you easier control over the conversations you start.

www.youtube.com/watch?v=B9hG...

97 papers, ~1600 pages of computational humanities 🔥 Now published via the new Anthology of Computers and the Humanities, with DOIs for every paper.

🔗 anthology.ach.org/volumes/vol0...

And don’t forget: registration closes tomorrow (20 Nov)!
