Writes http://interconnects.ai

At Ai2 via HuggingFace, Berkeley, and normal places

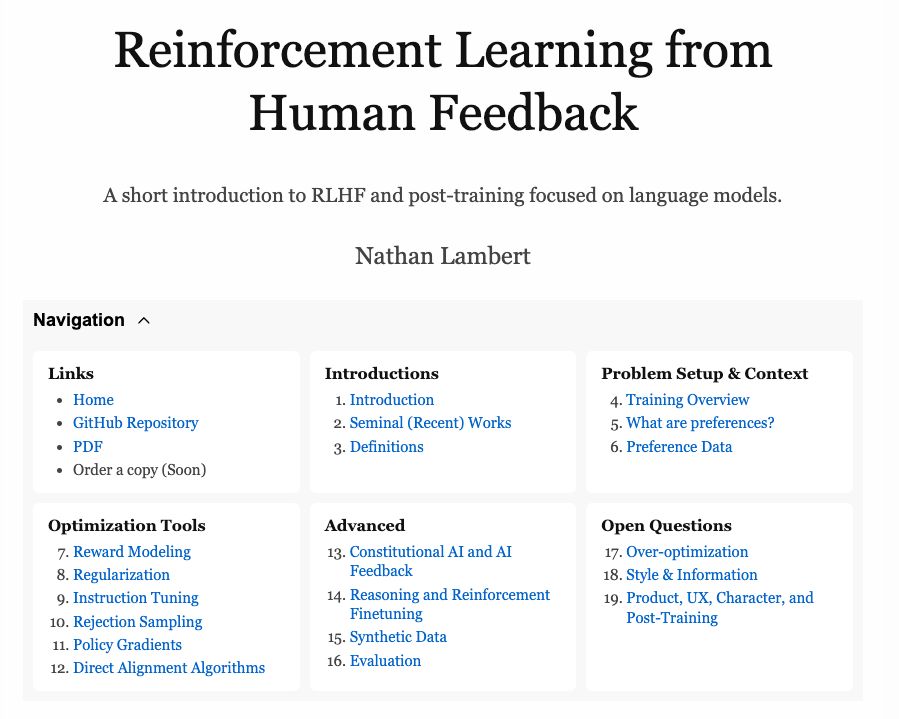

rlhfbook.com

On the DGX Spark, fine-tuning OLMo 2 1B SFT. Built by referencing the original repositories + TRL.

Email me with "CMU Visit" in the subject if you're interested in chatting & why!

On standing out and finding gems.

www.interconnects.ai/p/thoughts-o...

Another level up when those search models get 10x faster.

Right now has REINFORCE, RLOO, PPO, GRPO, Dr. GRPO, GSPO, CISPO, standard RM, PRM, ORM.

github.com/natolambert/...

Anthropic -> Claude's Constitution -> excellent (Claude focused)

OpenAI -> Model Spec -> excellent (developer/team focused)

xAI -> Elon's Tweets -> ...

DeepMind -> ???

Come on Google, need some transparency here.

The tools are getting so powerful that we need to change how we scope, manage, and approach our work.

A.k.a. not getting them to work is a skill issue.

www.interconnects.ai/p/get-good-a...

github.com/natolambert/...

Coming to the RLHF book soon.

These are the first diagrams it generated for me -- zero feedback yet, for the reward model chapter.
