#AI

I mostly tweet about #AI, #robots, #science, and #3dprinting

My thoughts and opinions are my own.

jballoch.com

I'm so thankful for my advisor @markriedl.bsky.social, my committee, my family, my lab, and all those who have supported me through this. Excited for this next chapter!

A couple of refs:

openreview.net/forum?id=YGh...

arxiv.org/abs/2110.02719

arxiv.org/abs/2110.15191

openpyro-a1.github.io

New talk! Forecasting the Alpaca moment for reasoning models and why the new style of RL training is a far bigger deal than the emergence of RLHF.

YouTube: https://buff.ly/41bVRPp

github.com/Emerge-Lab/g...

(h/t klowrey)

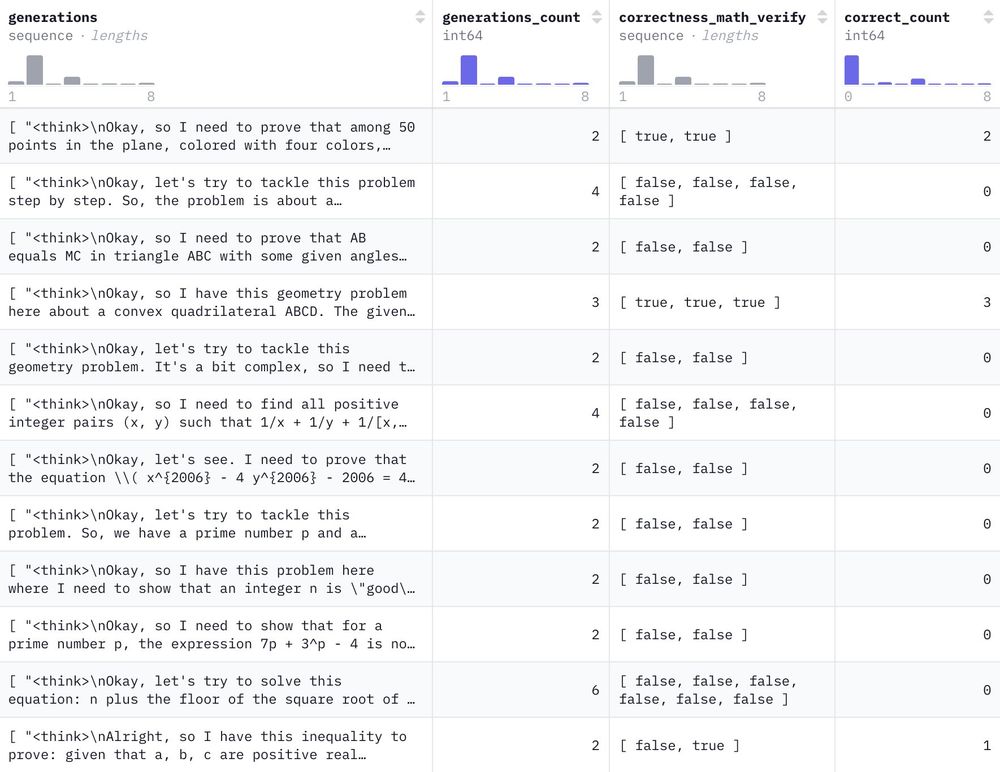

We commandeered the HF cluster for a few days and generated 1.2M reasoning-filled solutions to 500k NuminaMath problems with DeepSeek-R1 🐳

Have fun!

—Winston Churchill

Stay skeptical, my friends

It's called SAM2Act, a multi-view robotic transformer-based policy that integrates a visual foundation model with a memory architecture for robotic manipulation.

Project page: sam2act.github.io

Open code: github.com/sam2act/SAM2...

Not out of the woods: freezes and pulled-back grants are still in the works once they get their act together.

apnews.com/article/dona...