🇳🇴 Oslo 🏴 Edinburgh 🇦🇹 Graz

Here's the pre-viva talk, if anyone's interested. My work was/is about quantifying the distributional distance between real and synthetic speech.

youtu.be/Ii-6buwAoCg

Happy Monday! Here's me thinking about speech tech, voices, and death thanks to the lovely @technomoralfutures.bsky.social

content notes: discussion of death, grief, online abuse

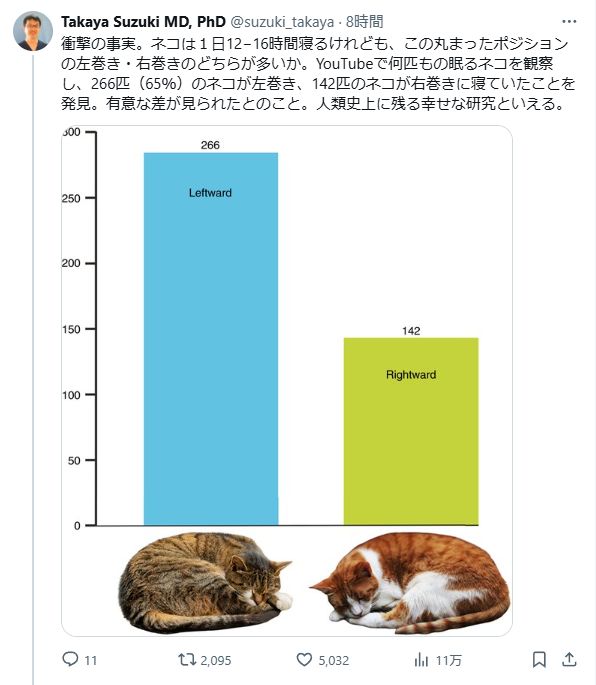

"My p-value is smaller than 0.05, so..."

Wrong answers only.

www.isca-archive.org/interspeech_...

I presented a TTS-for-ASR paper:

www.isca-archive.org/interspeech_...

And one on prosody reps: www.isca-archive.org/interspeech_...

There were many interesting questions & comments; if you have more and didn't get the chance to ask, feel free to send me a message.

App: interspeech.app.link?event=687602...

Paper: www.isca-archive.org/interspeech_...

Check it out here: shreeharsha-bs.github.io/Hear-Me-Out/

1/2

So yeah everyone watch K-Pop Demon Hunters.

I'm not sure how useful this information is, but... it's yours now.

„Sometimes the question is raised as to whether we really want a TTS system to sound like a human at all.“

me: I wonder where this is going

later:

„no matter how good a system is, it will rarely be mistaken for a real person, and we believe this concern can be ignored.“

me: oh no

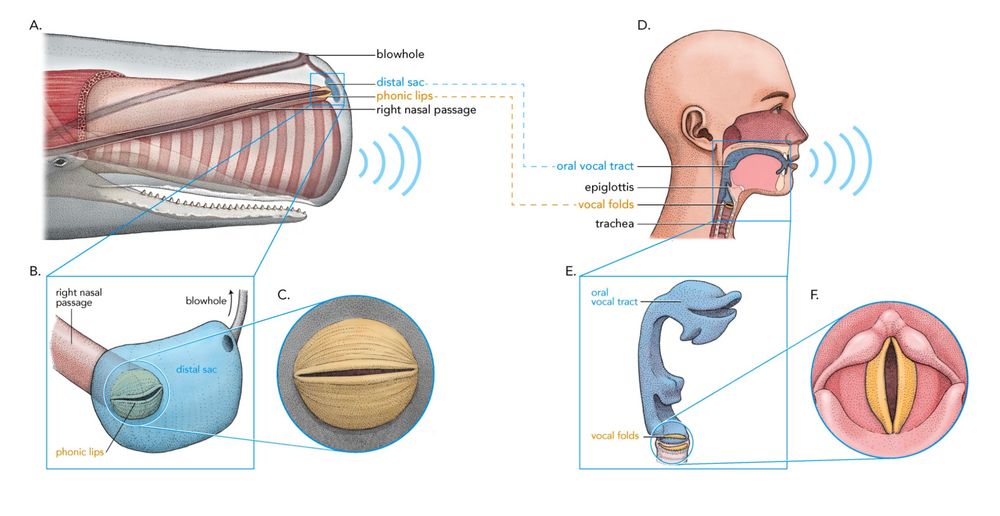

We previously discovered that sperm whales have analogues to human vowels.

In a new preprint, we analyze the linguistic behavior of whale vowels.