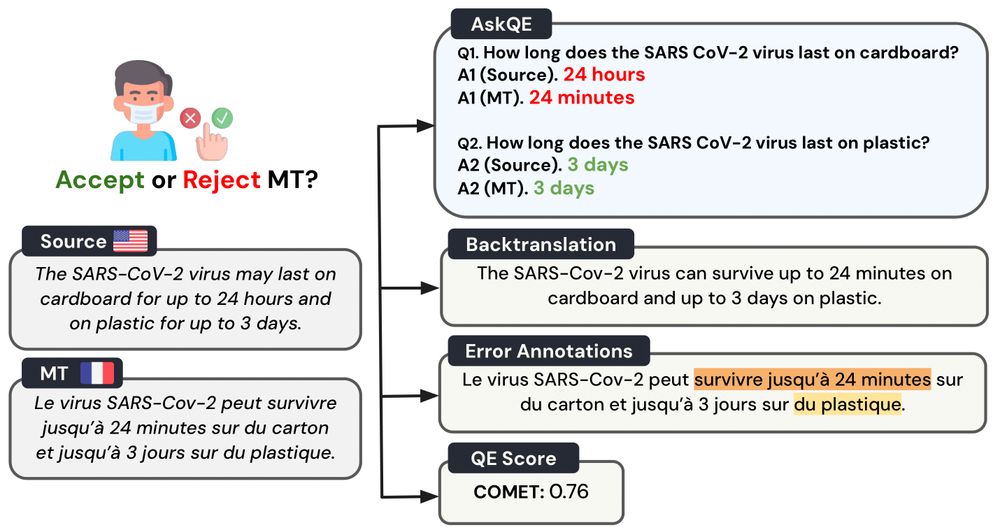

Introducing ❓AskQE❓, an #LLM-based Question Generation + Answering framework that detects critical MT errors and provides actionable feedback 🗣️

#ACL2025

@crystinaz.bsky.social

@oxxoskeets.bsky.social

@dayeonki.bsky.social @onadegibert.bsky.social

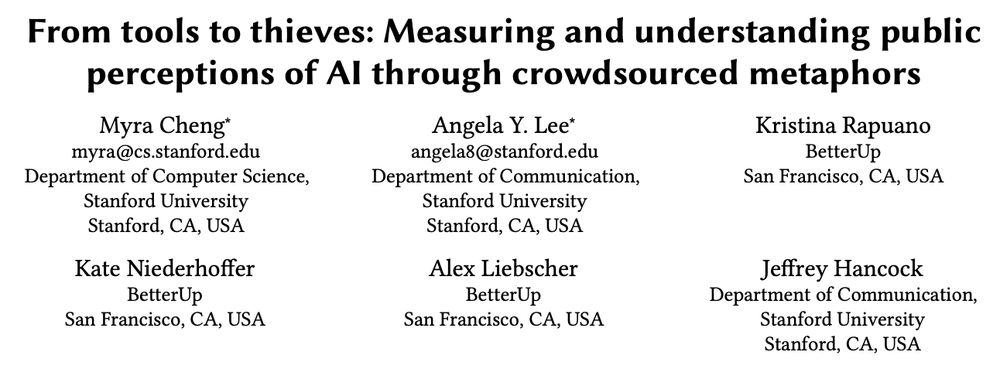

Check out our abstract here: angelhwang.github.io/doc/ic2s2_AI...

Inspired by our past work: arxiv.org/abs/2411.13032

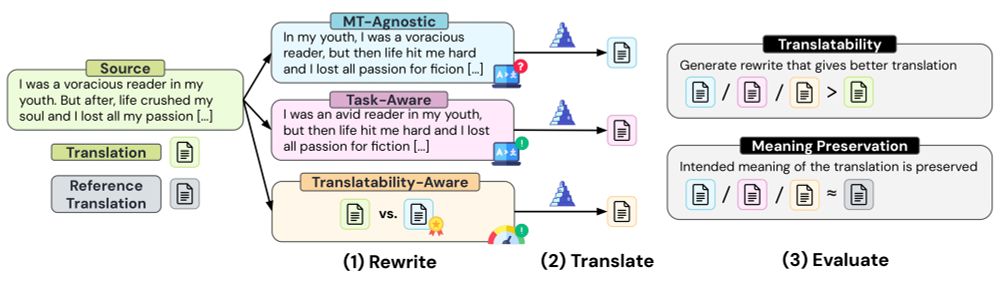

1/ We often assume that well-written text is easier to translate ✏️

But can #LLMs automatically rewrite inputs to improve machine translation? 🌍

Here’s what we found 🧵

We're thrilled to announce the first-ever Tokenization Workshop (TokShop) at #ICML2025 @icmlconf.bsky.social! 🎉

Submissions are open for work on tokenization across all areas of machine learning.

📅 Submission deadline: May 30, 2025

🔗 tokenization-workshop.github.io

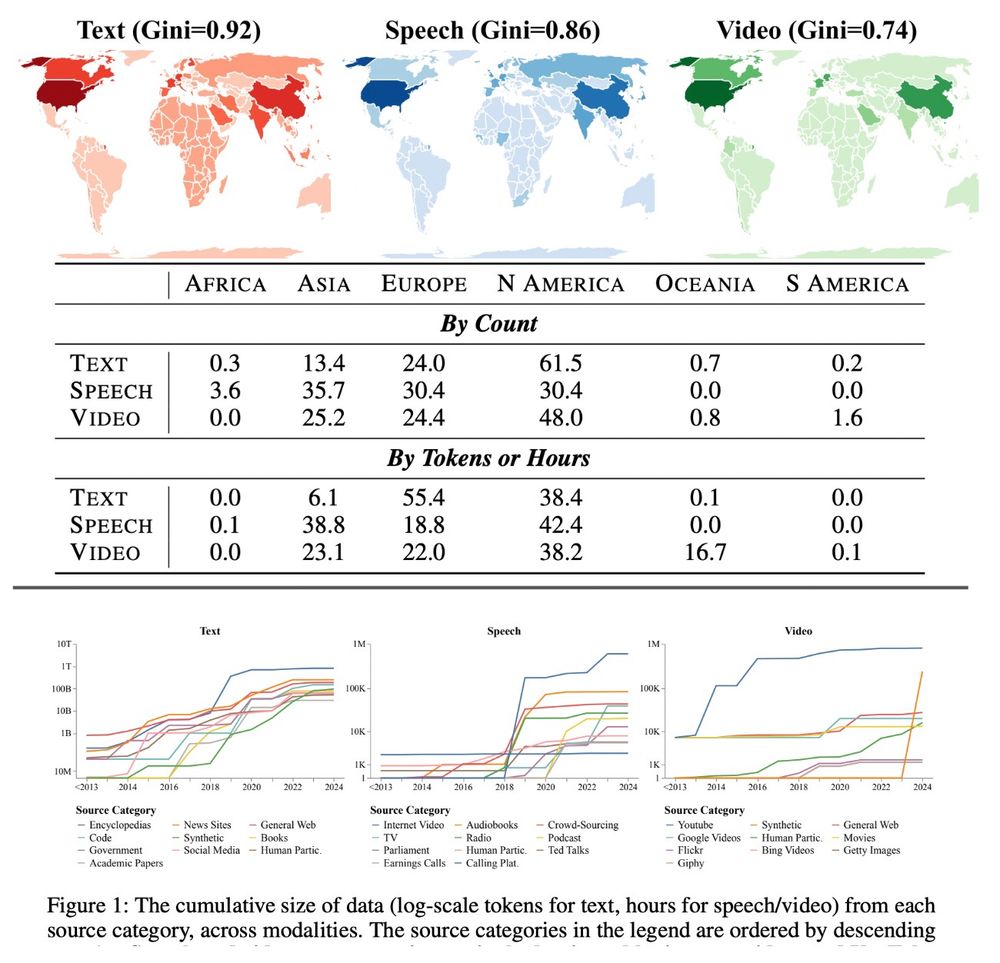

Empirically, it shows:

1️⃣ Soaring synthetic text data: ~10M tokens (pre-2018) to 100B+ (2024).

2️⃣ YouTube is now 70%+ of speech/video data but could block third-party collection.

3️⃣ <0.2% of data from Africa/South America.

1/

And how can span annotation help us with evaluating texts?

Find out in our new paper: llm-span-annotators.github.io

Arxiv: arxiv.org/abs/2504.08697

- Ayodele Awokoya

- Wilker Aziz

- Marta Costa-Jussa

- Barry Haddow

- Amit Moryossef

- Sara Papi

- Jörg Tiedemann

- Marco Turchi

Are we developing better systems or are we just gaming the metrics? And how do we address this?

Super (m)interesting! 👀

✅ Humans achieve 85% accuracy

❌ OpenAI Operator: 24%

❌ Anthropic Computer Use: 14%

❌ Convergence AI Proxy: 13%

Across models and domains, we did not find evidence that LLMs have privileged access to their own predictions. 🧵(1/8)

www2.statmt.org/wmt25/multil...

💰 $1 per question

🏆 Top-3 fastest + most accurate win $50

⏳ Questions take ~3 min => $20/hr+

Click here to sign up (please join, reposts appreciated 🙏): preferences.umiacs.umd.edu

This is work with @tomlim.bsky.social, @jlibovicky.bsky.social, and Alex Fraser.

arxiv.org/abs/2502.06468

#newpaper #NLP #NLProc

"The decline in citation fidelity among senior researchers...[may indicate they] rely more on their established reputations or heuristics, potentially leading to less detailed engagement with individual citations."

Preprint: doi.org/10.48550/arX...

#AcademicSky 🧪

Human alignment balances social expectations, economic incentives, and legal frameworks. What if LLM alignment worked the same way?🤔

Our latest work explores how social, economic, and contractual alignment can address incomplete contracts in LLM alignment🧵
