www.arxiv.org/abs/2502.14010

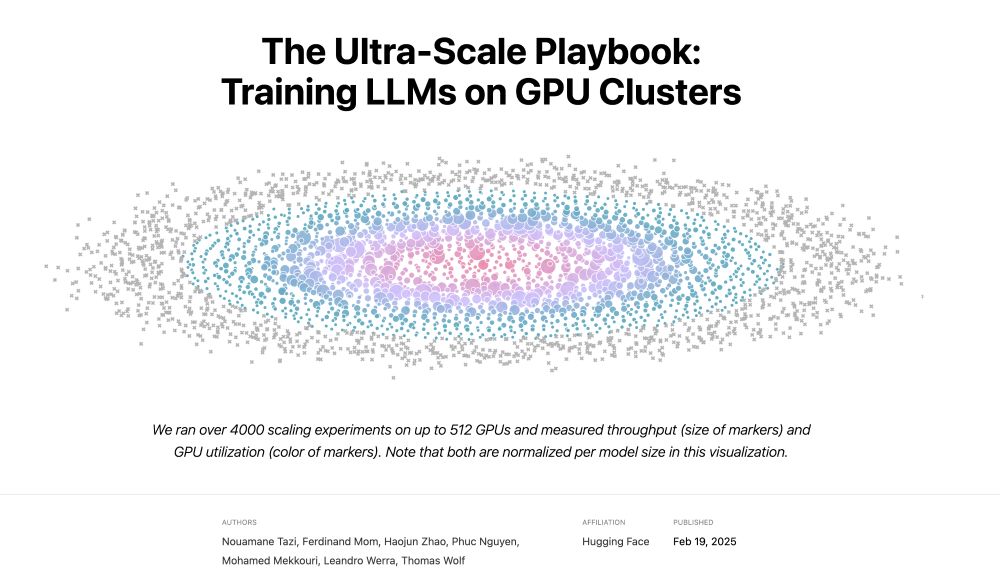

A book to learn all about 5D parallelism, ZeRO, CUDA kernels, and how and why to overlap compute and communication, with theory, motivation, interactive plots, and 4000+ experiments!

It achieves the same performance with 21.5% fewer tokens and better generalization! 🎯

📝: arxiv.org/abs/2502.08524

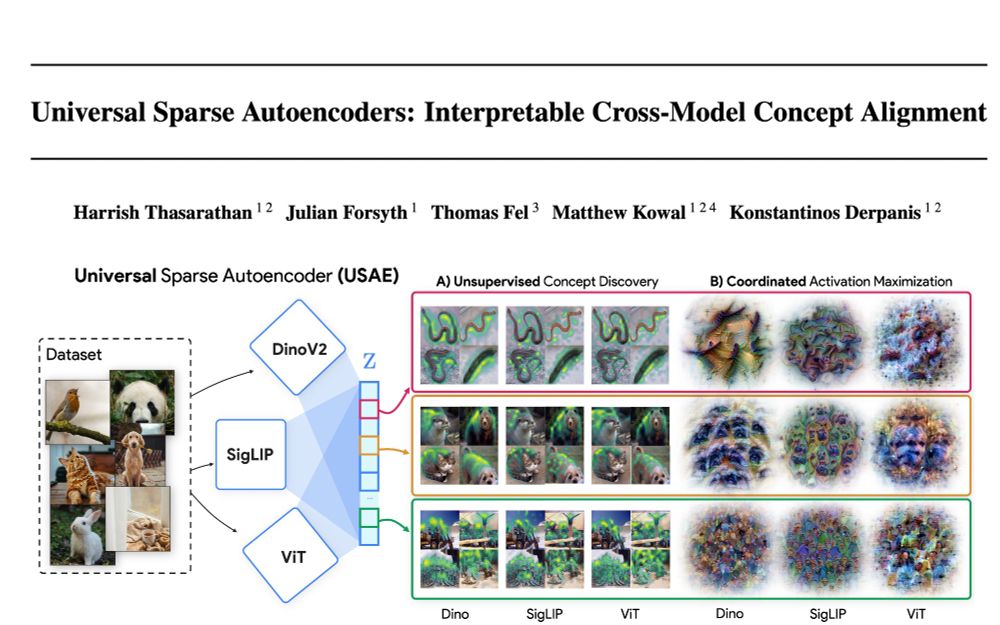

arxiv.org/abs/2502.03714

(1/9)

by @alessiodevoto.bsky.social @sgiagu.bsky.social et al.

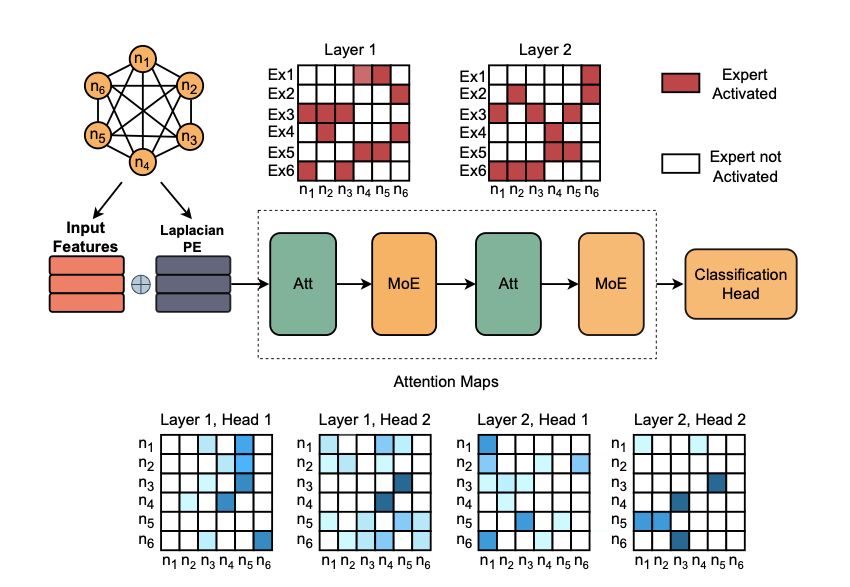

We propose a MoE graph transformer for particle collision analysis, with several interpretability insights (e.g., expert specialization).

arxiv.org/abs/2501.03432