Come see our poster at 4pm on Tuesday in East Exhibition Hall A-B, E-1208!

My first major conference paper with my wonderful collaborators and friends @matthewkowal.bsky.social @thomasfel.bsky.social

@Julian_Forsyth

@csprofkgd.bsky.social

Working with y'all is the best 🥹

Preprint ⬇️!!

arxiv.org/abs/2502.03714

(1/9)

HUGE shoutout to Harry (1st PhD paper, in 1st year), Julian (1st ever, done as an undergrad), Thomas and Matt!

@hthasarathan.bsky.social @thomasfel.bsky.social @matthewkowal.bsky.social

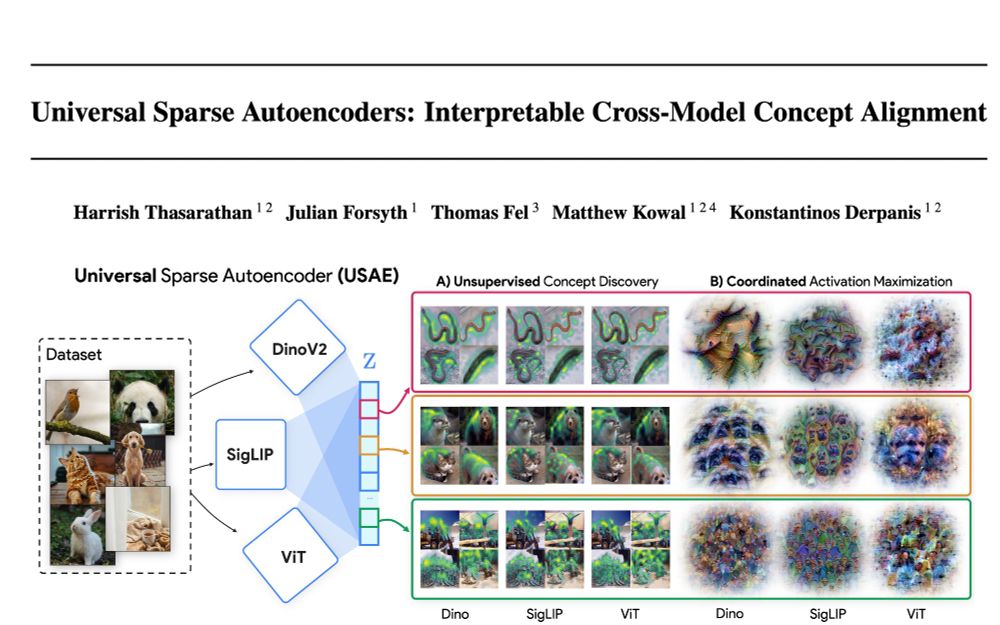

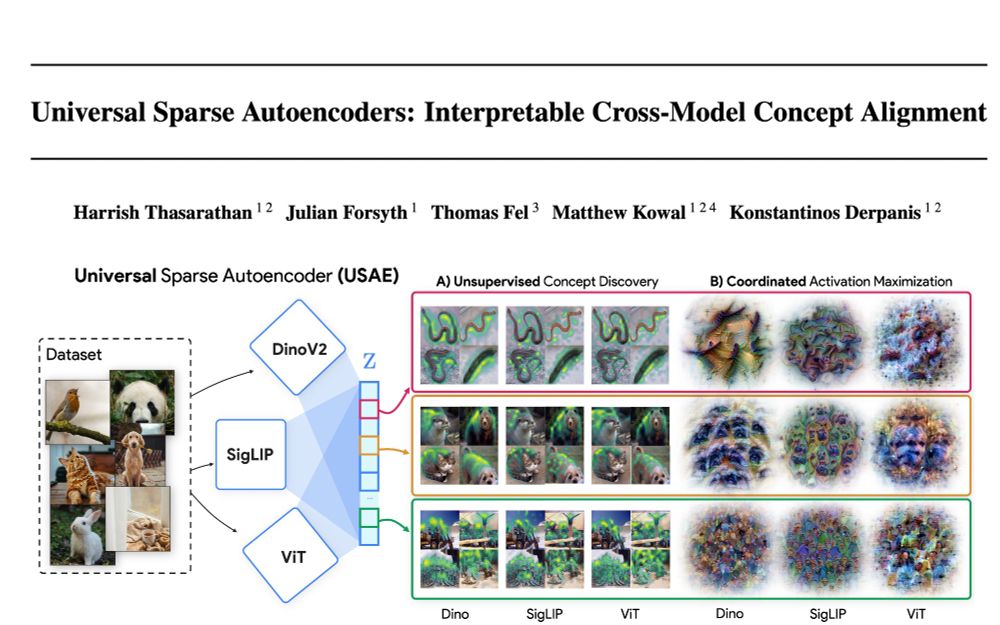

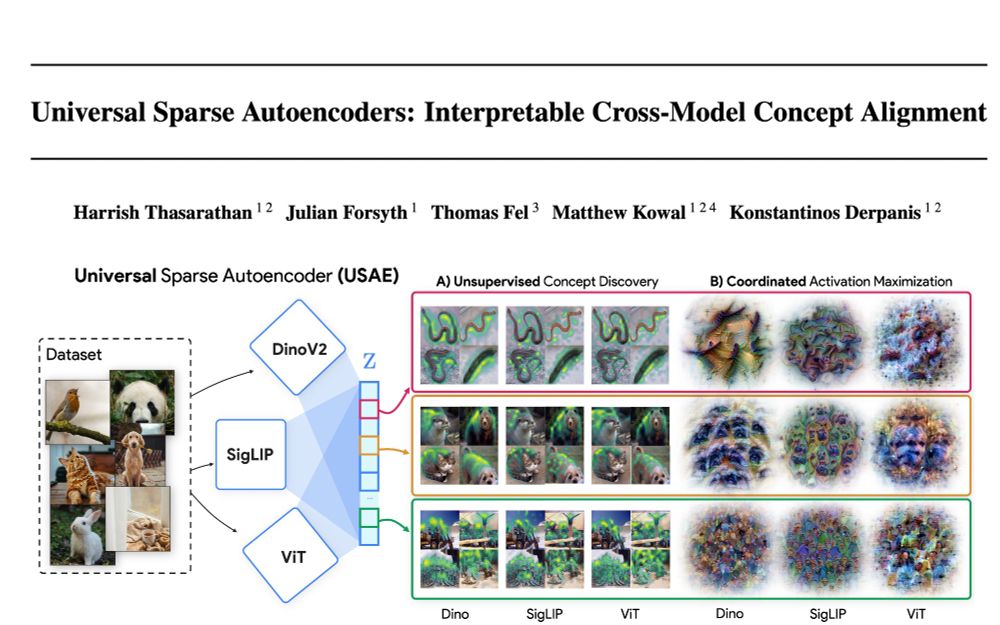

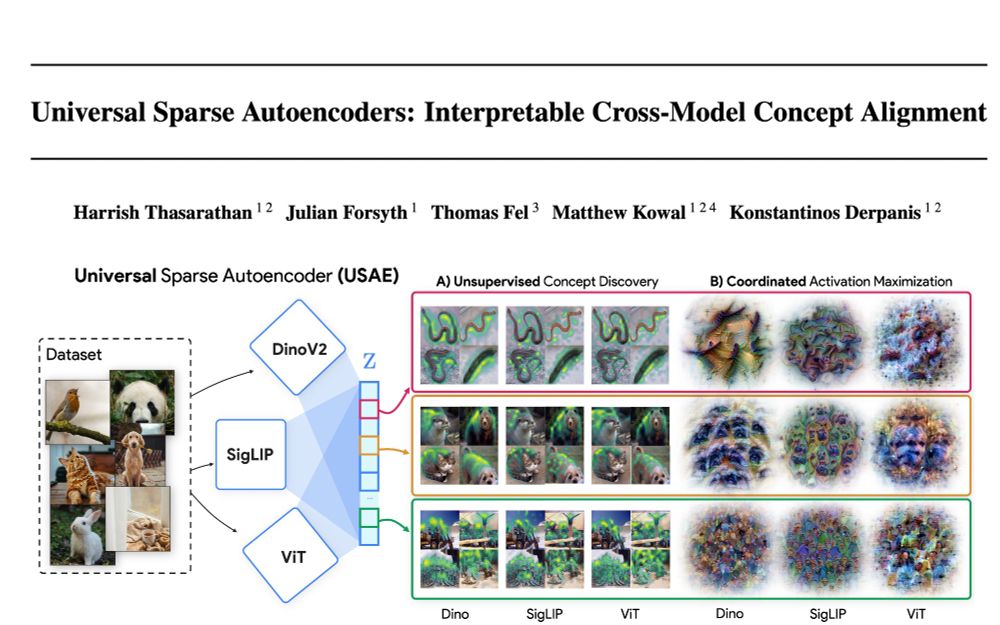

Representational Similarity via Interpretable Visual Concepts

arxiv.org/abs/2503.15699

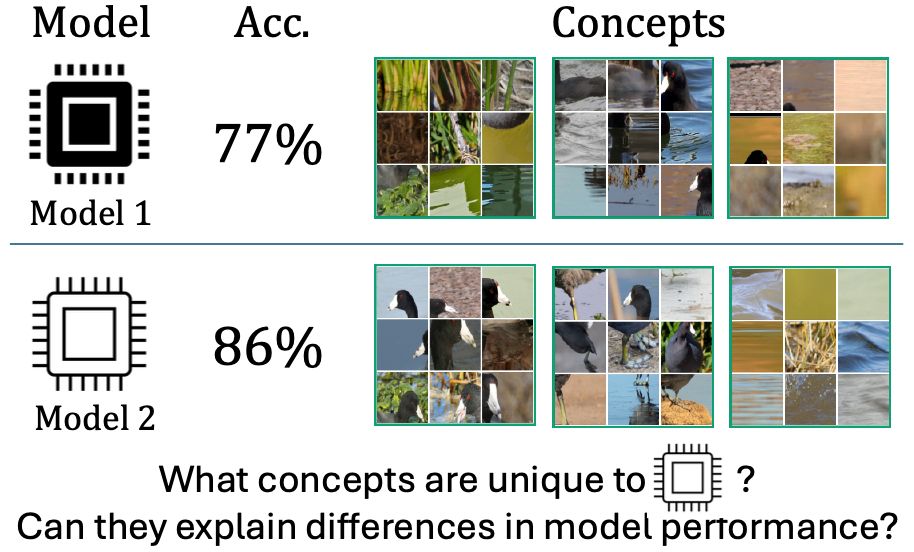

We all know ViT-Large performs better than ResNet-50, but which visual concepts drive this difference? Our new ICLR 2025 paper addresses this question! nkondapa.github.io/rsvc-page/
