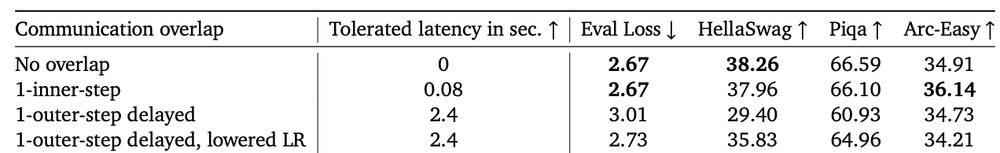

can we overlap communication with computation over hundreds of steps?

-- yes we can

in this work led by @SatyenKale, we improve DiLoCo and use 1177x less bandwidth than data-parallel

"asynchronous training where each copy of the model does local computation [...] it makes people uncomfortable [...] but it actually works"

yep, i can confirm, it does work for real

see arxiv.org/abs/2501.18512

--> Streaming DiLoCo with Overlapping Communication.

TL;DR: train data-parallel across the world with low bandwidth for the same performance: 400x fewer bits exchanged & huge latency tolerance

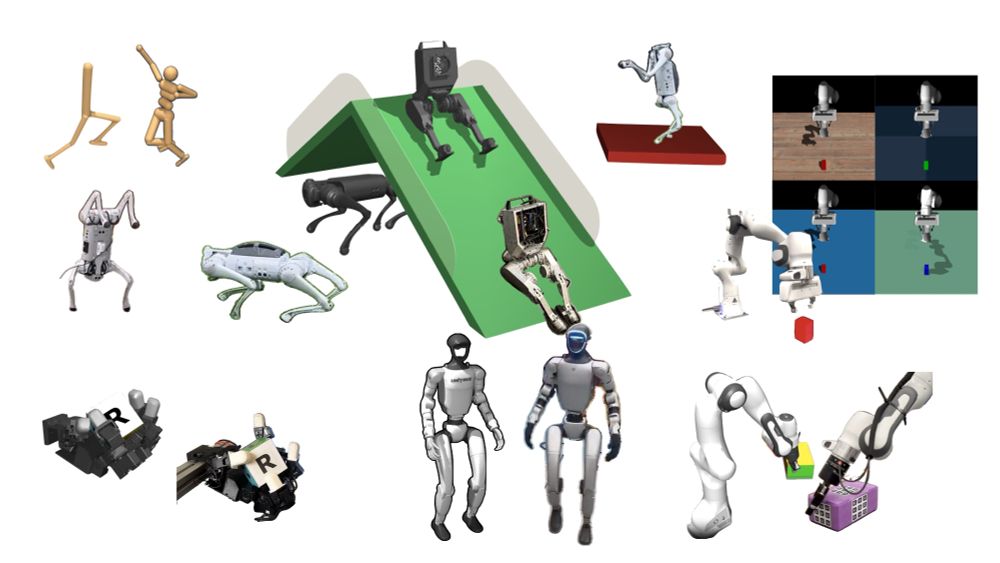

Combining MuJoCo’s rich and thriving ecosystem, massively parallel GPU-accelerated simulation, and real-world results across a diverse range of robot platforms: quadrupeds, humanoids, dexterous hands, and arms.

Get started today: pip install playground

Here's a talk about it, now on YouTube, given by my awesome colleague John Schultz!

www.youtube.com/watch?v=JyxE...

📃 Submission Portal: openreview.net/group?id=ICL...

🤗See you in Singapore!

For more details, check out the original thread ↪️🧵

We'll host a workshop on modularity at ICLR 2025 (late April), encompassing collaborative + decentralized + continual learning.

Those topics are on the critical path to building better AIs.

Interested? submit a paper and join us in Singapore!

sites.google.com/corp/view/mc...

The first open-source world-wide training of a 10B model. The underlying ML distributed algo is DiLoCo (arxiv.org/abs/2311.08105) but they also built tons of engineering on top of it to make it scalable.
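
A rough sketch of the DiLoCo idea, for intuition only: each worker trains locally for many steps, and workers only talk when they average their parameter deltas (the "outer gradient"). Everything here is a toy assumption: the quadratic loss, plain SGD for both the inner and outer optimizers (the paper uses AdamW inside and Nesterov momentum outside), and the names `local_sgd_steps` and `diloco_toy` are made up for illustration.

```python
def local_sgd_steps(params, target, inner_lr, num_steps):
    """Run plain SGD on the toy loss 0.5 * ||params - target||^2 (an assumption)."""
    params = list(params)
    for _ in range(num_steps):
        grads = [p - t for p, t in zip(params, target)]
        params = [p - inner_lr * g for p, g in zip(params, grads)]
    return params


def diloco_toy(worker_targets, num_rounds=50, inner_steps=10,
               inner_lr=0.1, outer_lr=0.7):
    """Toy DiLoCo-style loop: many local steps, one sync per round."""
    dim = len(worker_targets[0])
    global_params = [0.0] * dim
    num_syncs = 0
    for _ in range(num_rounds):
        # Each worker starts from the shared params and trains with no communication.
        local_results = [
            local_sgd_steps(global_params, target, inner_lr, inner_steps)
            for target in worker_targets
        ]
        # Outer gradient: average over workers of (shared params - local params).
        outer_grad = [
            sum(global_params[d] - local[d] for local in local_results)
            / len(local_results)
            for d in range(dim)
        ]
        # Outer step (plain SGD here, a simplification). This is the only sync.
        global_params = [p - outer_lr * g
                         for p, g in zip(global_params, outer_grad)]
        num_syncs += 1
    return global_params, num_syncs


# Three workers whose local optima differ; the average loss is minimized at the mean.
targets = [[1.0, 0.0], [2.0, 4.0], [6.0, 2.0]]
params, syncs = diloco_toy(targets)
print(params, "syncs:", syncs, "vs", 50 * 10, "for per-step data-parallel")
```

In this toy, the workers sync once per round instead of once per step, so the number of communication rounds drops by a factor of `inner_steps`.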

And with beautiful 3blue1brown-style animation: https://github.com/3b1b/manim.

Original RoPE paper: arxiv.org/abs/2104.09864

towardsdatascience.com/distributed-decentralized-training-of-neural-networks-a-primer-21e5e961fce1

DP's AllReduce, variants thereof + advanced methods such as SWARM (arxiv.org/abs/2301.11913) and DiLoCo (arxiv.org/abs/2311.08105)
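
To make the AllReduce part concrete, here is a small single-process simulation of a ring all-reduce, one common way to implement it. It is a sketch under assumptions, not how any particular library does it: each worker's gradient is a list with exactly one number per chunk, "sending" is just a list write, and `ring_all_reduce` is a hypothetical name.

```python
def ring_all_reduce(worker_grads):
    """Simulate a ring all-reduce (sum) over n workers.

    worker_grads[i][c] is worker i's value for chunk c; this toy assumes
    exactly n chunks, one number each.
    """
    n = len(worker_grads)
    data = [list(g) for g in worker_grads]

    # Phase 1, reduce-scatter: after n - 1 steps, worker i holds the full
    # sum for chunk (i + 1) % n.
    for step in range(n - 1):
        # Collect all messages first so sends within a step are simultaneous.
        messages = [(i, (i - step) % n, data[i][(i - step) % n])
                    for i in range(n)]
        for sender, chunk, value in messages:
            data[(sender + 1) % n][chunk] += value

    # Phase 2, all-gather: circulate the finished chunks around the ring.
    for step in range(n - 1):
        messages = [(i, (i + 1 - step) % n, data[i][(i + 1 - step) % n])
                    for i in range(n)]
        for sender, chunk, value in messages:
            data[(sender + 1) % n][chunk] = value

    return data


grads = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
print(ring_all_reduce(grads))  # every worker ends with [111, 222, 333]
```

Dividing each entry by the number of workers gives the averaged gradient that plain data-parallel training applies at every step.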

* Adding a truth-seeking classifier probe on the token embeddings can have better performance than the actual generation

* Is something wrong going on in the decoding part?

* Those error detectors don't generalize across datasets
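
For readers unfamiliar with probes, a minimal sketch of the idea: freeze the model, take a hidden vector per answer, and fit a tiny classifier that predicts whether the answer is correct. The posts above do not say which probe is used, so the logistic regression, the 2-D feature vectors (stand-ins for real hidden states), and the names `train_linear_probe` and `probe_predict` are all assumptions for illustration.

```python
import math


def train_linear_probe(features, labels, lr=0.5, epochs=200):
    """Fit a logistic-regression probe with full-batch gradient descent."""
    dim = len(features[0])
    weights, bias = [0.0] * dim, 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            z = sum(w * xi for w, xi in zip(weights, x)) + bias
            p = 1.0 / (1.0 + math.exp(-z))
            err = p - y  # gradient of the log loss w.r.t. z
            for d in range(dim):
                grad_w[d] += err * x[d]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def probe_predict(weights, bias, x):
    """Return 1 if the probe thinks the answer is correct, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


# Made-up toy "hidden states": label 1 = correct answer, 0 = error.
toy_features = [[1.0, 0.2], [0.8, -0.1], [1.2, 0.3],
                [-1.0, 0.1], [-0.9, -0.2], [-1.1, 0.4]]
toy_labels = [1, 1, 1, 0, 0, 0]
w, b = train_linear_probe(toy_features, toy_labels)
print([probe_predict(w, b, x) for x in toy_features])
```

A probe fit this way on one dataset's features has no guarantee of transferring to another, which is consistent with the last bullet above.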

deepmind.google/public-polic...

LLMs are prone to collude when they know they cannot be "caught"

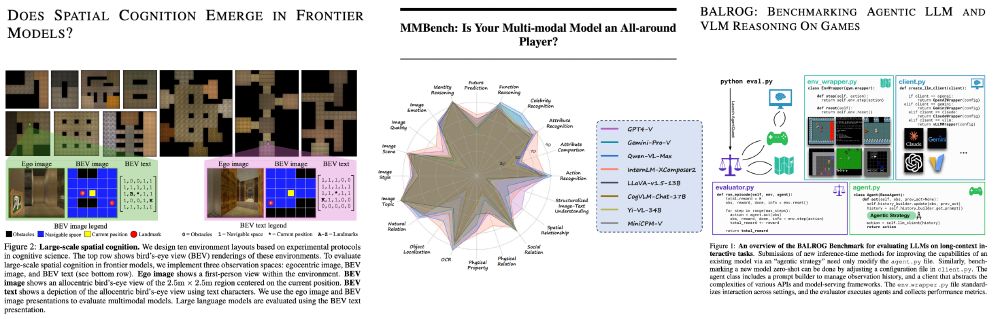

- arxiv.org/abs/2410.06468 (does spatial cognition ...)

- arxiv.org/abs/2307.06281 (MMBench)

- arxiv.org/abs/2411.13543 (BALROG) - additional points for the LOTR ref.

recently, @primeintellect.bsky.social announced that they finished training their 10B model, distributed across the world.

what is it exactly?

🧵

* Add a small MLP + classifier which predicts a temperature per token

* They train the MLP with a variant of DPO (arxiv.org/abs/2305.18290), treating the temperatures as latent variables

* low temp for math, high for creative tasks
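
A quick sketch of what a per-token temperature changes at sampling time. The temperatures are simply passed in as a list here; in the paper they would come from the learned MLP, which this toy does not model. The function names are hypothetical.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Softmax of logits / temperature, shifted by the max for stability."""
    scaled = [l / temperature for l in logits]
    top = max(scaled)
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_with_per_token_temperature(step_logits, step_temperatures, seed=0):
    """Sample one token per step, each step using its own temperature."""
    rng = random.Random(seed)
    tokens = []
    for logits, temperature in zip(step_logits, step_temperatures):
        probs = softmax_with_temperature(logits, temperature)
        tokens.append(rng.choices(range(len(probs)), weights=probs, k=1)[0])
    return tokens


logits = [2.0, 1.0, 0.0]
# Low temperature sharpens the distribution, high temperature flattens it.
print(softmax_with_temperature(logits, 0.3))
print(softmax_with_temperature(logits, 1.5))
print(sample_with_per_token_temperature([logits, logits], [0.3, 1.5]))
```

With a low temperature almost all the mass sits on the top token (what you want for math), while a high temperature spreads it out (more room for creative tasks).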

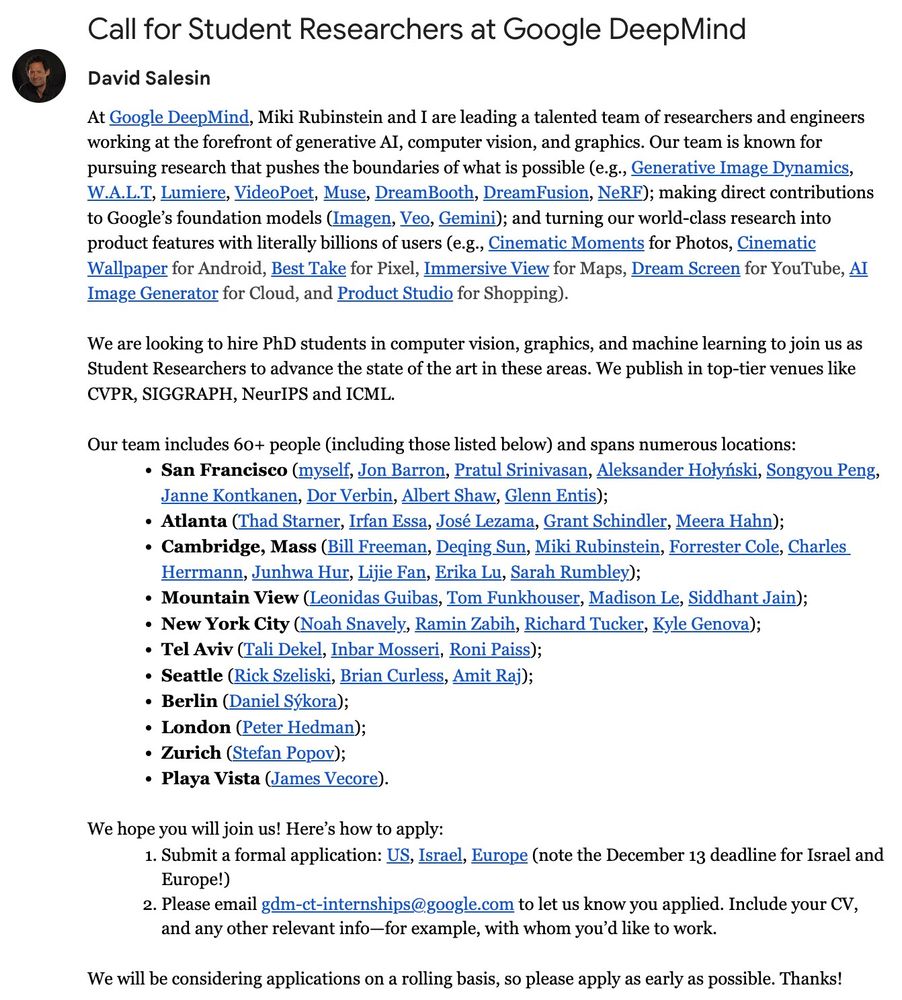

Research Scientist on the HEART team led by Iason Gabriel: boards.greenhouse.io/deepmind/job...

Research Engineer on the SAMBA team led by Kristian Lum: boards.greenhouse.io/deepmind/job...

Similar to min-p (arxiv.org/abs/2407.01082), Top-nσ aims to cut too-low probs before sampling.

while min-p uses a threshold set as a % of the max prob, Top-nσ notes that logits follow a Gaussian, and cuts logits that sit more than n sigma below the max logit
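
The two filters side by side, as a rough sketch. It assumes min-p keeps tokens whose probability is at least `min_p` times the top probability, and that Top-nσ keeps tokens whose logit is within `n` standard deviations of the max logit. The population standard deviation and the function names are choices made here for illustration, not taken from either paper.

```python
import math


def softmax(logits):
    top = max(logits)
    exps = [math.exp(l - top) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def min_p_keep(logits, min_p=0.1):
    """Indices kept by min-p: prob >= min_p * max prob."""
    probs = softmax(logits)
    threshold = min_p * max(probs)
    return [i for i, p in enumerate(probs) if p >= threshold]


def top_n_sigma_keep(logits, n=1.0):
    """Indices kept by a Top-n-sigma style cut: logit >= max - n * std."""
    mean = sum(logits) / len(logits)
    # Population std is an assumption; a real implementation may differ.
    std = math.sqrt(sum((l - mean) ** 2 for l in logits) / len(logits))
    threshold = max(logits) - n * std
    return [i for i, l in enumerate(logits) if l >= threshold]


logits = [5.0, 4.5, 1.0, 0.0, -1.0]
print(min_p_keep(logits, min_p=0.1))    # [0, 1]
print(top_n_sigma_keep(logits, n=1.0))  # [0, 1]
print(top_n_sigma_keep(logits, n=2.0))  # [0, 1, 2]
```

One difference visible in this toy: min-p thresholds probabilities (after the softmax), while the Top-nσ style cut thresholds the raw logits.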
