Prev: co-founder and CEO of Argilla (acquired by Hugging Face)

1️⃣ Generate from your documents, dataset, or dataset description.

2️⃣ Configure it.

3️⃣ Generate the synthetic dataset.

4️⃣ Fine-tune the retrieval and reranking models.

5️⃣ Build a RAG pipeline.

We've added a new chapter about the very basics of Argilla to the Hugging Face NLP course. Learn how to set up an Argilla instance, load & annotate datasets, and export them to the Hub.

Any feedback on how to improve it is welcome!

📊 Current stats:

- 115 languages represented

- 419 amazing contributors

- 24 languages with complete datasets

But we're not done yet! 🧵

Write a prompt and wait for your dataset to be pushed to Argilla or the Hub.

Evaluate, review and fine-tune a model.

Blog:

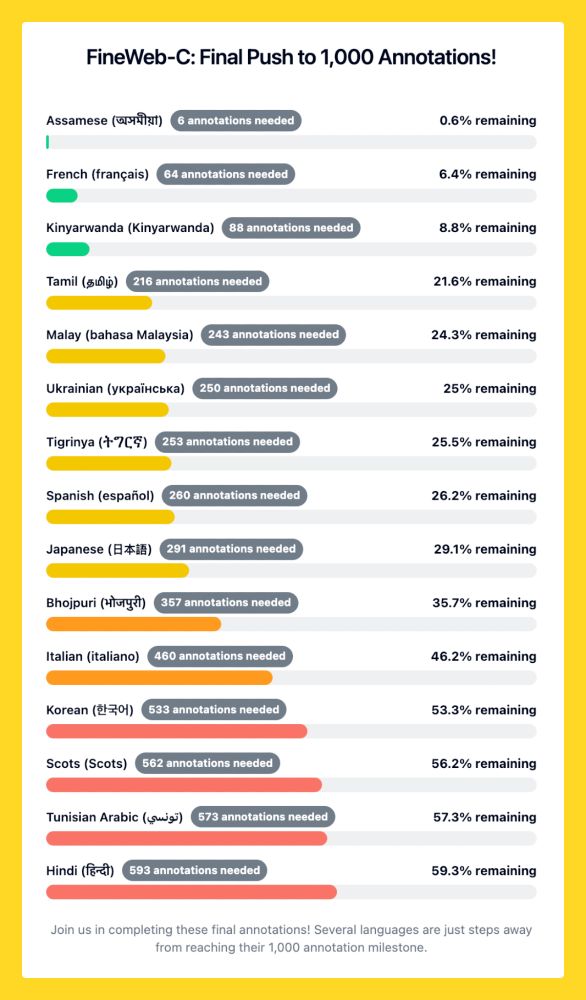

It's exciting to see the progress of many languages in FineWeb-C:

- Total annotations submitted: 41,577

- Languages with annotations: 106

- Total contributors: 363

Many FineWeb-C languages are close to 1,000 annotations!

Assamese is 99.4% done, French needs 64 more annotations, and Tamil needs 216.

Please help us reach the goal: huggingface.co/spaces/data-...

With Argilla 2.6.0, you can push your data to the Hub from the UI.

Let’s make 2025 the year anyone can build more transparent and accountable AI—no coding or model skills needed.

Let me show you how EASY it is to export your annotated datasets from Argilla to the Hugging Face Hub. 🤩

Take a look at this quick demo 👇

💁♂️ More info about the release at github.com/argilla-io/a...

#AI #MachineLearning #OpenSource #DataScience #HuggingFace #Argilla

A no-code tool that makes creating datasets with LLMs a breeze, so ANYONE can build datasets and models in minutes.

Blog: https://buff.ly/4gybyoT

GitHub: https://buff.ly/49IDSmd

Space: https://buff.ly/3Y1S99z

@hf.co

data-is-better-together-fineweb-c.hf.space/share-your-p...

I've just contributed 100 examples to this dataset:

data-is-better-together-fineweb-c.hf.space/share-your-p...

Big thanks to @dvilasuero.hf.co, @nataliaelv.hf.co and team 🙌

The community currently shares prompt templates in a wide variety of formats: in datasets, in model cards, as strings in .py files, as .txt/... 🧵

data-is-better-together-fineweb-c.hf.space/share-your-p...

If you want to contribute, sign in with @hf.co and find your language

Join the FineWeb 2 Community Annotation Sprint to create an open training dataset with full transparency and human validation in many languages.

Review datasets in your language and help identify the best sources for training.

#AI #MachineLearning #Webhooks #TechUpdate

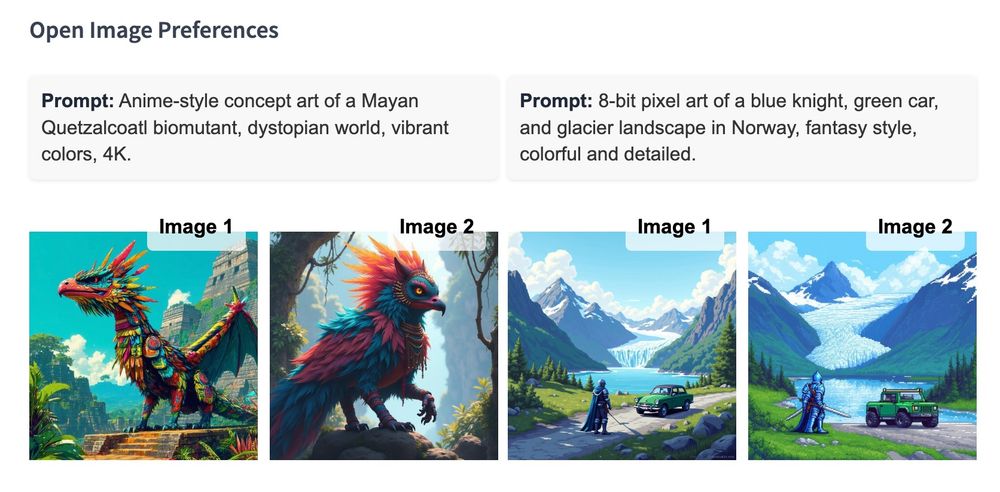

Blog: huggingface.co/blog/image-p...

- Open-source dataset for text2image

- 10K samples manually evaluated by the HF community.

- Binarized format for SFT, DPO, or ORPO.

It comes with a nice blog post explaining the steps to pre-process and generate the data, along with the results.

The result of months of work aimed at advancing multilingual LLM evaluation.

Built together with the community and amazing collaborators at Cohere4AI, MILA, MIT, and many more.

Let's get the highest-quality data for open foundation models, capturing all the nuances and diversity of each language, with full data provenance and transparency.

Join us as language lead:

docs.google.com/forms/d/10XI...

We are really close to getting leads for 100 languages! Can you help us cover the remaining 200?

🧵>>

Argilla now has full support for webhooks, which means you can do some pretty cool stuff, like model training on the fly as annotations are created. 🤯

#MachineLearning #NLP #DataLabeling

In case you spent the week reading GDPR legislation and missed everything: it’s all about vision language models and image preference datasets.

>> 🧵 Here are the models and datasets you can use in your projects.
