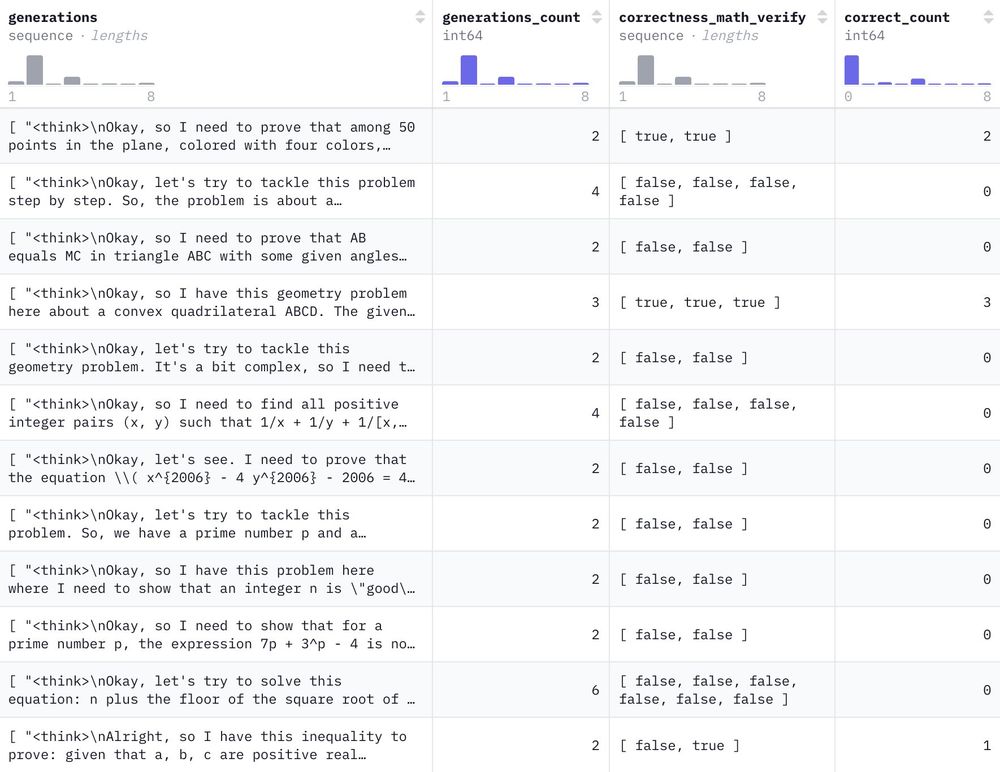

We commandeered the HF cluster for a few days and generated 1.2M reasoning-filled solutions to 500k NuminaMath problems with DeepSeek-R1 🐳

Have fun!

Hugging Face is openly reproducing the pipeline of 🐳 DeepSeek-R1. Open data, open training, open models, open collaboration.

🫵 Let's go!

github.com/huggingface/...

Follow along: github.com/huggingface/...

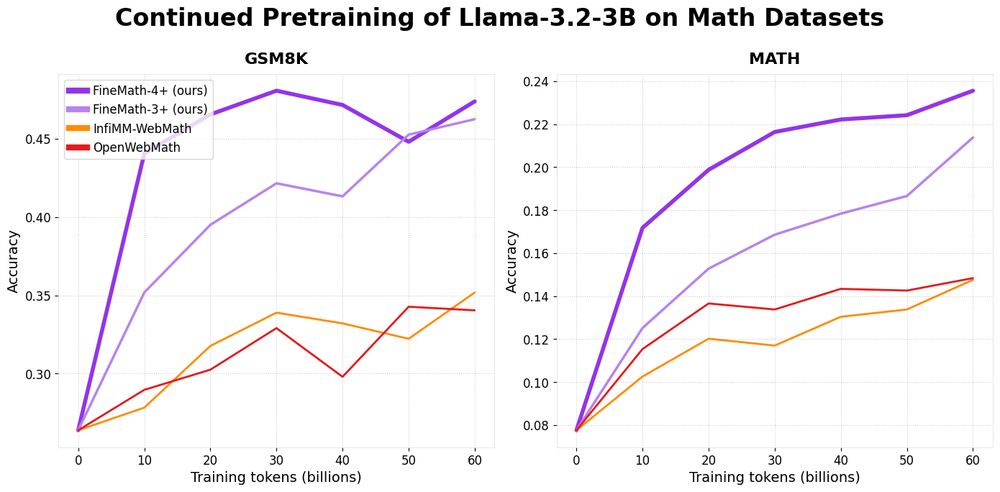

Math remains challenging for LLMs, and by training on FineMath we see considerable gains over other math datasets, especially on GSM8K and MATH.

🤗 huggingface.co/datasets/Hug...

Here’s a breakdown 🧵

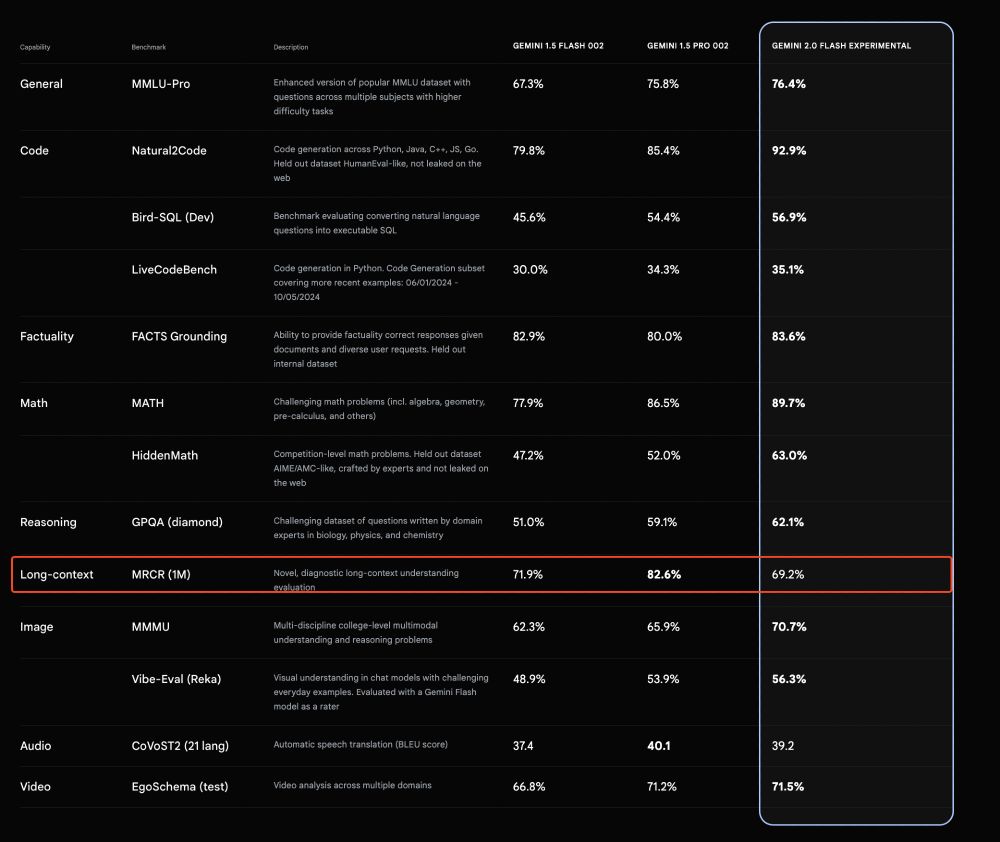

One odd thing is that the model seems to lose some long-context ability compared to Flash 1.5. If any Google friends could share insights, I'd love to hear them!

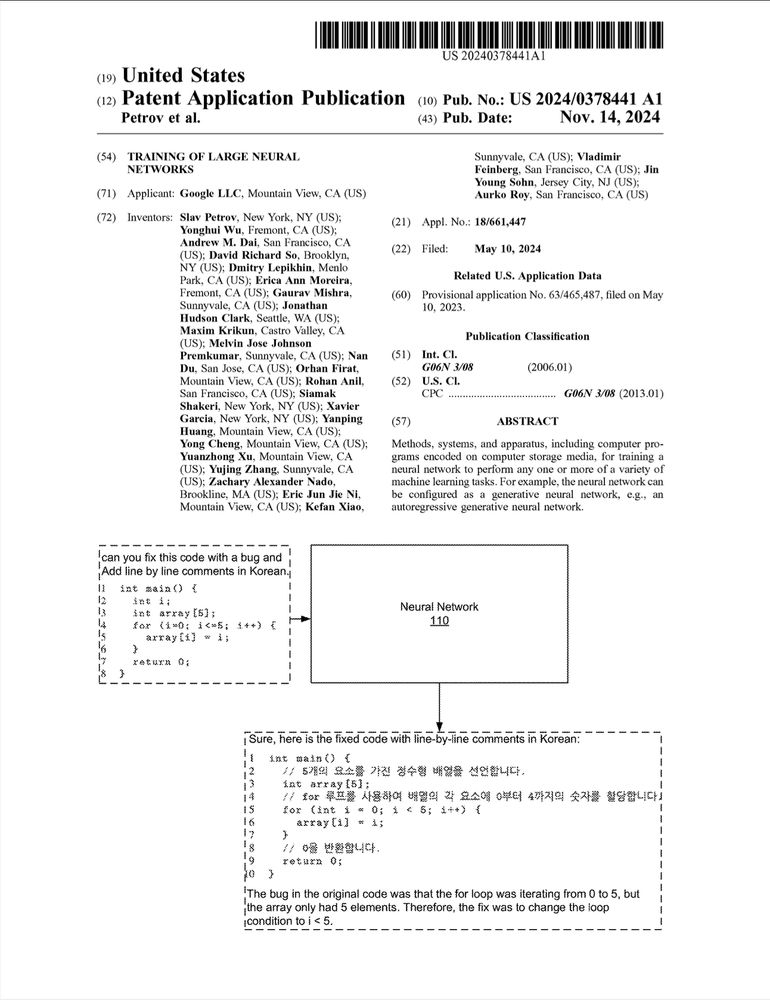

I don't know if this gives much information, but from a quick read-through it seems that:

- They are not only using "causal language modeling task" as a pre-training task but also "span corruption" and "prefix modeling". (ref [0805]-[0091])
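To make the three objectives concrete, here's a minimal sketch of how each one turns a token sequence into an (input, target) pair. It is only an illustration of the general techniques, not the method described in the referenced document. The function names, the whitespace "tokenizer", and the T5-style `<extra_id_N>` sentinel naming are all assumptions for the example.

```python
def causal_lm_example(tokens):
    """Causal LM: every token is predicted from the tokens before it."""
    return tokens[:-1], tokens[1:]


def prefix_lm_example(tokens, prefix_len):
    """Prefix LM: the prefix is given as context, only the rest is predicted."""
    return tokens[:prefix_len], tokens[prefix_len:]


def span_corruption_example(tokens, spans):
    """Span corruption: replace each (start, length) span with a sentinel.

    The target lists each sentinel followed by the tokens it replaced.
    Spans are assumed to be non-overlapping; the sentinel naming follows
    the T5 convention and is an assumption here.
    """
    inputs, targets = [], []
    cursor = 0
    for i, (start, length) in enumerate(sorted(spans)):
        sentinel = f"<extra_id_{i}>"
        inputs.extend(tokens[cursor:start])  # keep the uncorrupted text
        inputs.append(sentinel)              # mask the span in the input
        targets.append(sentinel)             # the target recovers the span
        targets.extend(tokens[start:start + length])
        cursor = start + length
    inputs.extend(tokens[cursor:])
    return inputs, targets


tokens = "the quick brown fox jumps over the lazy dog".split()
corrupted, recovered = span_corruption_example(tokens, [(1, 2), (6, 1)])
print(" ".join(corrupted))
print(" ".join(recovered))
```

The difference between the three is only in which part of the sequence the model sees and which part it has to produce, which is why they can be mixed in one pre-training run.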

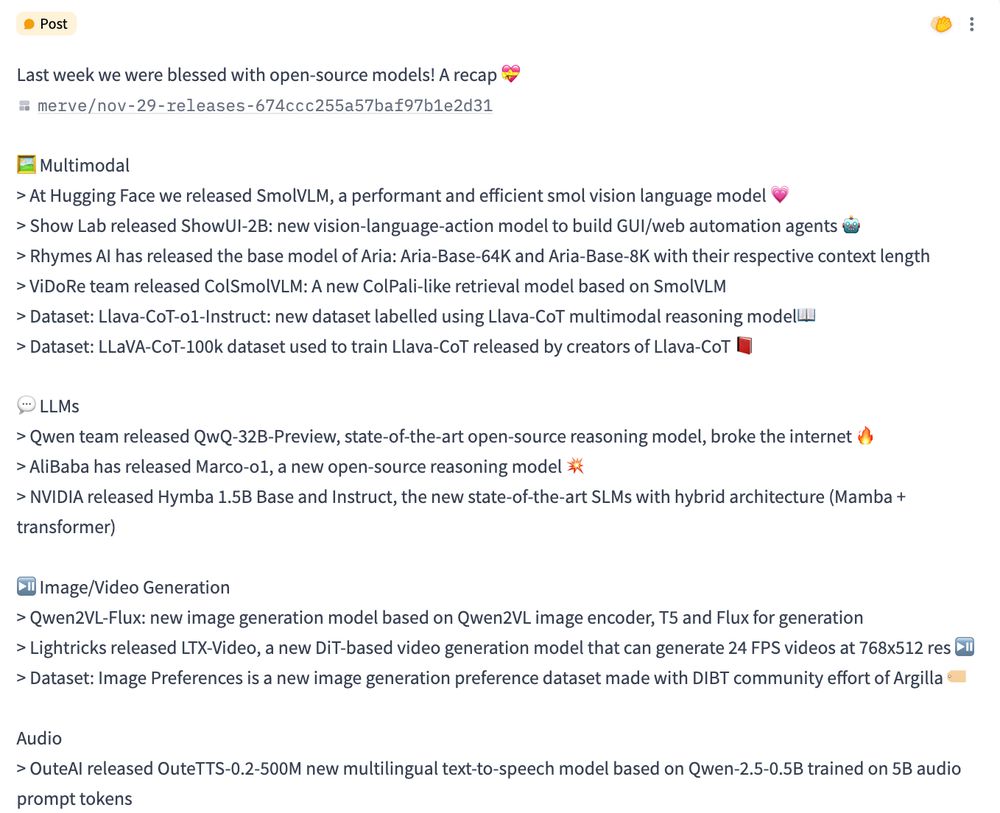

Here's a recap. Find the text-readable version here: huggingface.co/posts/merve/...

Check out @andimara.bsky.social's Smol Tools for summarization and rewriting. It uses SmolLM2 to summarize text and make it more friendly or professional, all running locally thanks to llama.cpp: github.com/huggingface/...

Any thoughts or cool ideas?

Powered by 🤗 Transformers.js and ONNX Runtime Web!

How many tokens/second do you get? Let me know! 👇

If you are:

* Driven

* Love OSS

* Interested in distributed PyTorch training/FSDPv2/DeepSpeed

Come work with me!

Fully remote, more details to apply in the comments

There was a mistake, a quick follow-up to mitigate it, and an apology. I worked with Daniel for years, and he is one of the people most concerned with the ethical implications of AI. Some replies are at Reddit-level toxicity. We need empathy.

US: apply.workable.com/huggingface/...

EMEA: apply.workable.com/huggingface/...

To do this, we're redesigning the upload and download infrastructure on the Hub. This post describes how; check the thread for details 🧵

huggingface.co/blog/rearchi...

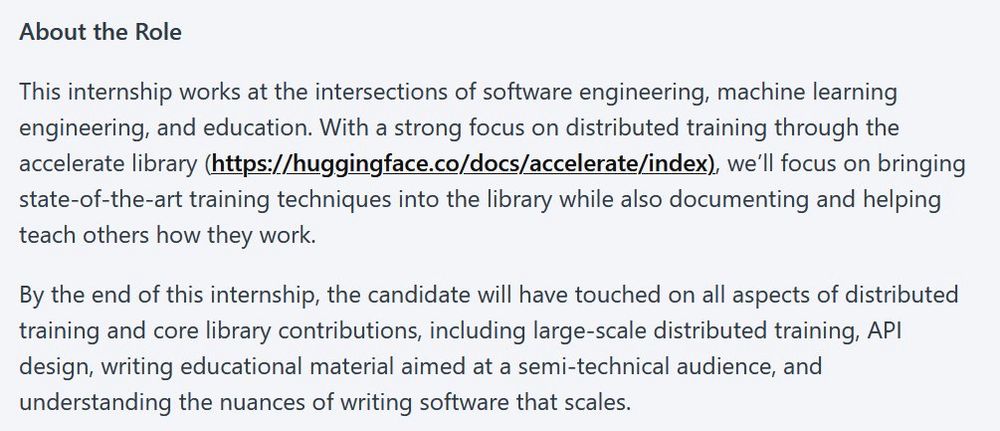

It uses a preliminary 16k context version of SmolLM2 to tackle long-context vision documents and higher-res images.

And yes, we’re cooking up versions with bigger context lengths. 👨‍🍳

Try it yourself here: huggingface.co/spaces/Huggi...

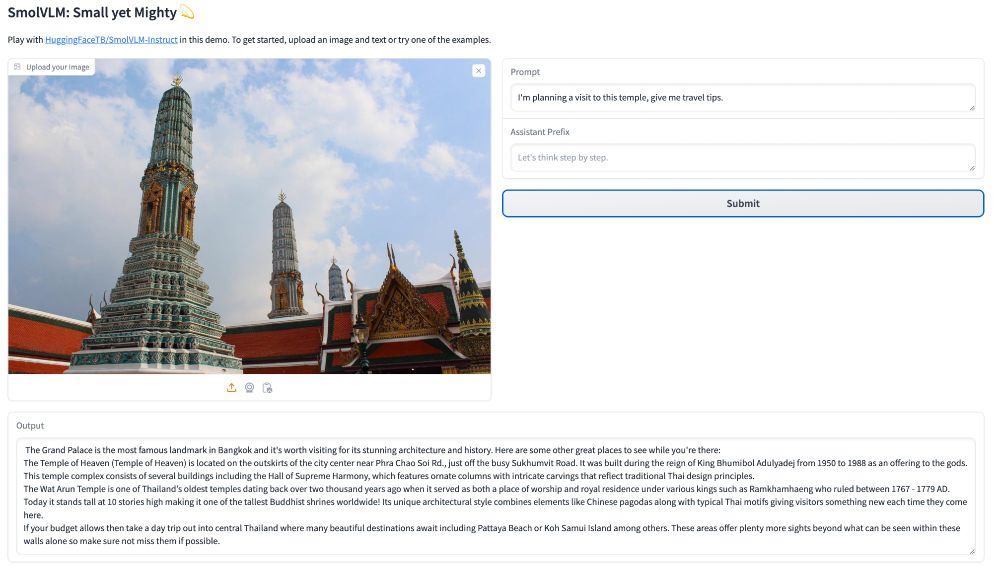

We are releasing SmolVLM: a new 2B small vision language model made for on-device use, fine-tunable on a consumer GPU, and immensely memory-efficient 🤠

We release three checkpoints under Apache 2.0: SmolVLM-Instruct, SmolVLM-Synthetic and SmolVLM-Base huggingface.co/collections/...

SmolVLM can be fine-tuned on Google Colab and run on a laptop! Or process millions of documents with a consumer GPU!

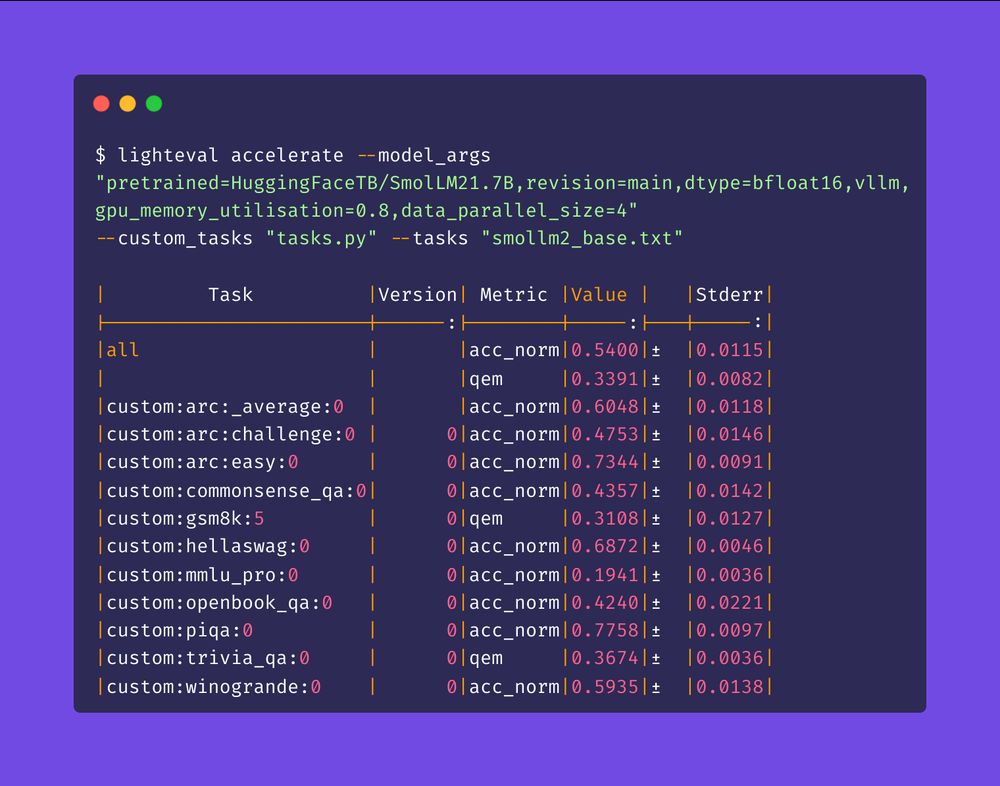

* any dataset on the 🤗 Hub can become an eval task in a few lines of code: customize the prompt, metrics, parsing, few-shots, everything!

* model- and data-parallel inference

* auto batching with the new vLLM backend

Fully open-source. We’ll release a blog post soon detailing how we trained it. I'm also super excited about all the demos coming in the next few days, and especially looking forward to people testing it with entropix 🐸
