The goal is to turn hard interpretability questions into tools for human empowerment, oversight and governance.

I’m thrilled to launch a new course called AI Safety & Alignment (AISAA), on the foundations & frontier research of making advanced AI systems safe and aligned, at @UniofOxford @aims_oxford!

what to expect 👇

robots.ox.ac.uk/~fazl/aisaa/

🧵

My latest paper tries to solve a longstanding problem afflicting fields such as decision theory, economics, and ethics — the problem of infinities.

Let me explain a bit about what causes the problem and how my solution avoids it.

1/N

arxiv.org/abs/2509.19389

More details (and more bragging) soon! And maybe even more news on Sep 25 👀

See you all in… Mexico? San Diego? Copenhagen? Who knows! 🌍✈️

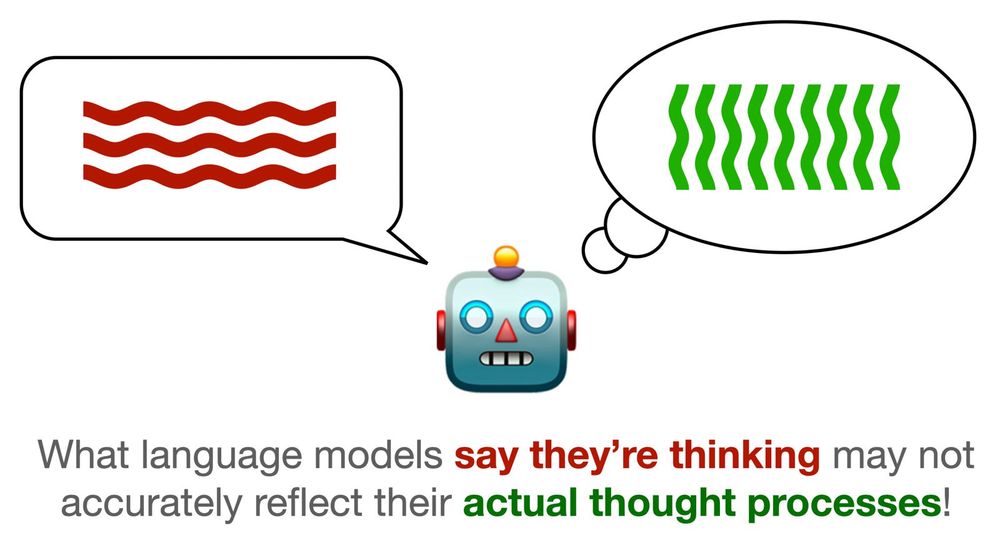

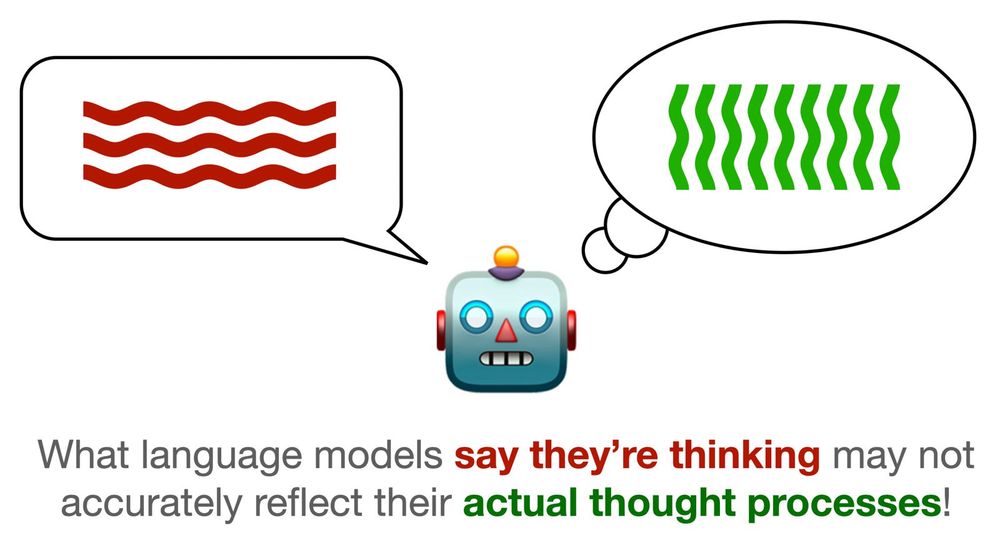

arxiv.org/pdf/2509.00117

Co-authors: Jared Perlo, @fbarez.bsky.social, Alex Robey & @floridi.bsky.social

1/4

Here, FUR offers a fine-grained test of whether LMs latently used information from their CoTs when answering!

Today’s AI doesn’t just assist decisions; it makes them. Governments use it for surveillance, prediction, and control — often with no oversight.

Technical safeguards aren’t enough on their own — but they’re essential for AI to serve society.

We'll draw from political theory, cooperative AI, economics, mechanism design, history, and hierarchical agency.

Joint work with Clara Suslik, Yihuai Hong, and @fbarez.bsky.social, advised by @megamor2.bsky.social

🔗 Paper: arxiv.org/abs/2505.22586

🔗 Code: github.com/yoavgur/PISCES

White-box LLMs & model security

Safe RL & reward hacking

Interpretability & governance tools

Remote or Oxford.

Apply by 30 May 23:59 UTC. DM with questions.

We’re hiring a Postdoc in Causal Systems Modelling to:

- Build causal & white-box models that make frontier AI safer and more transparent

- Turn technical insights into safety cases, policy briefs, and governance tools

DM if you have any questions.

After suffering through unhelpful reviews as an author, I want to do right by papers in my track.

> Follow @actinterp.bsky.social

> Website actionable-interpretability.github.io

@talhaklay.bsky.social @anja.re @mariusmosbach.bsky.social @sarah-nlp.bsky.social @iftenney.bsky.social

Paper submission deadline: May 9th!

Weiwei Pan, Siddharth Swaroop, @ankareuel.bsky.social, Robert Trager, @maosbot.bsky.social

Credit to Ben and Lisa for all the work!

We have a new centre at Oxford working on technical AI governance with Robert Trager, @maosbot.bsky.social, and many other great minds. We are hiring, so please reach out!

@bucds.bsky.social in 2026! BU has SCHEMES for LM interpretability & analysis, I couldn't be more pumped to join a burgeoning supergroup w/ @najoung.bsky.social @amuuueller.bsky.social. Looking for my first students, so apply and reach out!

Curious how small prompt tweaks impact LLM accuracy but don’t want to run endless inferences? We got you. Meet DOVE - a dataset built to uncover these sensitivities.

Use DOVE for your analysis or contribute samples. We're growing and welcome you aboard!

We bring you 🕊️ DOVE, a massive (250M!) collection of LLM outputs

On different prompts, domains, tokens, models...

Join our community effort to expand it with YOUR model predictions & become a co-author!

We propose a method to automatically find position-aware circuits, improving faithfulness while keeping circuits compact. 🧵👇

Important question: Do SAEs generalise?

We explore answerability detection in LLMs by comparing SAE features vs. linear residual stream probes.

Answer: probes outperform SAE features in-domain, while out-of-domain generalization varies sharply across features and datasets. 🧵
