x.com/withmartian/...

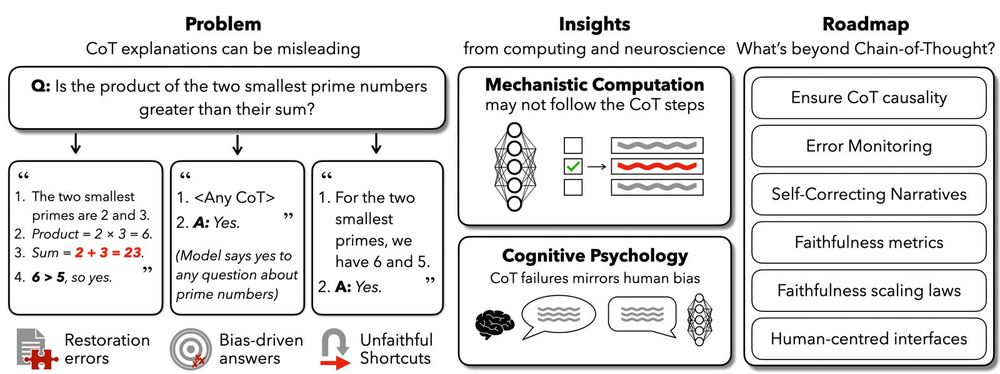

We don't just want nice visualizations or circuits in toy models. We want methods that allow us to meaningfully explain a system and fix it when things go wrong.

Why? Because code offers ground truth. Unlike natural language, code is formal, which lets us measure faithfulness and track progress.

Are our methods scalable? → Are they complete? → And most critically: are they actually useful for fixing things?

@hunarbatra.bsky.social, Matthew Farrugia-Roberts (Head TA) and @jamesaoldfield.bsky.social

🎟 We also have the wonderful Marta Ziosi as a guest tutorial speaker on AI governance and regulations!

• @yoshuabengio.bsky.social – Université de Montréal, Mila, LawZero
• @neelnanda.bsky.social – Google DeepMind
• Joslyn Barnhart – Google DeepMind
• Robert Trager – Oxford Martin AI Governance Initiative

1️⃣ The alignment problem -- foundations & present-day challenges

2️⃣ Frontier alignment methods & evaluation (RLHF, Constitutional AI, etc.)

3️⃣ Interpretability & monitoring incl. hands-on mech interp labs

4️⃣ Sociotechnical aspects of alignment: governance, risks, economics of AI, and policy

An AIMS CDT course with 15 h of lectures + 15 h of labs

💡 Lectures are open to all Oxford students, and we’ll do our best to record & make them publicly available.

Here, FUR offers a fine-grained test of whether LMs latently used information from their CoTs to produce answers!
