1️⃣ Can be directly trained on casual videos without the need for 3D annotation.

2️⃣ Built around a feed-forward transformer and lightweight refinement.

Code and more info: ⏩ fwmb.github.io/anycam/

Come by our SceneDINO poster at NeuSLAM today 14:15 (Kamehameha II) or Tue, 15:15 (Ex. Hall I 627)!

W/ Jevtić, @fwimbauer.bsky.social, @olvrhhn.bsky.social, Rupprecht, @stefanroth.bsky.social, @dcremers.bsky.social

www.jobs.cam.ac.uk/job/49361/

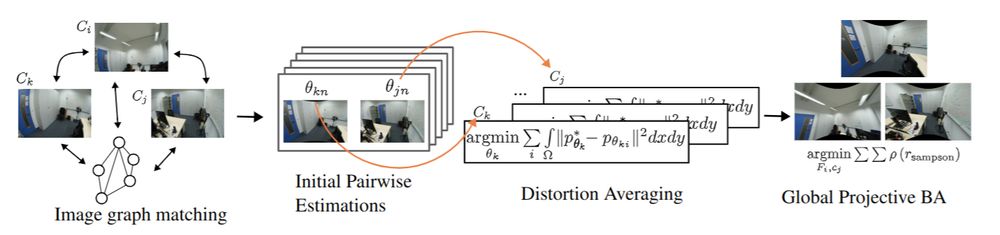

Turns out distortion calibration from multiview 2D correspondences can be fully decoupled from 3D reconstruction, greatly simplifying the problem

arxiv.org/abs/2504.16499

github.com/DaniilSinits...

🌍: visinf.github.io/scenedino/

📃: arxiv.org/abs/2507.06230

🤗: huggingface.co/spaces/jev-a...

@jev-aleks.bsky.social @fwimbauer.bsky.social @olvrhhn.bsky.social @stefanroth.bsky.social @dcremers.bsky.social

Surprisingly, yes!

Our #CVPR2025 paper with @neekans.bsky.social and @dcremers.bsky.social shows that the pairwise distances in both modalities are often enough to find correspondences.

⬇️ 1/4

This is a fully-funded position with salary level E13 at the newly founded DEEM Lab, as part of @bifold.berlin .

Details available at deem.berlin#jobs-2225

Turns out you can!

In our #CVPR2025 paper AnyCam, we directly train on YouTube videos and achieve SOTA results by using an uncertainty-based flow loss and monocular priors!

⬇️

cvpr.thecvf.com/Conferences/...

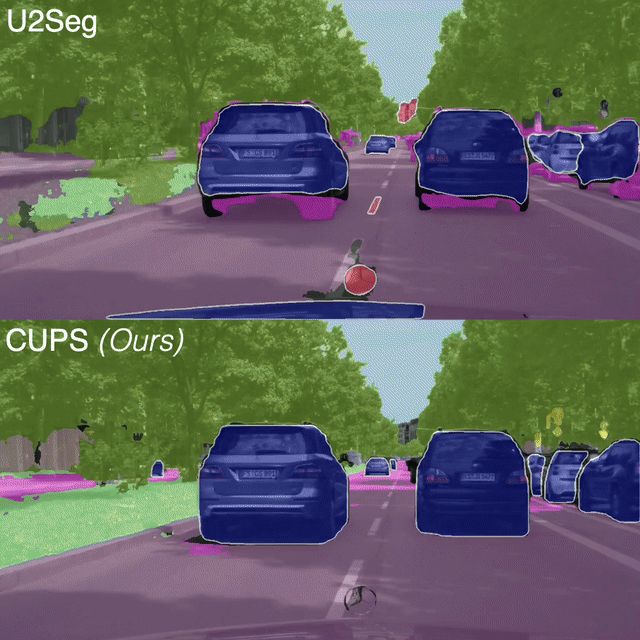

We present CUPS, the first unsupervised panoptic segmentation method trained directly on scene-centric imagery.

Using self-supervised features, depth & motion, we achieve SotA results!

🌎 visinf.github.io/cups

🔗 haofeixu.github.io/depthsplat/

🔥 Our method produces geometry-consistent, texture-consistent, and physically plausible 4D reconstructions.

📰 Check our project page sangluisme.github.io/TwoSquared/

❤️ @ricmarin.bsky.social @dcremers.bsky.social

Two rounds: #CVPR2025 and #ICCV2025. $18K in prizes + several $1.5k travel grants. Submit in May for Round 1! opendrivelab.com/challenge2025/ 🧵👇

🔗 autonomousvision.github.io/volsurfs/

📄 arxiv.org/pdf/2409.02482

@fwimbauer.bsky.social, Weirong Chen, Dominik Muhle, Christian Rupprecht, @dcremers.bsky.social

tl;dr: uncertainty-based loss + pre-trained depth and flow networks + test-time trajectory refinement

arxiv.org/abs/2503.23282

sites.google.com/view/eval-fo...

We welcome submissions (incl. published papers) on the analysis of emerging capabilities / limits in visual foundation models. #CVPR2025

For more details check out cvg.cit.tum.de

He'll talk about "𝐅𝐋𝐔𝐗: Flow Matching for Content Creation at Scale".

Live stream: youtube.com/live/nrKKLJX...

6pm GMT+1 / 9am PST (Mon, Feb 17th)

Emergent Visual Abilities and Limits of Foundation Models 📷📷🧠🚀✨

sites.google.com/view/eval-fo...

Submission Deadline: March 12th!

Code is available at: github.com/Sangluisme/I...

😊 Huge thanks to my amazing co-authors @dongliangcao.bsky.social and @dcremers.bsky.social

👏Special thanks to @ricmarin.bsky.social
