Go check it out and don't hesitate to write your thoughts/questions in the comments section!

Follow along: github.com/huggingface/...

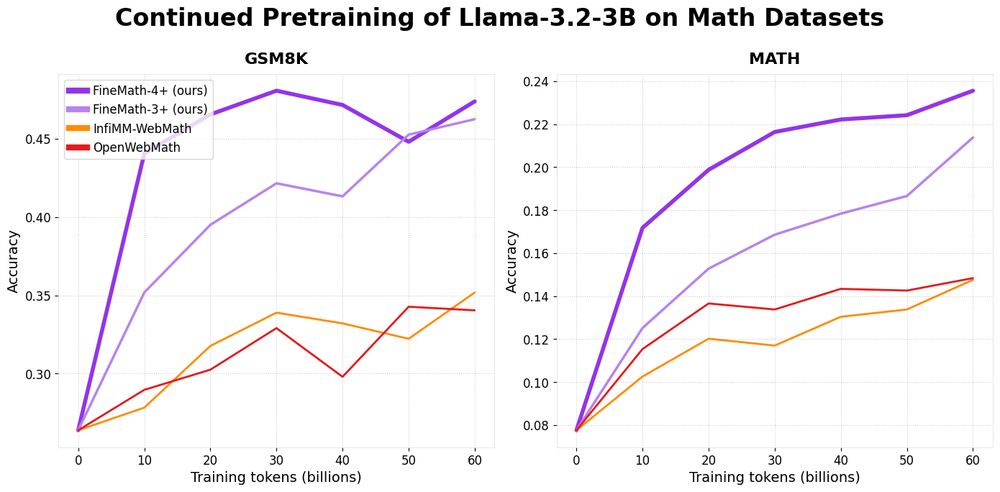

Math remains challenging for LLMs and by training on FineMath we see considerable gains over other math datasets, especially on GSM8K and MATH.

🤗 huggingface.co/datasets/Hug...

Here’s a breakdown 🧵

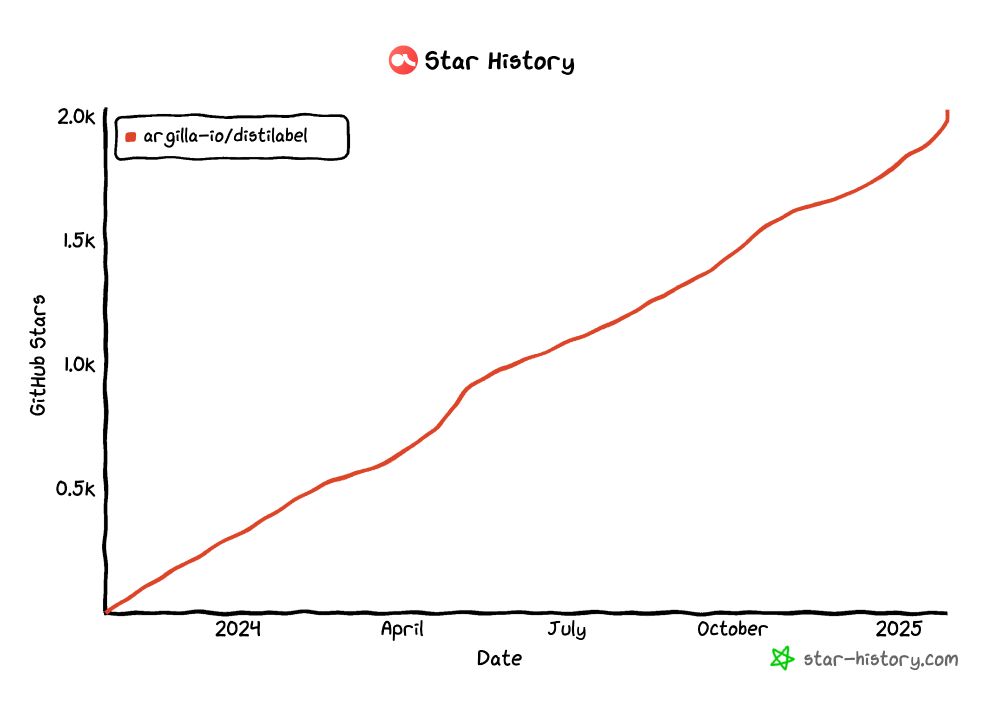

Let me show you how EASY it is to export your annotated datasets from Argilla to the Hugging Face Hub. 🤩

Take a look at this quick demo 👇

💁♂️ More info about the release at github.com/argilla-io/a...

#AI #MachineLearning #OpenSource #DataScience #HuggingFace #Argilla

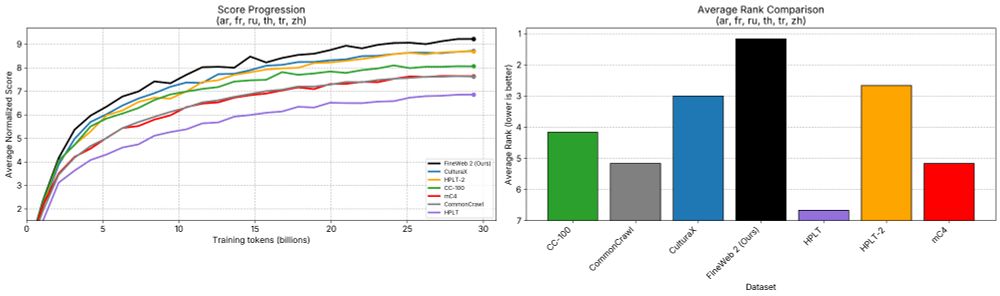

FineWeb 2 extends the data-driven approach to pre-training dataset design introduced in FineWeb 1, and now covers 1893 languages/scripts.

Details: huggingface.co/datasets/Hug...

A detailed open-science tech report is coming soon

🧵>>

SmolVLM can be fine-tuned on Google Colab and run on a laptop! Or process millions of documents with a consumer GPU!

It contains 1M rows of multi-turn conversations with diverse instructions!

huggingface.co/datasets/arg...

At @huggingface.bsky.social we'll launch a huge community sprint soon to build high-quality training datasets for many languages.

We're looking for Language Leads to help with outreach.

Find your language and nominate yourself:

forms.gle/iAJVauUQ3FN8...

Let's build the largest open community dataset to evaluate and improve image generation models.

Follow:

huggingface.co/data-is-bett...

And stay tuned here

Pre-training & evaluation code, synthetic data generation pipelines, post-training scripts, on-device tools & demos

Apache 2.0. V2 data mix coming soon!

Which tools should we add next?

The dataset for SmolLM2 was created by combining multiple existing datasets and generating new synthetic datasets, including MagPie Ultra v1.0, using distilabel.

Check out the dataset:

huggingface.co/datasets/Hug...

thanks @philschmid.bsky.social for the finetuning code

thanks @huggingface.bsky.social for the smol model

thanks @qgallouedec.bsky.social and friends for TRL

For those who don’t know me, I’m Gabriel, ML Engineer at @huggingface.bsky.social, where I develop tools like distilabel and Argilla to help you take care of your data 🤗

My posts here will mainly be about synthetic data and LLM post-training.