https://kaidik.github.io/

doi.org/10.18637/jss...

Check out the website (statimagcoll.github.io/RESI/) to report bugs or request model types.

@mntj21.bsky.social - first author, with @kaidikang.bsky.social

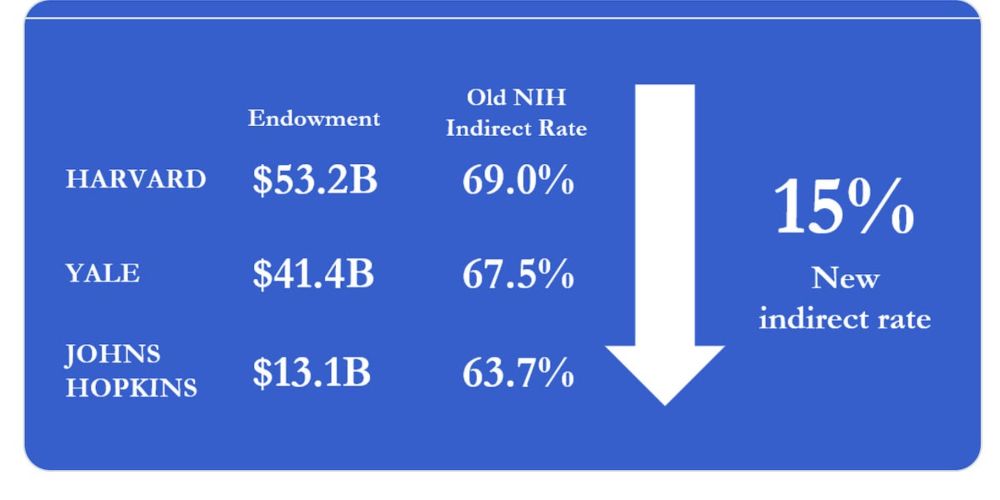

goodscience.substack.com/p/indirect-c...

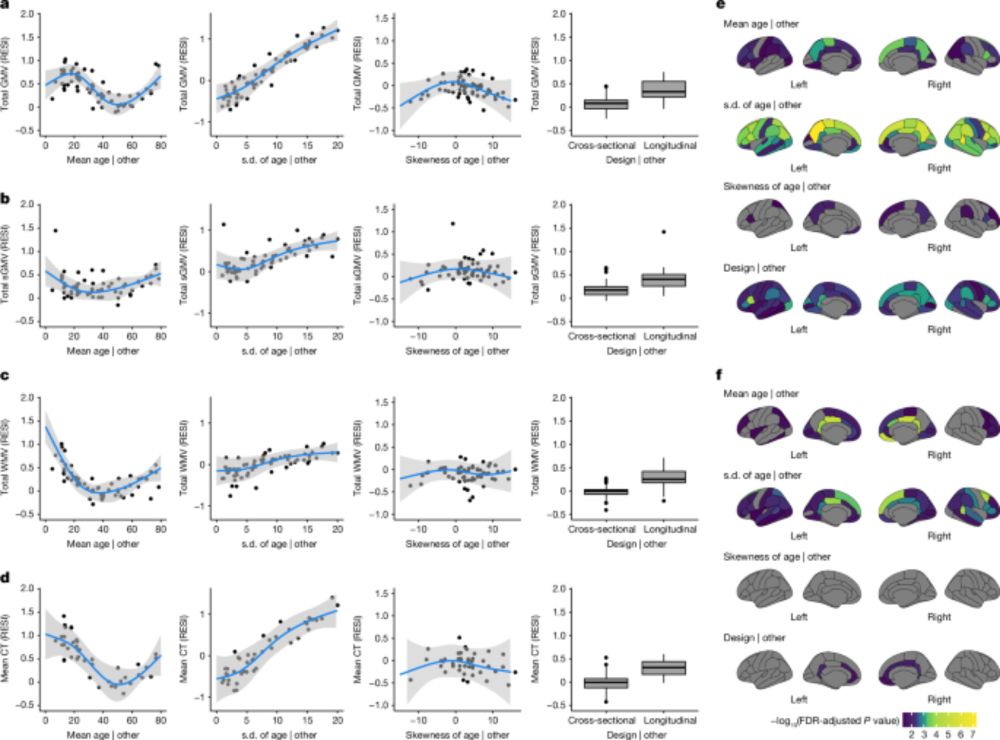

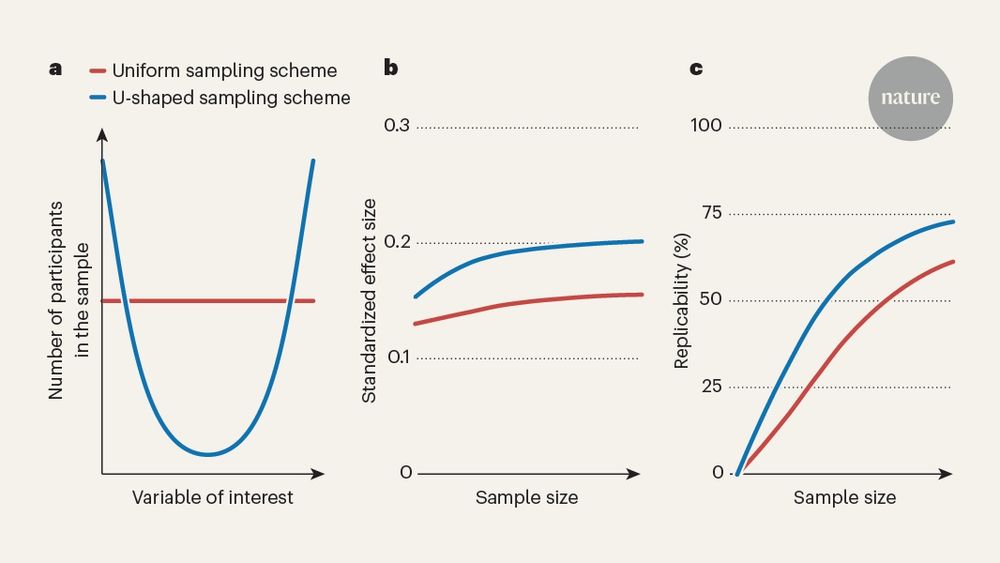

In this work, we investigated how study designs can be leveraged to improve the replicability of brain-wide association studies (BWAS).

👇👇👇

www.nature.com/articles/s41...

www.nature.com/articles/d41...

Also proud of the team at #MIDB for their support! @tervoclemmensb.bsky.social @bart-larsen.bsky.social and more!

If you are interested in optimizing study designs, take a look. It's a great read!

www.nature.com/articles/s41...