🌐 karstanczak.github.io

To help us improve future events, please share your feedback in our anonymous 5-minute survey. Your perspective is needed!

📝 Survey Link: forms.gle/mXtZ4mWUGSBN...

#GeBNLP #NLP

Please join us in welcoming:

🔹Anne Lauscher @a-lauscher.bsky.social

🔹Maarten Sap @maartensap.bsky.social

Full details: gebnlp-workshop.github.io/keynotes.html

See you on August 1! ☀️

#NLP #GeBNLP

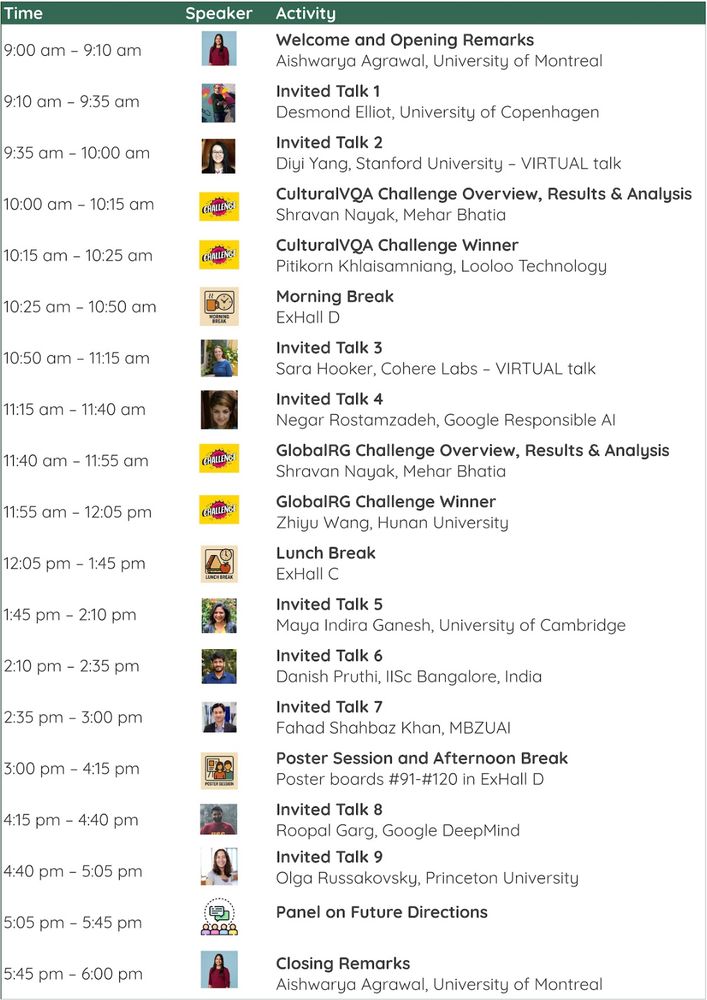

📅 on Thursday, June 12

⏲️ from 9AM CDT

🏛️ in Room 104E

Join us today at @cvprconference.bsky.social for amazing speakers, posters, and a panel discussion on making VLMs more geo-diverse and culturally aware!

#CVPR2025

Get ready for a full day of speakers, posters, and a panel discussion on making VLMs more geo-diverse and culturally aware 🌐

Check out the schedule below!

Our #ACL2025 (Main) paper shows that hallucinations under irrelevant contexts follow a systematic failure mode — revealing how LLMs generalize using abstract classes + context cues, albeit unreliably.

📎 Paper: arxiv.org/abs/2505.22630 1/n

We have extended the challenge submission deadline!

🛠️ New challenge deadline: Apr 22

Show your stuff in the CulturalVQA and GlobalRG challenges!

👉 sites.google.com/view/vlms4al...

Spread the word and keep those submissions coming! 🌍✨

We are releasing the first benchmark to evaluate how well automatic evaluators, such as LLM judges, can evaluate web agent trajectories.

Help shape the future of culturally aware & geo-diverse VLMs:

⚔️ Challenges (deadline: Apr 15): 🔗 https://sites.google.com/view/vlms4all/challenges

📄 Papers (4 pages; deadline: Apr 22): 🔗 https://sites.google.com/view/vlms4all/call-for-papers

Join us!

🌐 sites.google.com/view/vlms4all

forms.gle/VkPU4vS4EacE... #NLP

The workshop features fantastic speakers, a short-paper track, and two challenges, including one based on CulturalVQA. Don’t miss it!

@meharbhatia.bsky.social @rabiul.bsky.social @spandanagella.bsky.social @sivareddyg.bsky.social @svansteenkiste.bsky.social @karstanczak.bsky.social

Retrievers need to be aligned too! 🚨🚨🚨

Work done with the wonderful Nick and @sivareddyg.bsky.social

🔗 mcgill-nlp.github.io/malicious-ir/

Thread: 🧵👇

That's why we created SafeArena, a safety benchmark for web agents. See the thread and our paper for details: arxiv.org/abs/2503.04957 👇

Check out our new Web Agents ∩ Safety benchmark: SafeArena!

Paper: arxiv.org/abs/2503.04957

Human alignment balances social expectations, economic incentives, and legal frameworks. What if LLM alignment worked the same way? 🤔

Our latest work explores how social, economic, and contractual alignment can address incomplete contracts in LLM alignment 🧵

📢 Check out our Call for Papers! Find all the details on our website: gebnlp-workshop.github.io

We look forward to your submissions!

Check the new deadlines on our webpage: gebnlp-workshop.github.io/cfp.html

Work w/ fantastic advisors Dima Bahdanau and @sivareddyg.bsky.social

Thread 🧵:

The first-ever resource of multilingual, multicultural, and multigeographical stereotypes, built to support nuanced LLM evaluation and bias mitigation. We have been working on this around the world for almost **4 years**, and I am thrilled to share it with you all soon.

Let's complete the list with three more 🧵

From interpretability to bias/fairness and cultural understanding -> 🧵

See you again at #ACL2025NLP and #EMNLP2025!

#NLProc

@rnv.bsky.social, @dwright37.bsky.social, @zeerak.bsky.social
