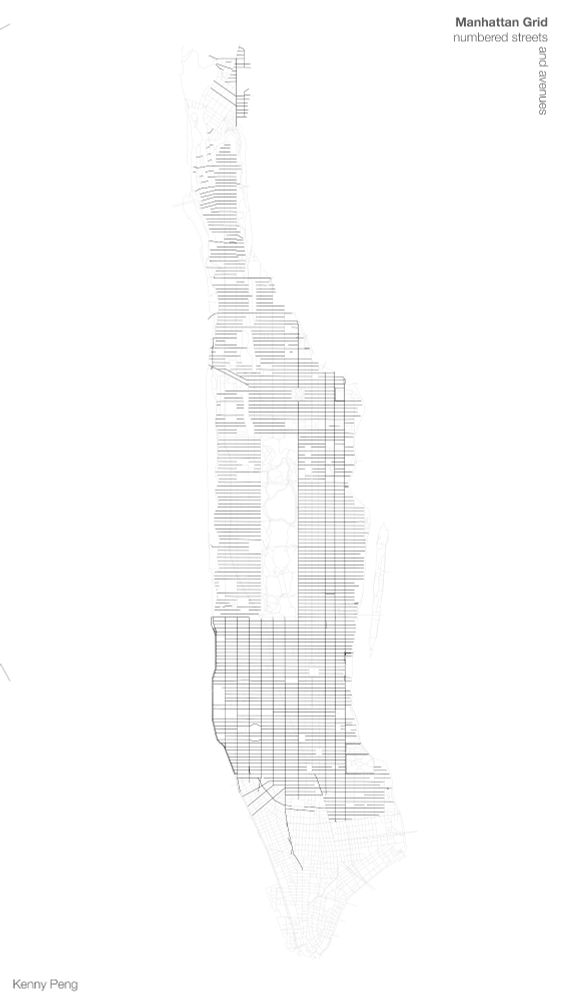

kennypeng.me

Kenny extracts minimal elements from a not-as-minimal-as-it-seems object: the Manhattan street grid. "I show how Manhattan’s numbered grid of streets and avenues is more complicated than you might realize," he says.

This point by @nkgarg.bsky.social has greatly shaped my thinking about the role of computer science in public service settings.

arxiv.org/abs/2507.03600

Come chat about "Sparse Autoencoders for Hypothesis Generation" (west-421), and "Correlated Errors in LLMs" (east-1102)!

Short thread ⬇️

arxiv.org/abs/2506.07962

In our #CVPR2025 paper, we propose a method to make them more compact without sacrificing coverage.

Draft: arxiv.org/abs/2502.04382

We're continuing to cook up new updates for our Python package: github.com/rmovva/Hypot...

(Recently, "Matryoshka SAEs", which help extract coarse and granular concepts without as much hyperparameter fiddling.)

📄: arxiv.org/abs/2412.16406

My answer today in Nature.

We will not be cowed. We will keep using AI to build a fairer, healthier world.

www.nature.com/articles/d41...

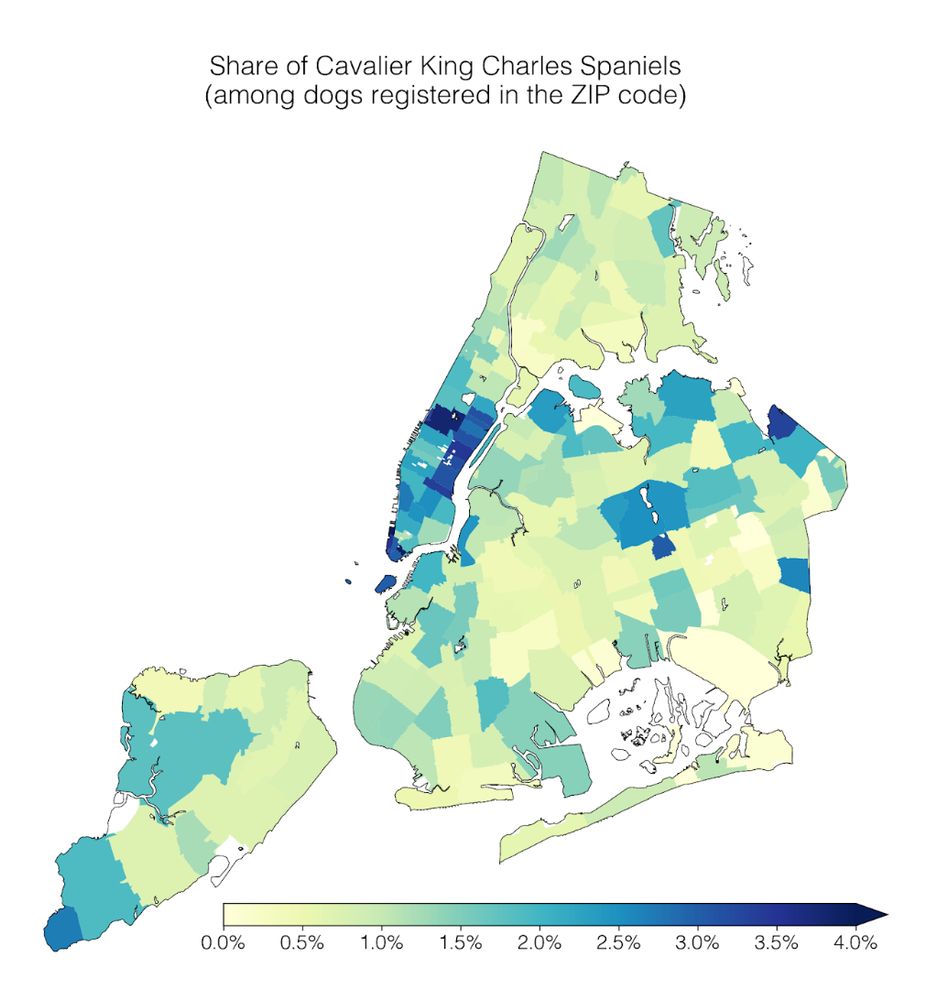

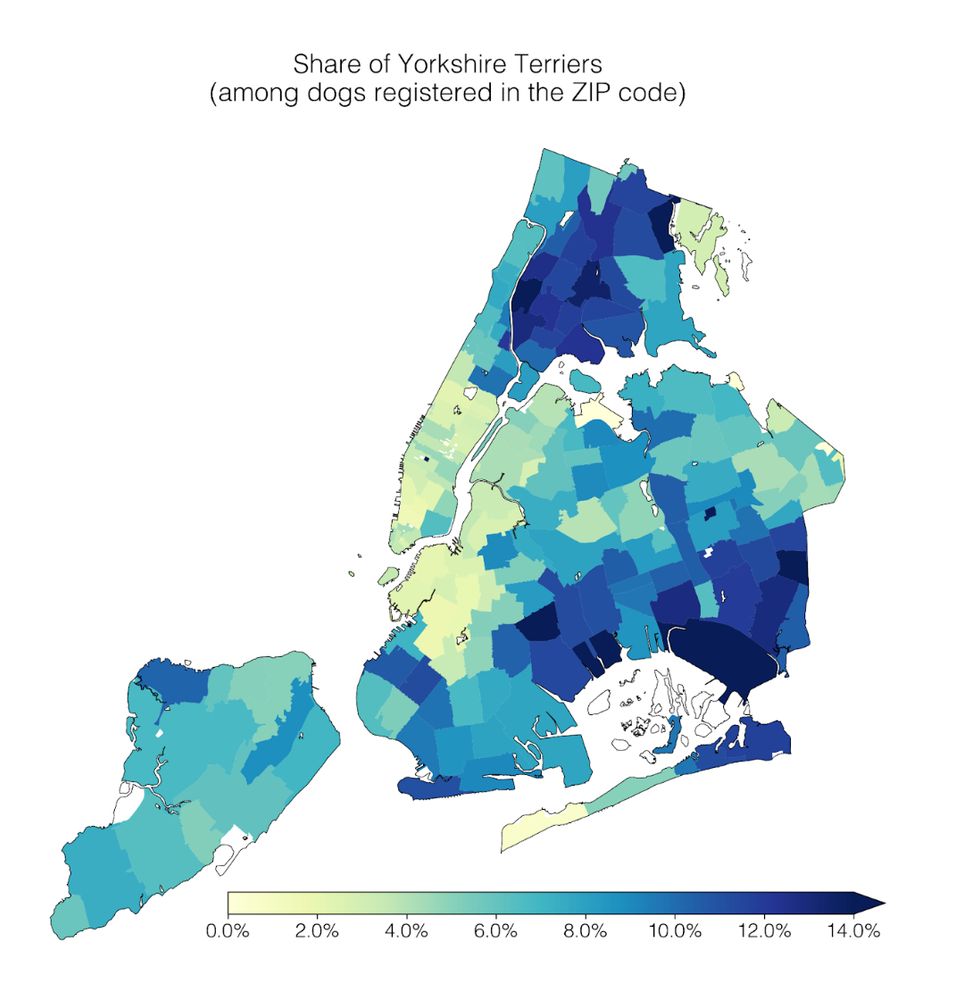

1) Geospatial trends: Cavalier King Charles Spaniels are common in Manhattan; the opposite is true for Yorkshire Terriers.

We build MIGRATE: a dataset of yearly flows between 47 billion pairs of US Census Block Groups. 1/5

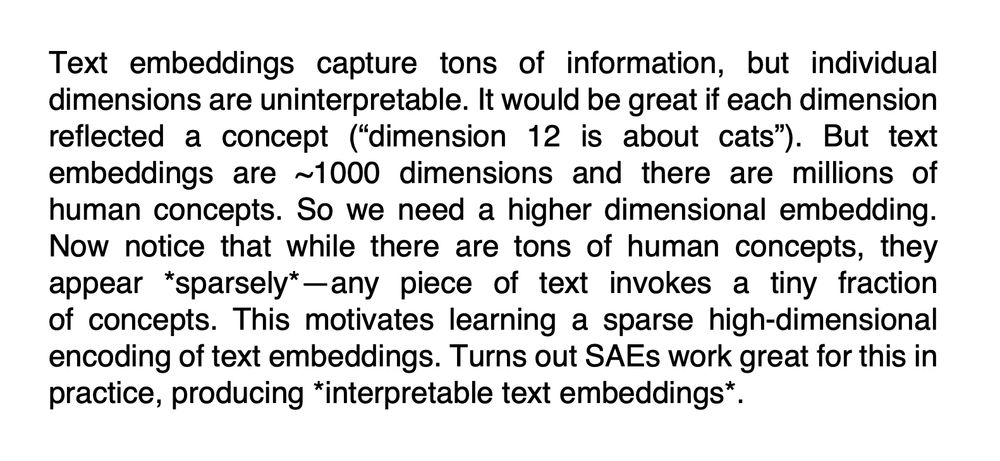

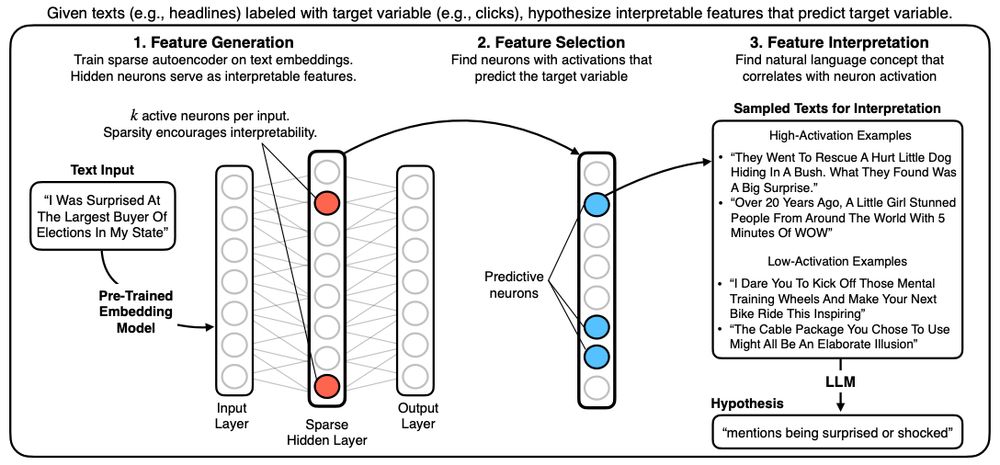

Our method, HypotheSAEs, produces interpretable text features that predict a target variable, e.g. features in news headlines that predict engagement. 🧵1/

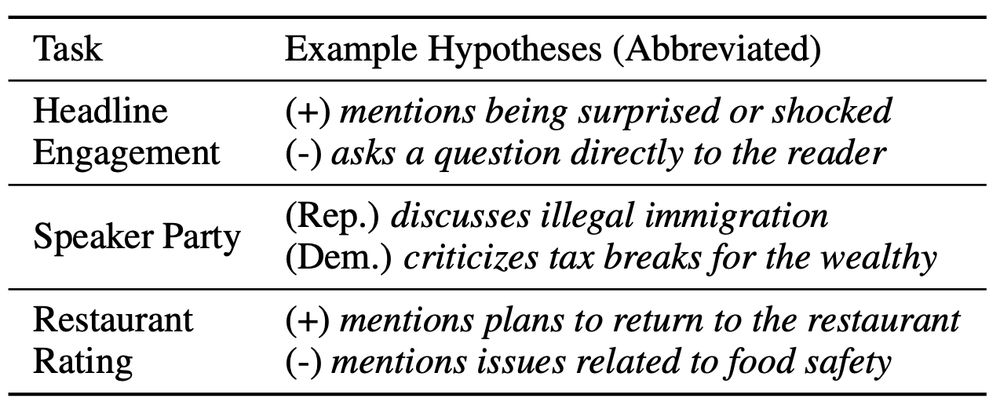

HypotheSAEs generates interpretable features of text data that predict a target variable: What features predict clicks from headlines / party from congressional speech / rating from Yelp review?

arxiv.org/abs/2502.04382

(And if you're at #AAAI, I'm presenting at 11:15am today in the Humans and AI session. Poster 12:30-2:30.)

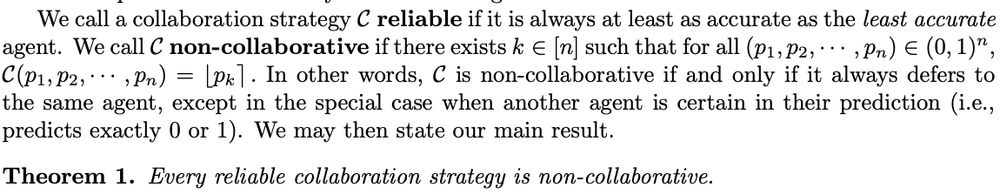

arxiv.org/abs/2411.15230

Poster is in a few hours, come chat!

Wed 11am-2pm | West Ballroom A-D #5505
