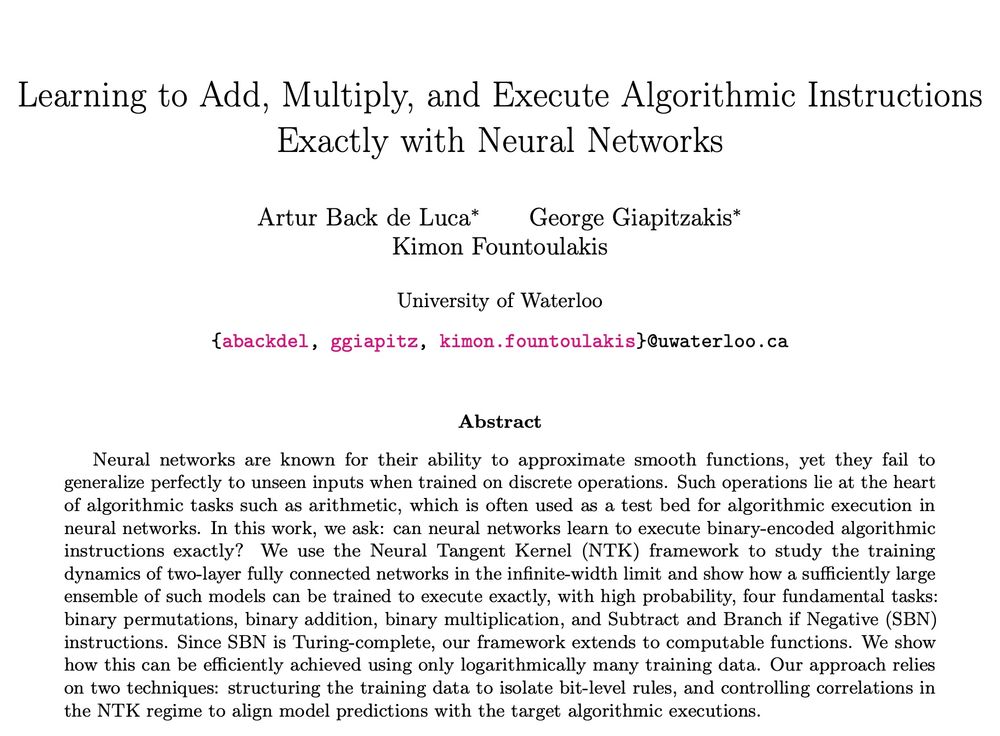

Machine Learning

Lab: opallab.ca

Link to the paper: arxiv.org/abs/2510.04115

I compiled a list of theoretical papers related to the computational capabilities of Transformers, recurrent networks, feedforward networks, and graph neural networks.

Link: github.com/opallab/neur...

Observation made by my student George Giapitzakis.

@jasondeanlee.bsky.social!

We prove a neural scaling law for SGD learning of extensive-width two-layer neural networks.

arxiv.org/abs/2504.19983

🧵below (1/10)

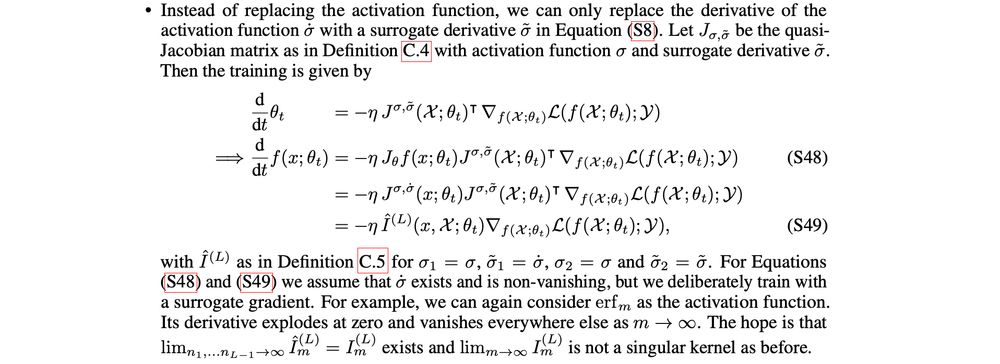

They extend the NTK framework to activation functions that have finitely many jumps.

🏆 This game-changing platform won the Best Paper Award at #CHI2025.

🔗Read more: uwaterloo.ca/computer-sci...

#UWaterloo #AI

arxiv.org/abs/2410.21550

A bit more info on LinkedIn: www.linkedin.com/posts/aleksa...

"Memory Augmented Large Language Models are Computationally Universal", Dale Schuurmans

link: arxiv.org/abs/2301.04589

@alexdimakis.bsky.social @manoliskellis.bsky.social

www.greeksin.ai

Link: dspacemainprd01.lib.uwaterloo.ca/server/api/c...

Relevant papers:

1) Local Graph Clustering with Noisy Labels (ICLR 2024)

Link: arxiv.org/abs/2502.16763

1. Graph neural networks extrapolate out-of-distribution for shortest paths. arxiv.org/abs/2503.19173

2. Round and Round We Go! What makes Rotary Positional Encodings useful? ICLR 2025. openreview.net/forum?id=Gtv...

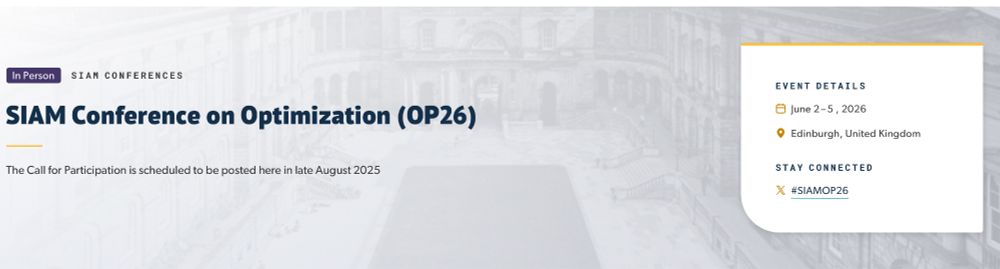

He will lecture on LLMs as GNNs – a topic which received quite some attention at our last session.

Specifically, we will learn how Graph ML tools can help us understand LLM generalisation.
