Learn more & apply: tinyurl.com/use-inspired....

No expert traces. No test-time hacks.

Just: Self-explanation + RL-style training

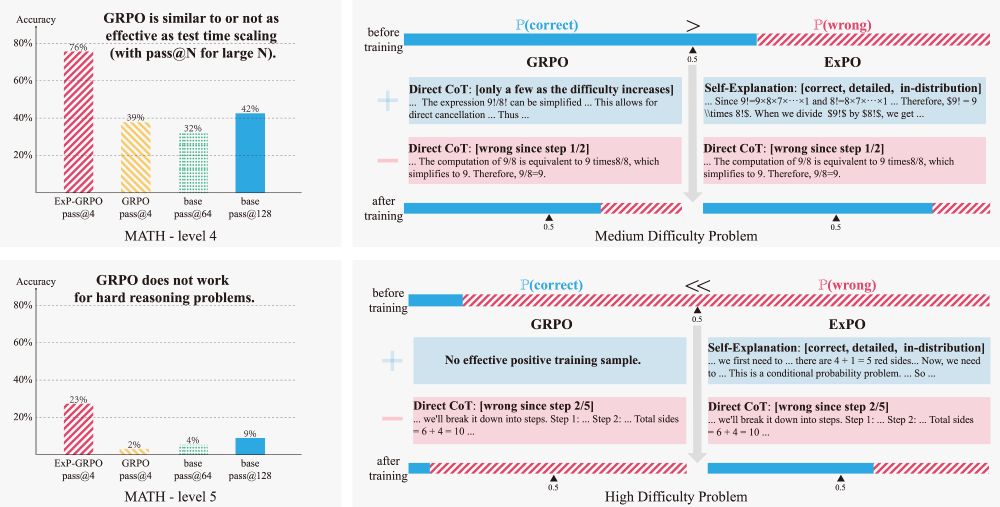

Result? Accuracy on MATH level-5 jumped from 2% → 23%.

Our new paper introduces the Linear Representation Transferability Hypothesis. We find that the internal representations of different-sized models can be translated into one another using a simple linear (affine) map.
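
To make "a simple linear (affine) map" concrete, here is a minimal sketch of fitting one by least squares. It is not the paper's procedure: the data is synthetic, and the names `fit_affine_map`, `apply_affine_map`, `small_reps`, and `large_reps` are hypothetical stand-ins for hidden states collected from a smaller and a larger model on the same inputs.

```python
import numpy as np


def fit_affine_map(src, dst):
    """Least-squares fit of dst ≈ src @ W + b (illustrative helper, not from the paper)."""
    n = src.shape[0]
    # Append a column of ones so the bias b is learned jointly with W.
    src_aug = np.hstack([src, np.ones((n, 1))])
    coef, *_ = np.linalg.lstsq(src_aug, dst, rcond=None)
    W, b = coef[:-1], coef[-1]
    return W, b


def apply_affine_map(src, W, b):
    """Translate source-space representations into the target space."""
    return src @ W + b


# Synthetic stand-ins for real hidden states (assumption: real activations
# would be extracted from two models run on the same inputs).
rng = np.random.default_rng(0)
n, d_small, d_large = 200, 8, 16
small_reps = rng.normal(size=(n, d_small))
true_W = rng.normal(size=(d_small, d_large))
true_b = rng.normal(size=d_large)
noise = 0.01 * rng.normal(size=(n, d_large))
large_reps = small_reps @ true_W + true_b + noise

W, b = fit_affine_map(small_reps, large_reps)
pred = apply_affine_map(small_reps, W, b)

# Relative reconstruction error: small when an affine map explains the target space.
rel_err = np.linalg.norm(pred - large_reps) / np.linalg.norm(large_reps)
print(f"relative error: {rel_err:.4f}")
```

On real activations, a low relative error on held-out inputs would be the signal that the two representation spaces are related by an affine map.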

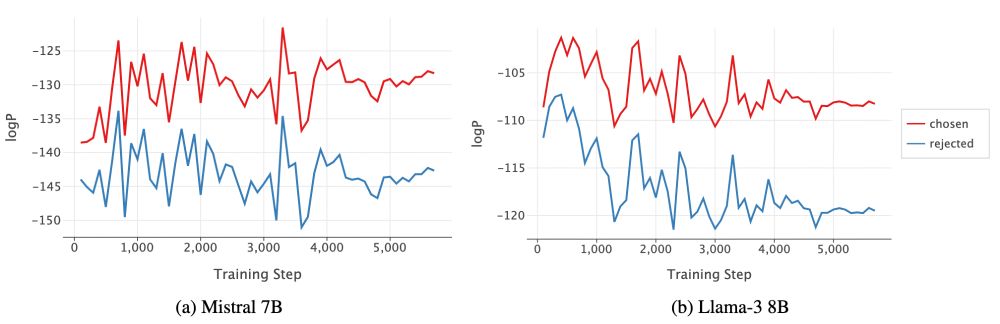

Our answer: Gradient Entanglement!

arxiv.org/abs/2410.13828

Our Personalized-RLHF work:

- lightweight user model

- personalize all *PO alignment algorithms

- strong performance on the largest personalized preference dataset

arxiv.org/abs/2402.05133

Learn more & apply: t.co/OPrxO3yMhf
