Also a linguistics enthusiast.

morrisalp.github.io

Meet ConlangCrafter - a pipeline for creating novel languages with LLMs.

A Japanese-Esperanto creole? An alien cephalopod color-based language?

Enter your idea and see a conlang emerge. 🧵👇

tau-vailab.github.io/ProtoSnap/

h/t Rachel Mikulinsky @ShGordin @ElorHadar and all collaborators.

🧵👇

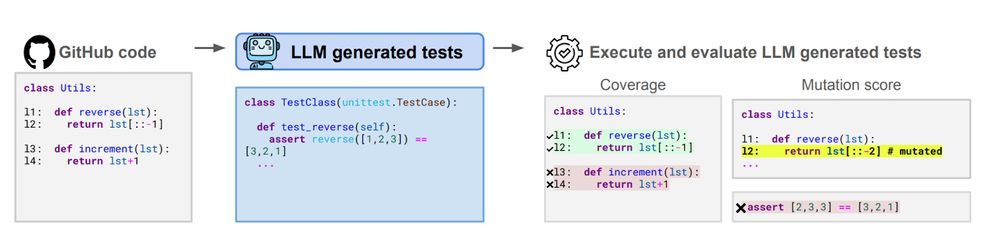

Preprint: arxiv.org/abs/2410.00752

Leaderboard: testgeneval.github.io/leaderboard....

Work with Keren Ganon, Rachel Mikulinsky, Hadar Elor.

More info below👇

suno.com/song/1e21b93...

This one's got everything! Poetry! Grammar! Love songs! Queer communities! Unicode! and ... Y.R. Chao!

What more could you want?

#langsky 🀄️📚

1/

pubs.aip.org/asa/jasa/art...

Gene Chou, Kai Zhang, Sai Bi, Hao Tan, Zexiang Xu, Fujun Luan, Bharath Hariharan, @snavely.bsky.social

tl;dr: multiview CroCo meets diffusion (DiT). Better than luma?

arxiv.org/abs/2411.13549

Hana Bezalel, Dotan Ankri, Ruojin Cai, Hadar Averbuch-Elor

tl;dr: MegaDepth/Scenes subset with small/large/no overlap image pairs, the task is relative rotation (R) prediction

arxiv.org/abs/2411.07096

CLIP shows emergent understanding of *visual-semantic hierarchies*. Our benchmark *HierarCaps* gives GT to measure and further align this!

Project page: hierarcaps.github.io

Paper: arxiv.org/abs/2407.08521
