📍Germany 🔗 https://markboss.me

Join us in Honolulu for the Instance-Level Recognition and Generation Workshop at #ICCV2025 🏝

🗓️ Oct 19, 8:30am–12:30pm 📍 Room 306 A

We’ll have amazing keynotes, plus oral and poster sessions featuring accepted and invited papers.

Don’t miss it!

ilr-workshop.github.io/ICCVW2025/

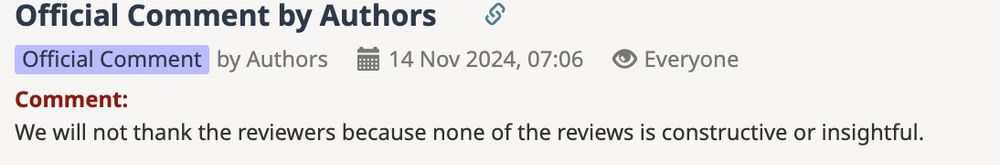

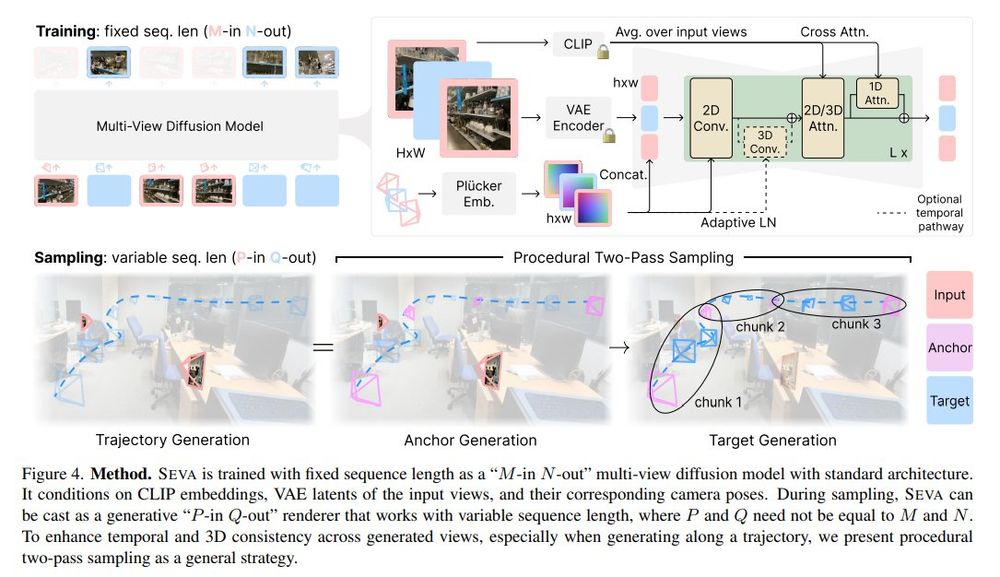

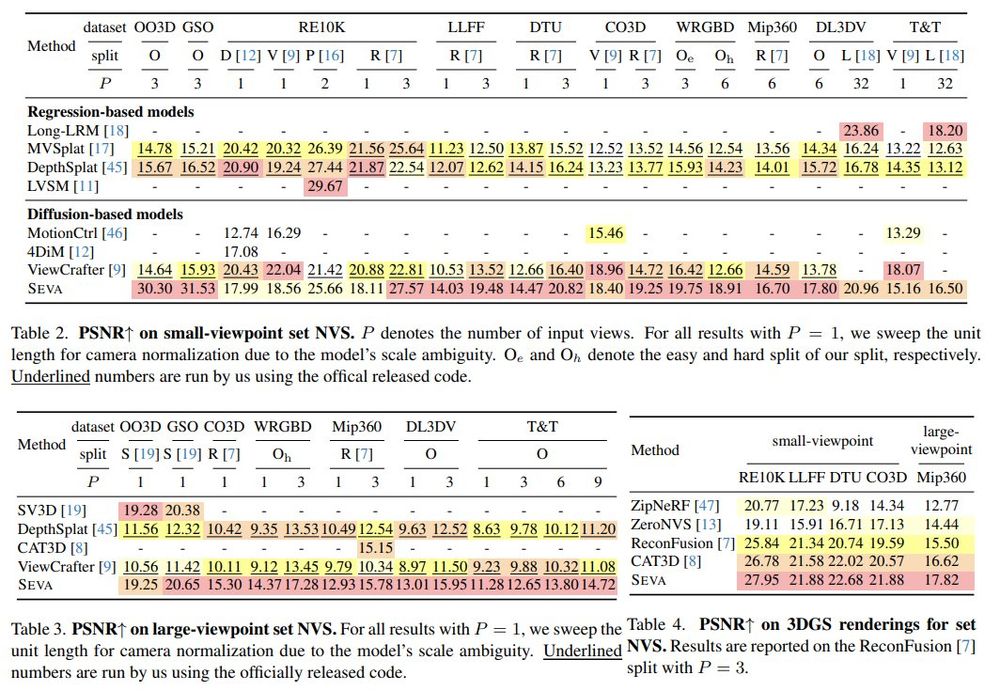

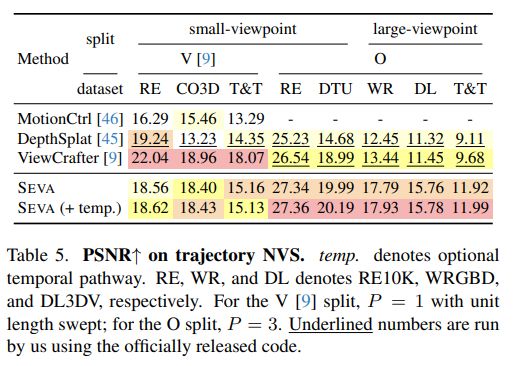

Project page: reservoirswd.github.io

Jensen (Jinghao) Zhou, Hang Gao, Vikram Voleti, @adyaman.bsky.social, Chun-Han Yao, @markboss.bsky.social, @philiptorr.bsky.social, Christian Rupprecht, Varun Jampani

arxiv.org/abs/2503.14489

stability.ai/news/stable-...
