Used by @ai2.bsky.social for OLMo-2 32B 🔥

New results show ~70% speedups for LLM + RL math and reasoning 🧠

🧵below or hear my DLCT talk online on March 28!

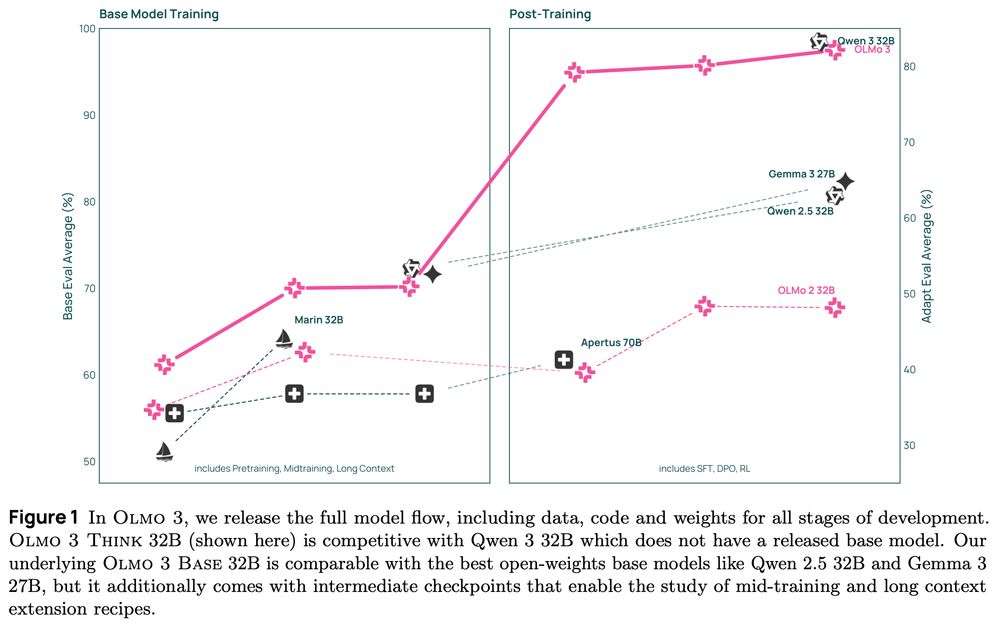

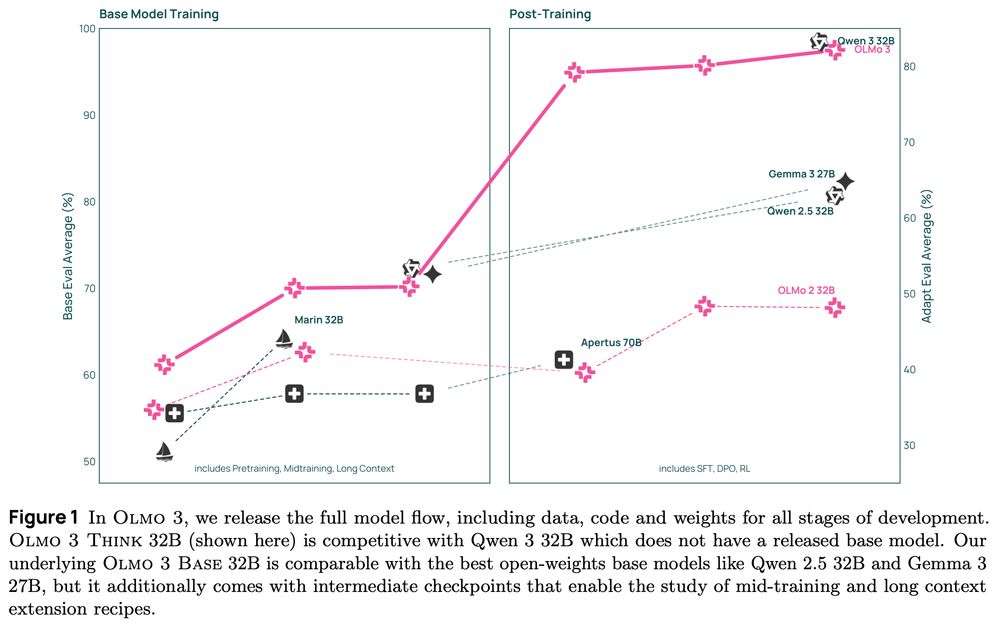

Everyone is finetuning with Qwen, but it's hard to know whether your eval is contaminated and skewing your RLVR results. Olmo 3 has a solution.

This family of 7B and 32B models represents:

1. The best 32B base model.

2. The best 7B Western thinking & instruct models.

3. The first 32B (or larger) fully open reasoning model.

Multi-agent reinforcement learning (MARL) often assumes that agents know when other agents are cooperating with them. For humans, this isn't always the case. For example, Plains Indigenous groups used to leave resources for others to use at effigies called Manitokan.

1/8

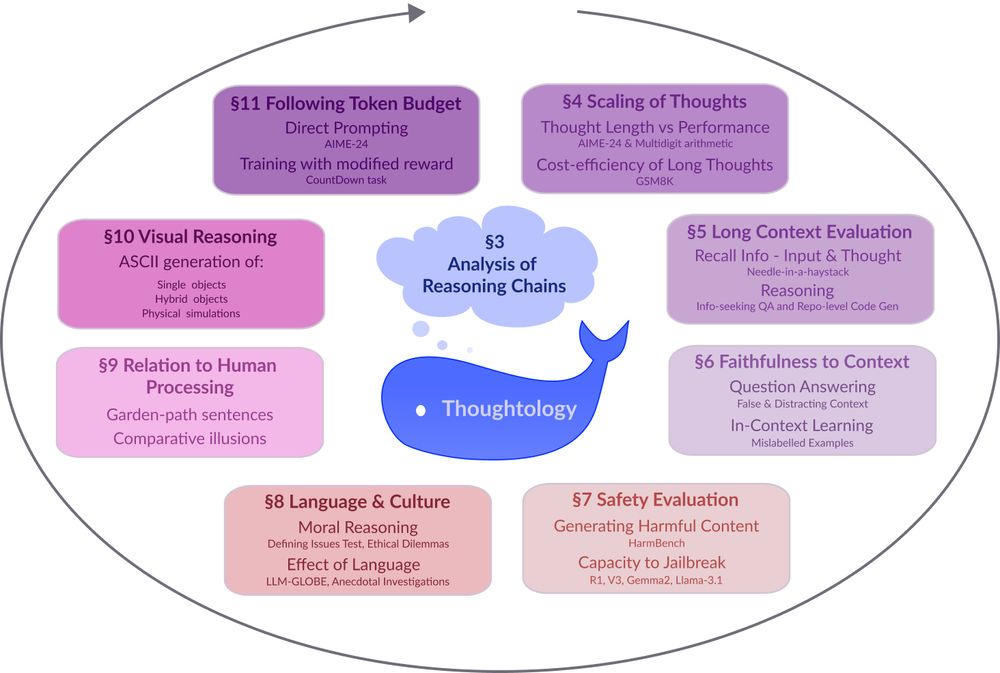

🔗: mcgill-nlp.github.io/thoughtology/