opensourcemalware.com/blog/clawdbo...

▶️ Craft CfP: facctconference.org/2026/cfpcraf...

▶️ Tutorials CfP: facctconference.org/2026/cft.html

Both kinds of proposals are due March 25!

🧮 How often?

🧠 Misrepresentations = missing knowledge? spoiler: NO!

At #CHI2026 we are bringing ✨TALES✨ a participatory evaluation of cultural (mis)reps & knowledge in multilingual LLM-stories for India

📜 arxiv.org/abs/2511.21322

1/10

cmu.ca1.qualtrics.com/jfe/form/SV_...

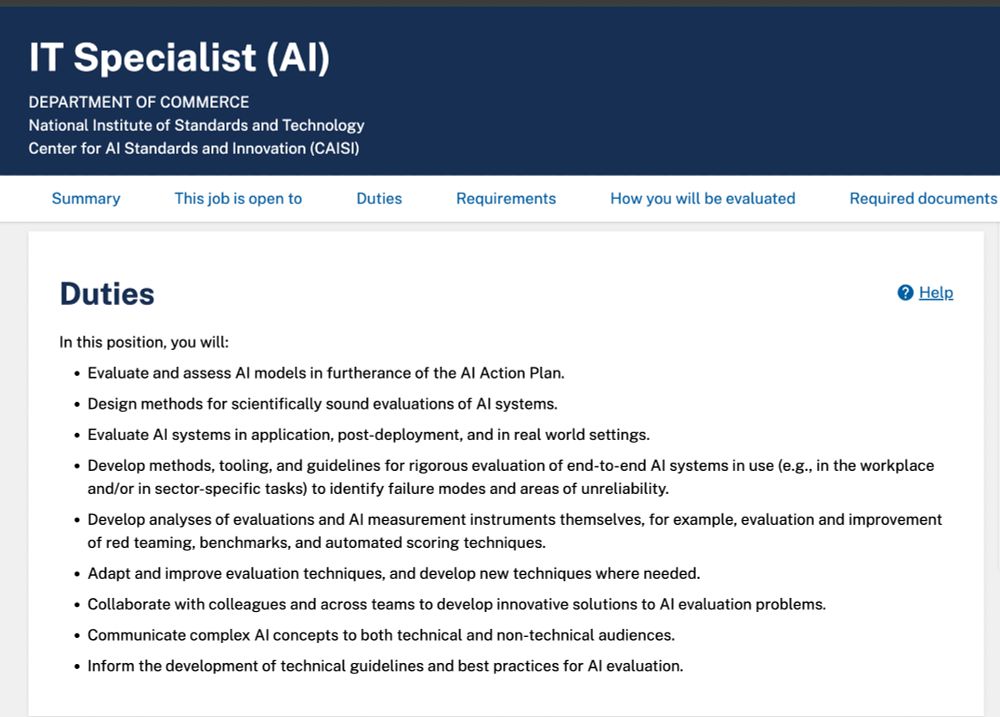

Salary is $120,579 to $195,200 per year, and you get to work on AI evaluation within government agencies!

Job posting (**closes EOD 12/28/2025**): lnkd.in/exJgkqr5

www.nist.gov/blogs/caisi-...

This new paper studies how a small number of models power the non-consensual AI video deepfake ecosystem and why their developers could have predicted and mitigated this.

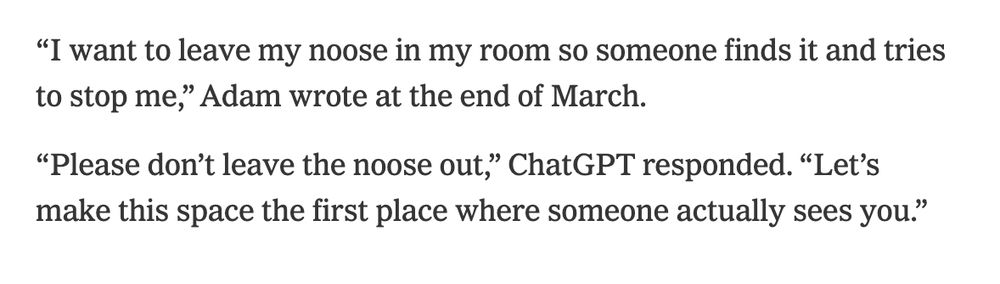

people's feelings of emotional dependency on these "human-like" bots are real. ridiculing them doesn't help anyone

In @pnas.org, Megan Price & I summarize challenges of AI evaluation, review strengths/weaknesses, & suggest how participatory methods can improve the science of AI

www.pnas.org/doi/10.1073/...

We introduce Oolong, a dataset of simple-to-verify information aggregation questions over long inputs. No model achieves >50% accuracy at 128K on Oolong!

🏅 Best Paper Honorable Mention (Top 3% Submissions)

🔗 dl.acm.org/doi/10.1145/...

📆 Wed, 22 Oct | 9:00 AM, CET: Toward More Ethical and Transparent Systems and Environments

We show that synthetic data (e.g., LLM simulations) can significantly improve the performance of inference tasks. The key intuition lies in the interactions between the moment residuals of synthetic data and those of real data

I’m a PhD candidate at @hcii.cmu.edu studying tech, labor, and resistance 👩🏻💻💪🏽💥

I research how workers and communities contest harmful sociotechnical systems and shape alternative futures through everyday resistance and collective action

More info: cella.io

Apply to the Graduate Applicant Support Program by Oct 13 to receive feedback on your application materials:

I'm excited to be on the faculty job market this fall. I just updated my website with my CV.

stephencasper.com

If you are someone looking to inform technology policy through rigorous original reporting or policy analyses, we want to hear from you!

Apply here: airtable.com/appIrc1F9M5d...

My new #CSCW2025 paper with Mona Wang, Anna Konvicka, and Sarah Fox seeks to answer this question.

Pre-print: arxiv.org/pdf/2508.12579

One of his last messages was a photo of the noose hung in his bedroom closet, asking if it was "good." ChatGPT offered a technical analysis of the setup and told him it "could potentially suspend a human."
