Working on the representations of LMs and pretraining methods

https://nathangodey.github.io

We trained 3 models - 1.5B, 8B, 24B - from scratch on 2-4T tokens of custom data

(TLDR: we cheat and get good scores)

@wissamantoun.bsky.social @rachelbawden.bsky.social @bensagot.bsky.social @zehavoc.bsky.social

You can read his PhD thesis online here: hal.science/tel-04994414/

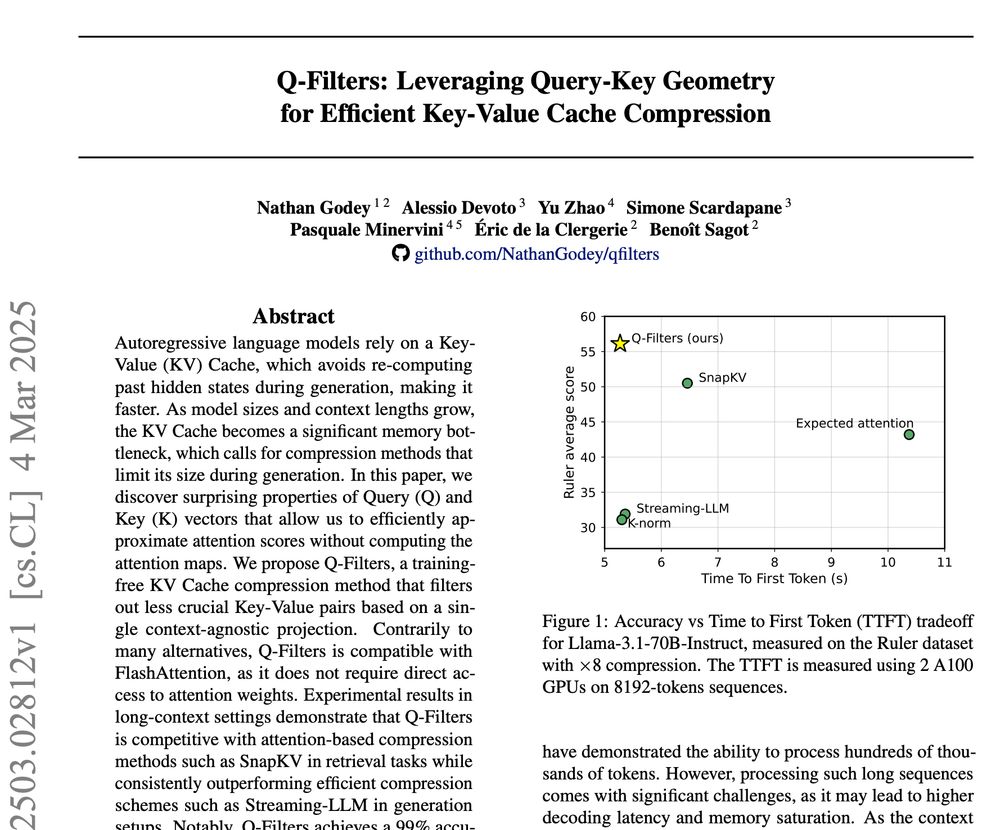

We introduce Q-Filters, a training-free method for efficient KV Cache compression!

It is compatible with FlashAttention and can compress the cache during generation, which is particularly useful for reasoning models ⚡

TLDR: we make Streaming-LLM smarter using the geometry of attention
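
For context, the Streaming-LLM baseline mentioned above is commonly described as keeping a few initial "attention sink" tokens plus a window of the most recent tokens in the KV cache. Here is a minimal sketch of that eviction policy only. It is not Q-Filters itself, and the function name, parameter names, and default values are hypothetical, chosen for illustration:

```python
def streaming_keep_indices(seq_len, n_sink=4, window=8):
    """Return the cache positions kept by a sink + recent-window policy.

    Assumption: positions 0..n_sink-1 act as "attention sinks" and the last
    `window` positions form the recent context; everything between is evicted.
    """
    # Nothing to evict while the cache still fits in the budget.
    if seq_len <= n_sink + window:
        return list(range(seq_len))
    sinks = list(range(n_sink))                      # first tokens, always kept
    recent = list(range(seq_len - window, seq_len))  # sliding window of recent tokens
    return sinks + recent


# Example: 20 cached tokens, keep 2 sinks and the 3 most recent positions.
kept = streaming_keep_indices(20, n_sink=2, window=3)
print(kept)
```

This policy picks positions without looking at the content of the keys, which is the part the post says Q-Filters makes "smarter".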