Scientist | Statistician | Bayesian | Author of brms | Member of the Stan and BayesFlow development teams

Website: https://paulbuerkner.com

Opinions are my own

Huge thanks to:

• My supervisors @paulbuerkner.com @stefanradev.bsky.social @avehtari.bsky.social 👥

• The committee @ststaab.bsky.social @mniepert.bsky.social 📝

• The institutions @ellis.eu @unistuttgart.bsky.social @aalto.fi 🏫

• My wonderful collaborators 🧡

#PhDone 🎓

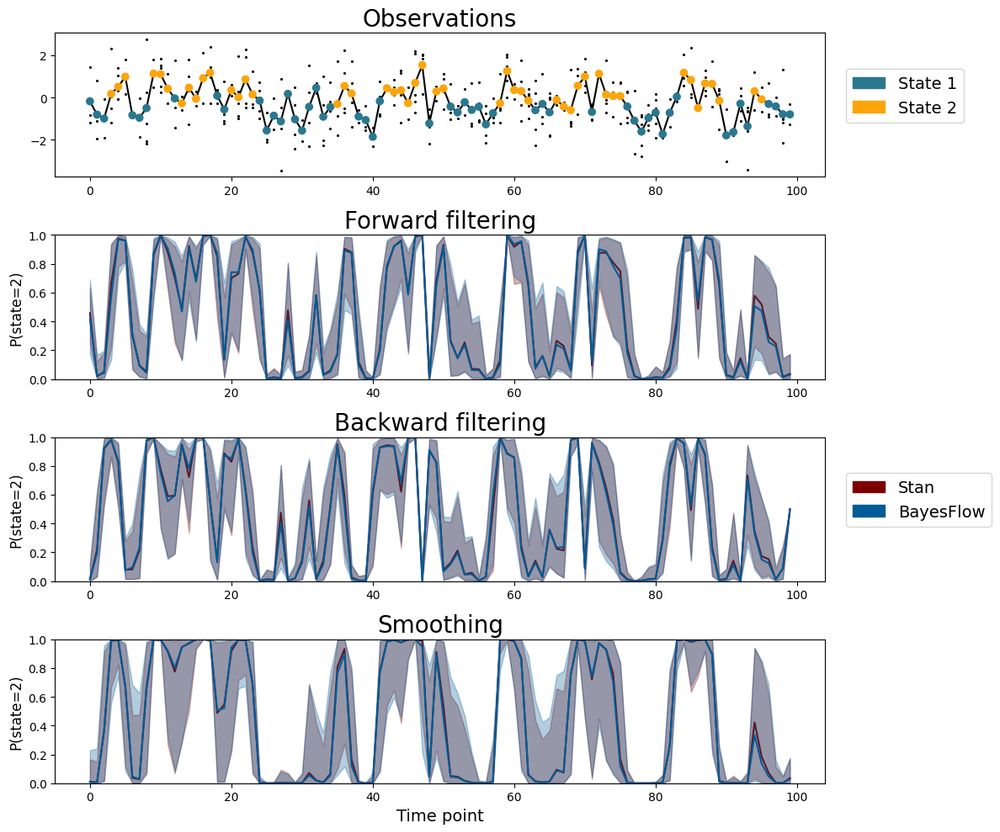

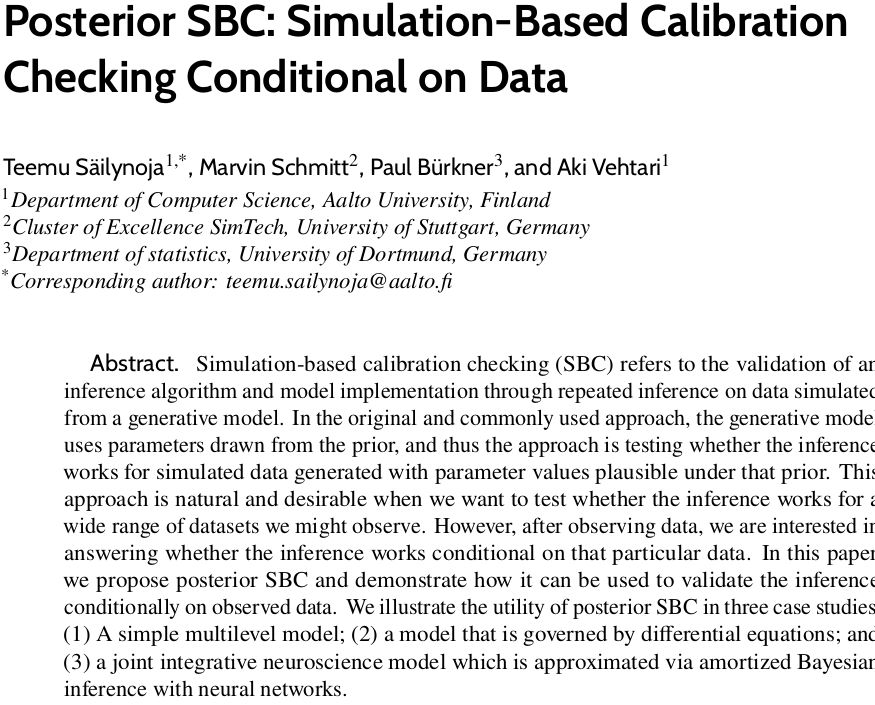

The amortized approximator from BayesFlow closely matches the results of expensive-but-trustworthy HMC with Stan.

Check out the preprint and code by @kucharssim.bsky.social and @paulbuerkner.com 👇

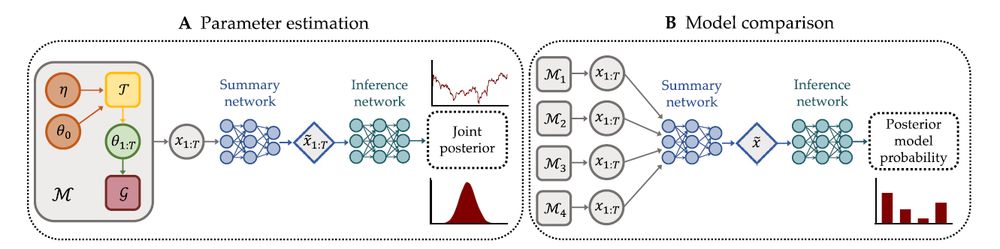

BayesFlow allows:

• Approximating the joint posterior of model parameters and mixture indicators

• Inferences for independent and dependent mixtures

• Amortization for fast and accurate estimation

📄 Preprint

💻 Code

arxiv.org/abs/2502.03279 1/7

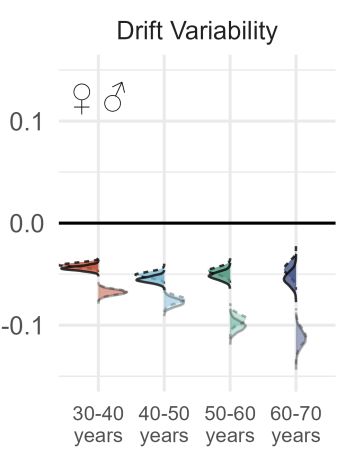

BayesFlow facilitated efficient inference for complex decision-making models, scaling Bayesian workflows to big data.

🔗Paper

Sign up to the seminar’s mailing list below to get the meeting link 👇

Including my alma mater, the University of Münster.

HT @thereallorenzmeyer.bsky.social nachrichten.idw-online.de/2025/01/10/h...

- checking the long tails (few long RTs make the tail estimation unwieldy)

- low initial values for ndt (non-decision time)

- careful prior checks

- pathfinder estimation of initial values

Still, with increasing data, chains get stuck.

It’s not a part of the process that can be skipped; it’s the entire point.

2️⃣ BayesFlow handles amortized parameter estimation in the SBI setting.

📣 Shoutout to @masonyoungblood.bsky.social & @sampassmore.bsky.social

📄 Preprint: osf.io/preprints/ps...

💻 Code: github.com/masonyoungbl...

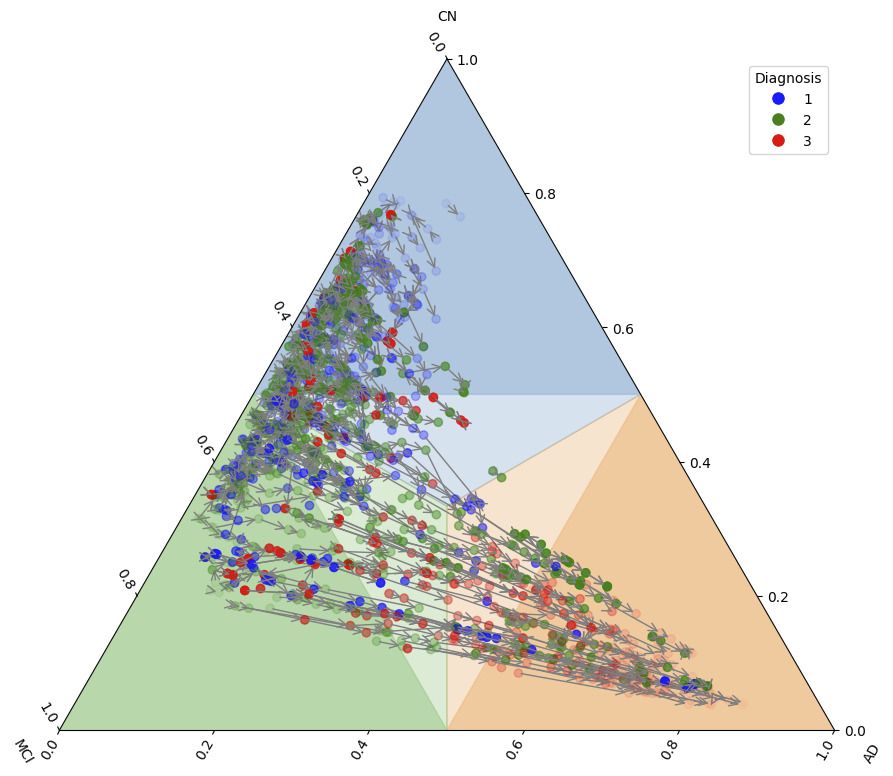

⋅ Joint estimation of stationary and time-varying parameters

⋅ Amortized parameter inference and model comparison

⋅ Multi-horizon predictions and leave-future-out CV

📄 Paper 1

📄 Paper 2

💻 BayesFlow Code
