🕸️ Website https://rerun.io/

⭐ GitHub http://github.com/rerun-io/rerun

👾 Discord http://discord.gg/ZqaWgHZ2p7

After a refactoring, my entire egocentric/exocentric pipeline is now modular. One codebase handles different sensor layouts and outputs a unified, multimodal timeseries file that you can open in Rerun.

Lead: pointnine.com

With: costanoa.vc, Sunflower Capital, @seedcamp.com

Angels including: @rauchg.blue, Eric Jang, Oliver Cameron, @wesmckinney.com, Nicolas Dessaigne, Arnav Bimbhet

Thesis: rerun.io/blog/physica...

Lerobot to understand better how I can get a real robot to work on my custom dataset. Using @rerun.io to visualize

code: github.com/rerun-io/pi0...

The release brings long-requested entity filtering for finding data faster in the Viewer, significantly simplified APIs for partial & columnar updates, and many other enhancements.

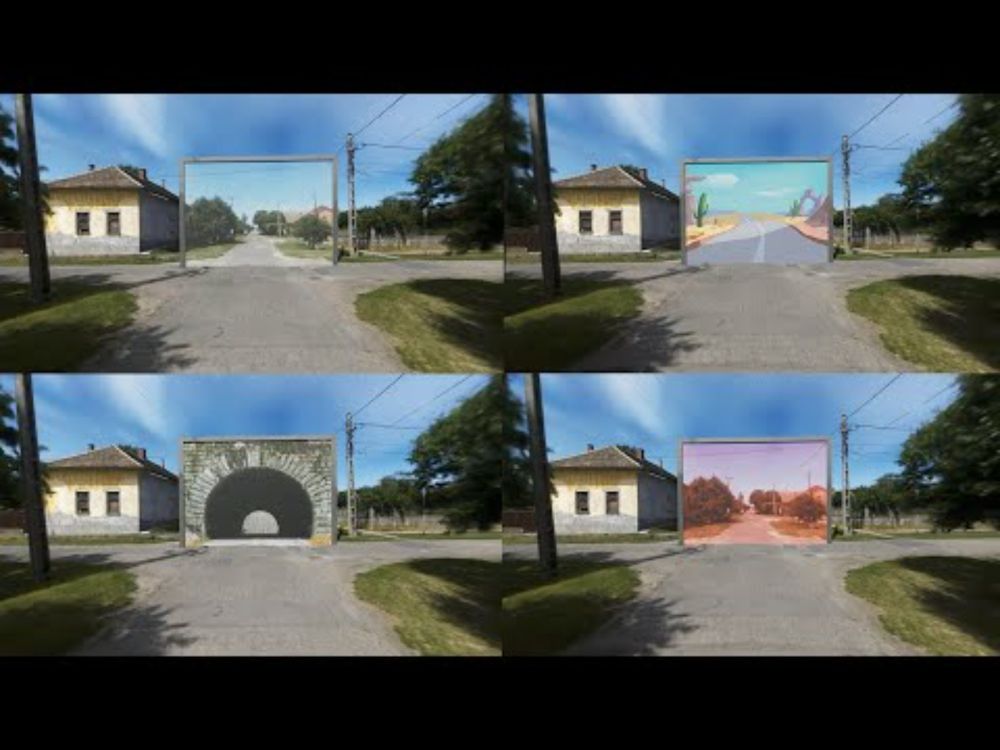

vimeo.com/1054160833

This adds `egui::Scene`: a pannable, zoomable container for other UI elements.

This release also brings frames and corner radius more in line with how CSS and Figma work.

We’ve also improved the crispness of the rendering, and a lot more!

It helps you understand why not all rosbags can be easily recovered when your robot's battery dies 🪫.

rerun.io/blog/rosbag
