Our panel shows how RL, DDMs, active inference, and neural models can unpack symptom heterogeneity across anxiety, psychosis, ADHD, depression, and suicide risk, moving beyond diagnoses to mechanisms.

@acnporg.bsky.social

#ComputationalPsychiatry

Register HERE for the Zoom link 👉 https://docs.google.com/forms/d/e/1FAIpQLSfA-SAlxM8jP0D9V-tu_fMEK_SeFzhN7HrnG9lrBM8V3UInGA/viewform?usp=header

doi.org/10.5334/cpsy...

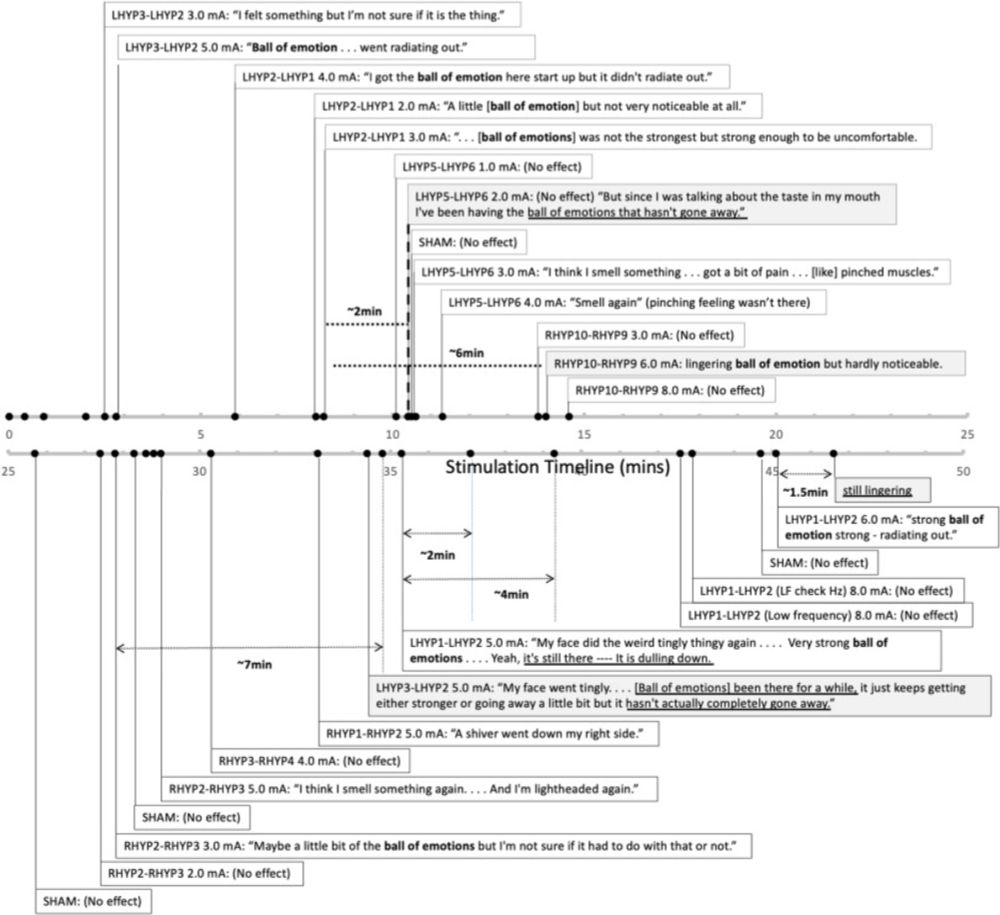

www.brainstimjrnl.com/article/S193...

@torutakahashi.bsky.social @rssmith.bsky.social

www.nature.com/articles/s44...

This paper shows that individuals with methamphetamine use disorder exhibit reduced directed exploration and slower dynamic learning rates in both high- and low-anxiety states.

www.nature.com/articles/s44...

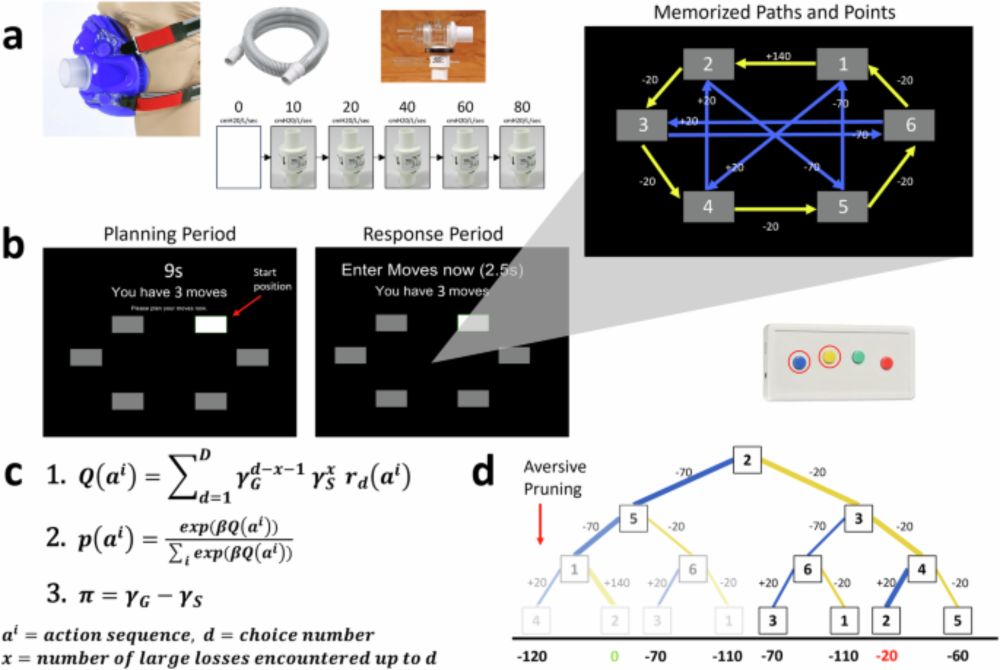

It was particularly nice to see the very robust basic pruning behavioural effect replicate in this version of the task (originally designed for the scanner). It seems that pruning may end up being more relevant to SUD than to MDD, @docqhuys.bsky.social!

In psychopathology, this may be crucial for maintaining social support. We hope others find it useful!

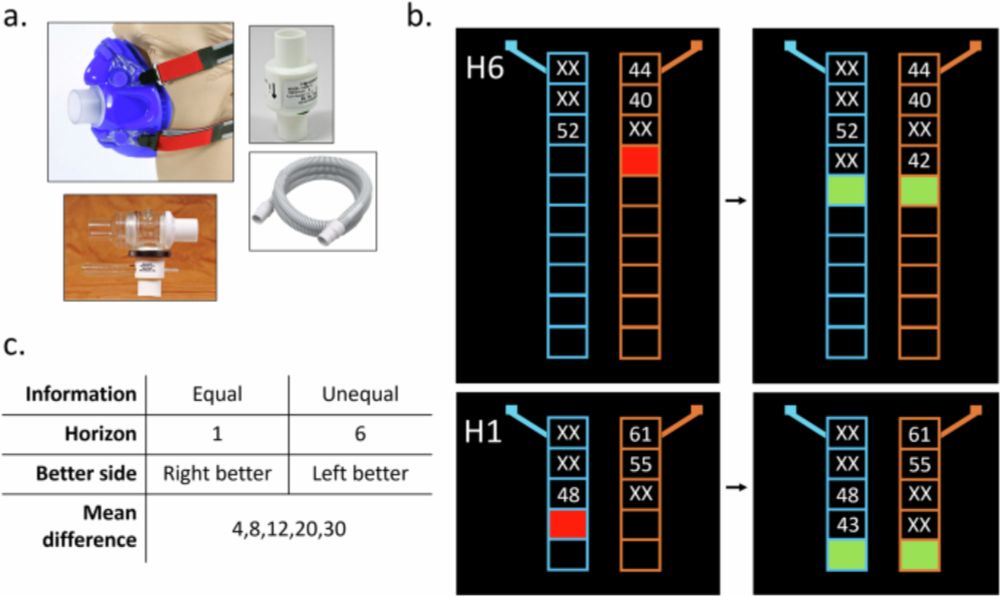

"Directed exploration is reduced by an aversive interoceptive state induction in healthy individuals but not in those with affective disorders" (nature.com/articles/s41...) 🧵👇

"Computational Approaches for Uncovering Interoceptive Mechanisms in Psychiatric Disorders and Their Biological Basis"

"Computational Mechanisms of Approach-Avoidance Conflict Predictively Differentiate Between Affective and Substance Use Disorders"

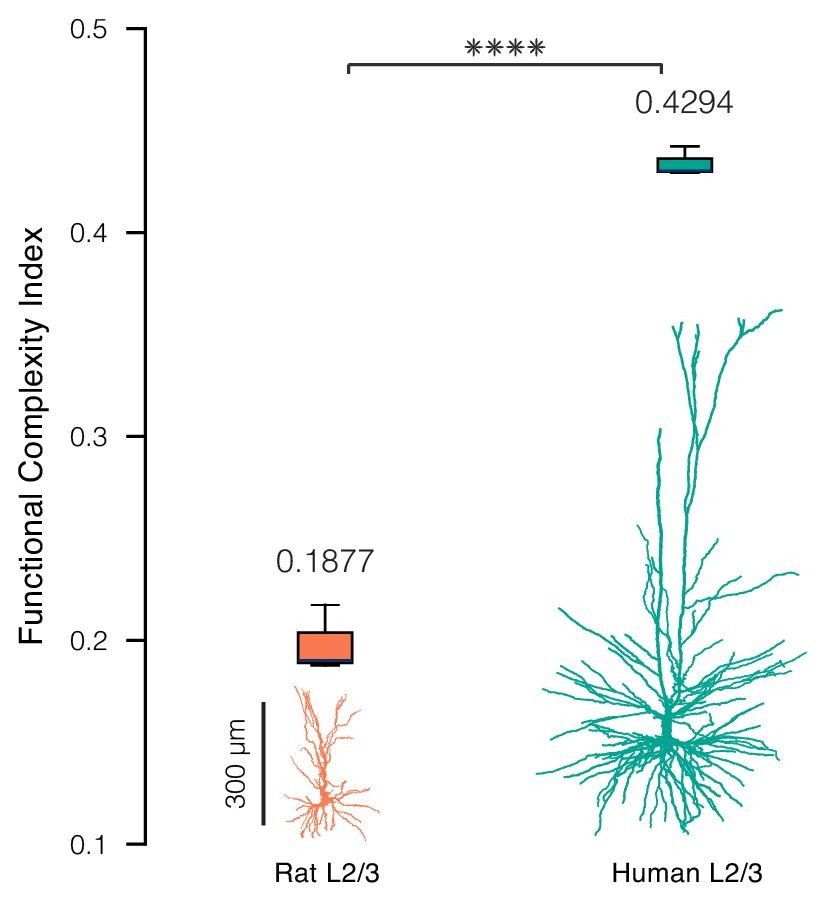

Are they more functionally complex? Could that boost the human brain’s computational power? And is that what makes us human? (1/11)