Lab: https://mintresearch.org

Self: https://sethlazar.org

Newsletter: https://philosophyofcomputing.substack.com

philosophyofcomputing.substack.com

Read them all at buff.ly/zTrRirO. Thanks so much to @knightcolumbia (and especially Katy) for making it happen

Read them all at buff.ly/zTrRirO. Thanks so much to @knightcolumbia (and especially Katy) for making it happen

The paper—written with the visionary Mariano-Florentino Cuéllar—is published here: buff.ly/dMM0r7K

The paper—written with the visionary Mariano-Florentino Cuéllar—is published here: buff.ly/dMM0r7K

Concentration of corporate power? Also check.

Stuffed-up information ecosystem? Yep, that too.

Backsliding as the 'autocratic legalism' playbook gets rolled out in one nation after the next? Agents could be a helpful software Stasi.

Concentration of corporate power? Also check.

Stuffed-up information ecosystem? Yep that too.

Backsliding as the 'autocratic legalism' playbook gets rolled out in one nation after the next? Agents could be a helpful software Stasi.

discuss how AI agents might affect the realization of democratic values. knightcolumbia.org/content/ai-a...

discuss how AI agents might affect the realization of democratic values. knightcolumbia.org/content/ai-a...

AI agents could help or hurt. And they won't protect democratic values on their own.

AI agents could help or hurt. And they won't protect democratic values on their own.

philosophyofcomputing.substack.com/p/normative-...

philosophyofcomputing.substack.com/p/normative-...

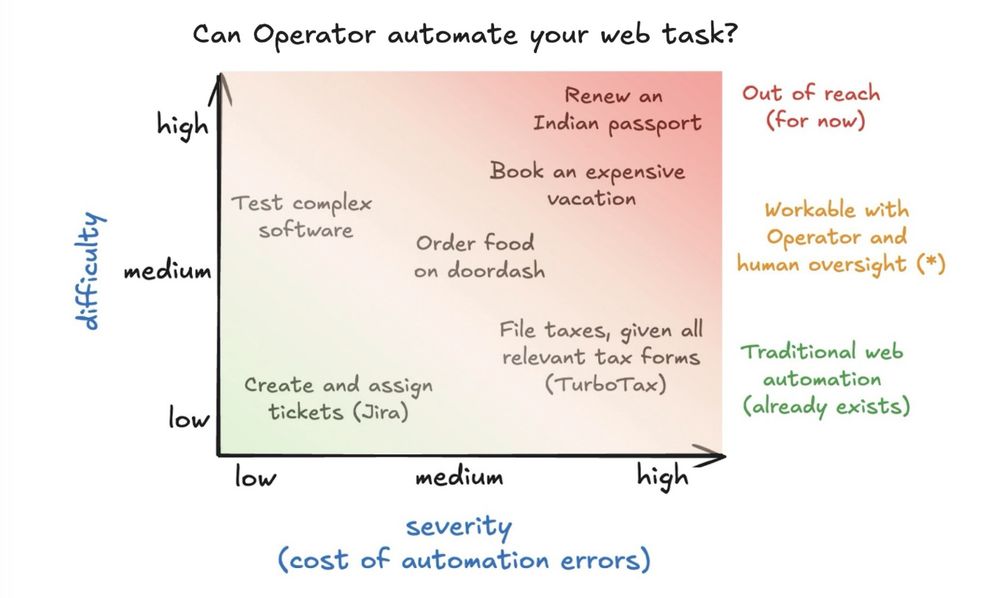

Some insights on what worked, what broke, and why this matters for the future of agents 🧵

Some insights on what worked, what broke, and why this matters for the future of agents 🧵

Looks at what 'agent' means, how LM agents work, what kinds of impacts we should expect, and what norms (and regulations) should govern them.

Looks at what 'agent' means, how LM agents work, what kinds of impacts we should expect, and what norms (and regulations) should govern them.

Trying it out is a lot of fun: simonwillison.net/2024/Dec/24/...

Trying it out is a lot of fun: simonwillison.net/2024/Dec/24/...