Please reach out if you want to meet up and chat! Email is the best way, but DM also works if you must!

quick🧵:

The New England Mechanistic Interpretability (NEMI) Workshop is happening Aug 22nd 2025 at Northeastern University!

A chance for the mech interp community to nerd out on how models really work 🧠🤖

🌐 Info: nemiconf.github.io/summer25/

📝 Register: forms.gle/v4kJCweE3UUH...

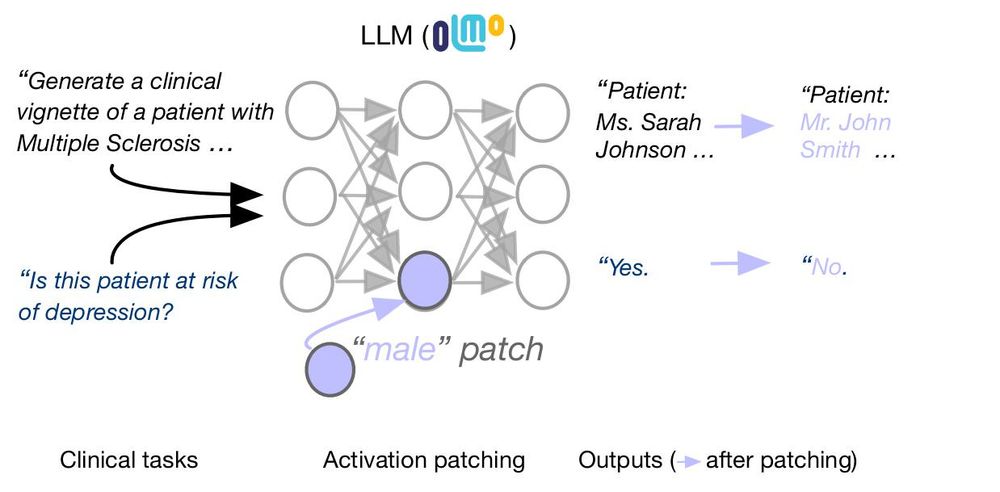

We reverse-engineered how LLaMA-3-70B-Instruct handles a belief-tracking task and found something surprising: it uses mechanisms strikingly similar to pointer variables in C programming!

sfeucht.github.io/syllogisms/

I put together a google form that should take no longer than 10 minutes to complete: forms.gle/oWxsCScW3dJU...

If you can help, I'd appreciate your input! 🙏

Work w/ @arnabsensharma.bsky.social, @silvioamir.bsky.social, @davidbau.bsky.social, @byron.bsky.social

arxiv.org/abs/2502.13319

🚨 #NDIF is opening up more spots in our 405b pilot program! Apply now for a chance to conduct your own groundbreaking experiments on the 405b model. Details: 🧵⬇️
