https://sgk98.github.io/

Huge credit to the Cambrian-S team for tackling one of the hardest open problems in video understanding: spatial supersensing. In our paper, we take a closer look at their benchmarks & methods 👇

We show how to efficiently apply Bayesian learning in VLMs, improve calibration, and do active learning. Cool stuff!

📝 arxiv.org/abs/2412.06014

MOCA ☕ - Predicting Masked Online Codebook Assignments w/ @spyrosgidaris.bsky.social, O. Simeoni, A. Vobecky, @matthieucord.bsky.social, N. Komodakis, @ptrkprz.bsky.social #TMLR #ICLR2025

Grab a ☕ & brace for a story & a 🧵

How far can we go with ImageNet for Text-to-Image generation? w. @arrijitghosh.bsky.social @lucasdegeorge.bsky.social @nicolasdufour.bsky.social @vickykalogeiton.bsky.social

TL;DR: Train a text-to-image model using 1000× less data in 200 GPU hrs!

📜https://arxiv.org/abs/2502.21318

🧵👇

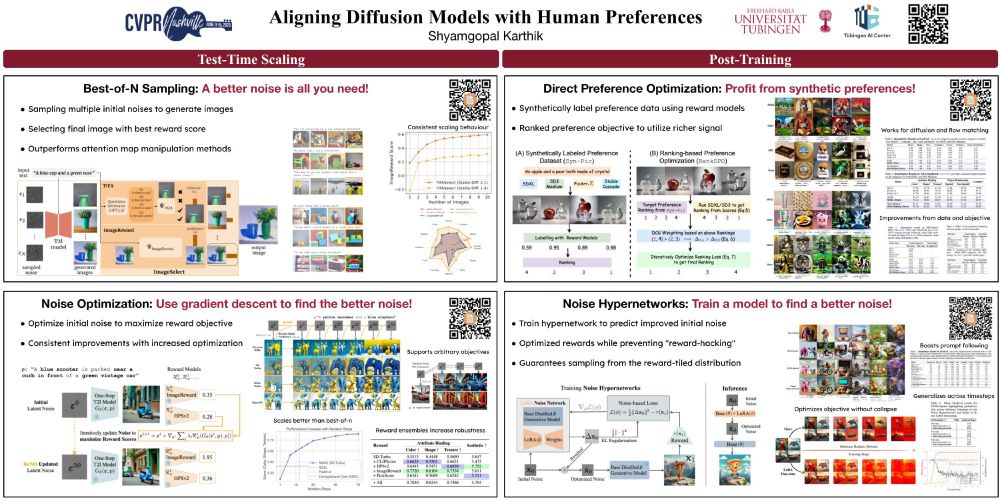

arxiv.org/abs/2502.03349

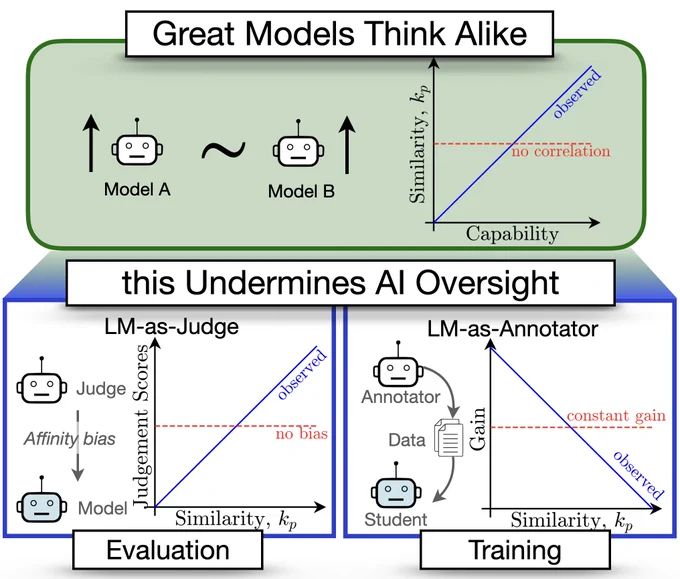

New paper quantifies LM similarity

(1) LLM-as-a-judge favors more similar models🤥

(2) Complementary knowledge benefits Weak-to-Strong Generalization☯️

(3) More capable models have more correlated failures 📈🙀

🧵👇

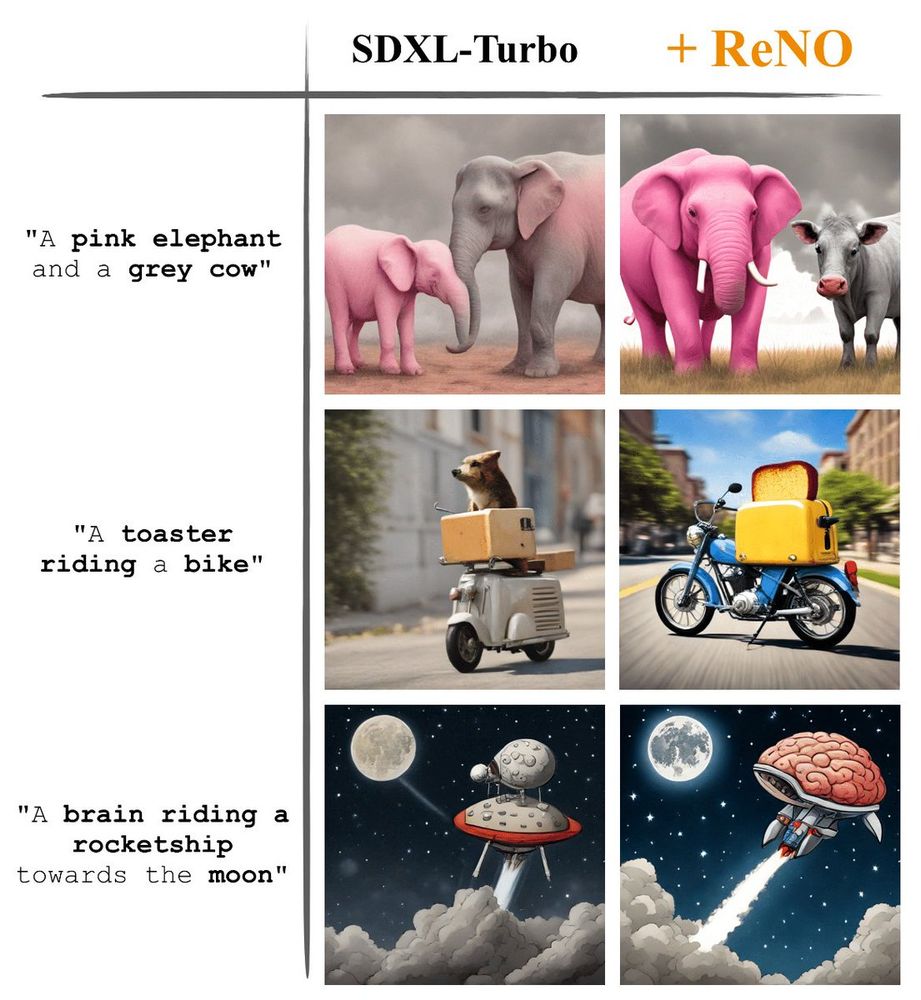

We show that with our ReNO, Reward-based Noise Optimization, one-step models consistently surpass the performance of all current open-source Text-to-Image models within the computational budget of 20-50 sec!

#NeurIPS2024

Head over to HuggingFace and play with this thing. It's quite extraordinary.

🤗: huggingface.co/spaces/fffil...

We are excited to present ReNO at #NeurIPS2024 this week!

Join us tomorrow from 11am-2pm at East Exhibit Hall A-C #1504!

🔗 to extended versions:

1. 🙋 "How can we make predictions in BDL efficiently?" 👉 arxiv.org/abs/2411.18425

2. 🙋 "How can we do prob. active learning in VLMs?" 👉 arxiv.org/abs/2412.06014

Do reach out if you'd like to chat!

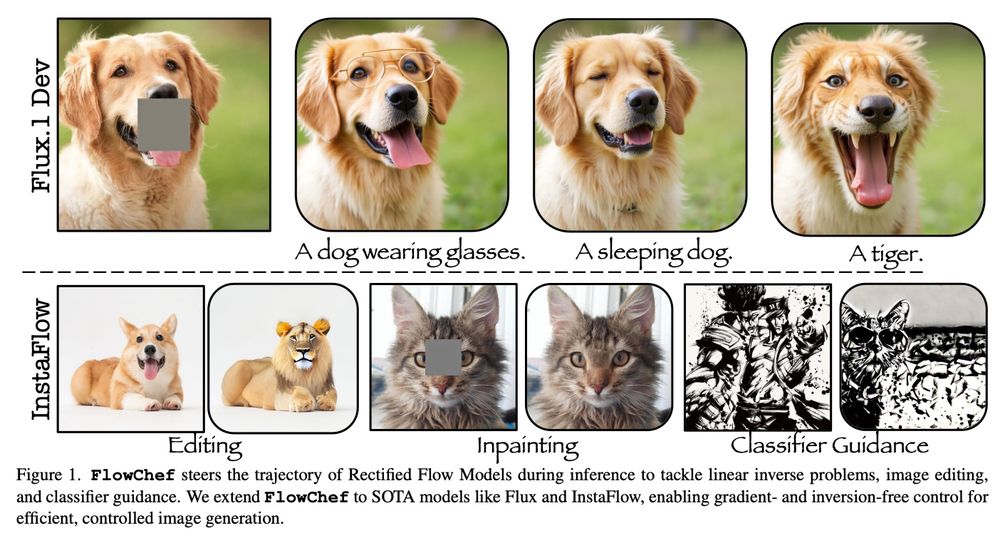

🚀 Introducing FlowChef, "Steering Rectified Flow Models in the Vector Field for Controlled Image Generation"! 🌌✨

- Perform image editing, solve inverse problems, and more.

- Achieved inversion-free, gradient-free, & training-free inference time steering! 🤯

👇👇

Alternative title: why I decided to stop working on tracking.

Curious about others' thoughts on this.

lb.eyer.be/s/cv-ethics....

Turns out you can, and here is how: arxiv.org/abs/2411.15099

Really excited to share this work on multimodal pretraining for my first Bluesky entry!

🧵 A short and hopefully informative thread:

HF: huggingface.co/Lightricks/L...

Gradio: huggingface.co/spaces/Light...

Github: github.com/Lightricks/L...

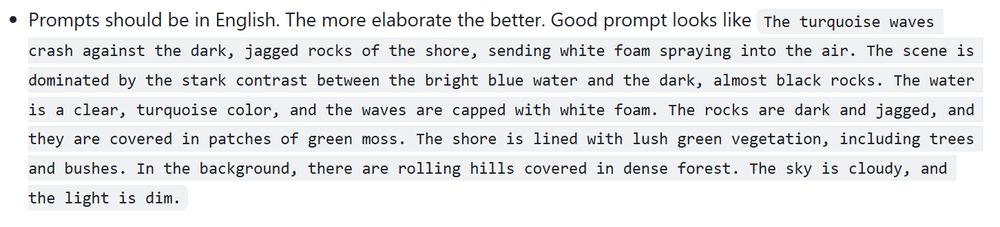

Look at that prompt example though. Need to be a proper writer to get that quality.

- use a video stream to learn a predictive model

- everything is in pixel space

- update the model less frequently and don't use a momentum optimizer

- pretraining on IID data improves performance

- continual learning for robots

arxiv.org/html/2312.00...

go.bsky.app/NFbVzrA
