Working on camera pose estimation

thibautloiseau.github.io

@gbourmaud.bsky.social @vincentlepetit.bsky.social

It outperforms CLIP-like models (SigLIP 2, finetuned StreetCLIP)… and that’s shocking 🤯

Why? CLIP models have a built-in advantage: they literally learn place names paired with images. DINOv3 doesn’t.

The model looks really promising, even though it's just 256px for now.

Check it out:

📄 Paper: arxiv.org/abs/2504.00072

🔗 Project: imagine.enpc.fr/~lucas.ventu...

💻 Code: github.com/lucas-ventur...

🤗 Demo: huggingface.co/spaces/lucas...

Registration is open (it's free) with priority given to authors of accepted papers: cvprinparis.github.io/CVPR2025InPa...

Big 🧵👇 with details!

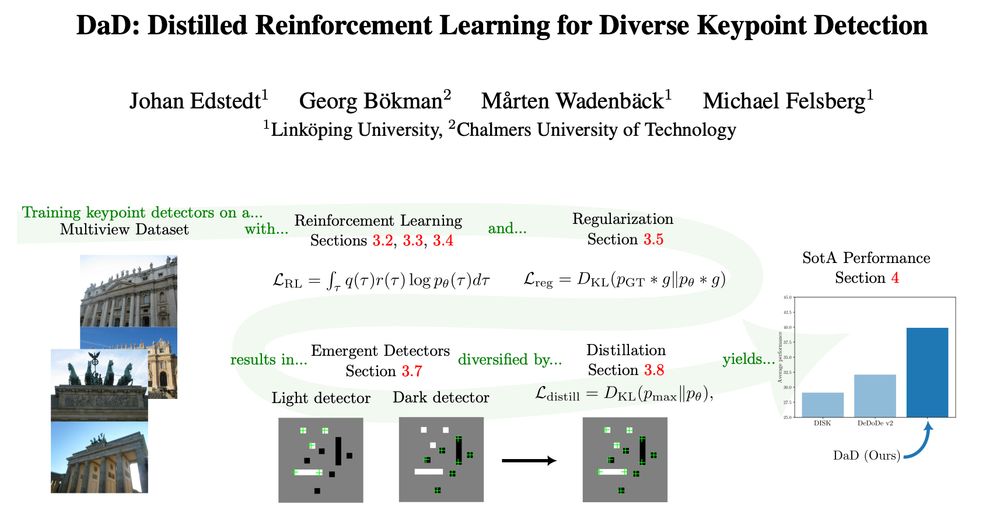

As this will get pretty long, this will be two threads.

The first covers the RL part; the second covers emergence and distillation.

@thibautloiseau.bsky.social, Guillaume Bourmaud, @vincentlepetit.bsky.social

tl;dr: CroCo-based; each pixel in the 1st image -> co-visible, occluded, or outside the FOV in the 2nd image

arxiv.org/abs/2503.07561
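
To make the three labels in the tl;dr concrete, here is a minimal geometric sketch. It is not the paper's code: it assumes a known depth for the pixel in image 1, a depth map for image 2, shared pinhole intrinsics, and a known relative pose, and the function name `classify_pixel` and the tolerance `tol` are hypothetical.

```python
from typing import Sequence, Tuple

def classify_pixel(
    u: float, v: float, depth1: float,
    K: Tuple[float, float, float, float],   # (fx, fy, cx, cy), assumed shared by both cameras
    R: Sequence[Sequence[float]],           # 3x3 rotation, camera 1 -> camera 2
    t: Sequence[float],                     # translation, camera 1 -> camera 2
    depth2: Sequence[Sequence[float]],      # depth map of image 2, indexed [row][col]
    tol: float = 0.05,                      # hypothetical relative depth tolerance
) -> str:
    """Label pixel (u, v) of image 1 as 'covisible', 'occluded' or 'outside_fov' in image 2."""
    fx, fy, cx, cy = K
    height, width = len(depth2), len(depth2[0])

    # Back-project the pixel to a 3D point in the camera-1 frame.
    p1 = ((u - cx) / fx * depth1, (v - cy) / fy * depth1, depth1)

    # Move the point into the camera-2 frame: p2 = R @ p1 + t.
    x2, y2, z2 = (sum(R[i][j] * p1[j] for j in range(3)) + t[i] for i in range(3))

    # Points behind camera 2 can never land inside its image.
    if z2 <= 0:
        return "outside_fov"

    # Project into image 2 and check the image bounds.
    col = int(round(fx * x2 / z2 + cx))
    row = int(round(fy * y2 / z2 + cy))
    if not (0 <= col < width and 0 <= row < height):
        return "outside_fov"

    # If image 2 observes a clearly closer surface there, the point is hidden.
    if depth2[row][col] < z2 * (1.0 - tol):
        return "occluded"
    return "covisible"
```

With an identity pose and matching depths the pixel comes out co-visible; a closer surface in image 2 makes it occluded, and a large sideways translation pushes it outside the FOV.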

We have released the models from our latest paper "How far can we go with ImageNet for text-to-image generation?"

Check out the models on HuggingFace:

🤗 huggingface.co/Lucasdegeorg...

📜 arxiv.org/abs/2502.21318

How far can we go with ImageNet for Text-to-Image generation? w. @arrijitghosh.bsky.social @lucasdegeorge.bsky.social @nicolasdufour.bsky.social @vickykalogeiton.bsky.social

TL;DR: Train a text-to-image model using 1000× less data in 200 GPU hrs!

📜https://arxiv.org/abs/2502.21318

🧵👇

Introducing AnySat: one model for any resolution (0.2m–250m), scale (0.3–2600 hectares), and modalities (choose from 11 sensors & time series)!

Try it with just a few lines of code:

These are 6-month projects that typically correspond to the end-of-study project in the French curriculum.

More offers will probably come, so check back regularly.
