gershmanlab.com/textbook.html

It's a textbook called Computational Foundations of Cognitive Neuroscience, which I wrote for my class.

My hope is that this will be a living document, continuously improved as I get feedback.

If you share a single thing from my lab this year, please make it this competition.

eiko-fried.com/warn-d-machi...

🔗 doi.org/10.31222/osf...

Our aim is to equip you as early career researchers with the tools needed to lead grassroots change in research culture.

#reproducibility #openresearch #openscience #metasci #academicsky

Pre-print:

osf.io/preprints/ps...

Paper: www.sciencedirect.com/science/arti...

www.the-geyser.com/aixiv-nothin...

#MLSky #neurojobs #compneuro

Not just recognition responses, but also associated RTs!

And not just the semantic task, but also the structural task - where words overlap in orthography/phonology!

A thread!

The Department of Psychological & Brain Sciences at Johns Hopkins University (@jhu.edu) invites applications for a full-time tenured or tenure-track faculty member in Cognitive Psychology, in any area and at any rank!

Application + more info: apply.interfolio.com/178146

Researchers propose new constructs and measures faster than anyone can track. We (@anniria.bsky.social @ruben.the100.ci) built a search engine to check what already exists and help identify redundancies. It indexes 74,000 scales from ~31,500 instruments in APA PsycTests. 🧵1/3

My talk slides: williamngiam.github.io/talks/2025_A...

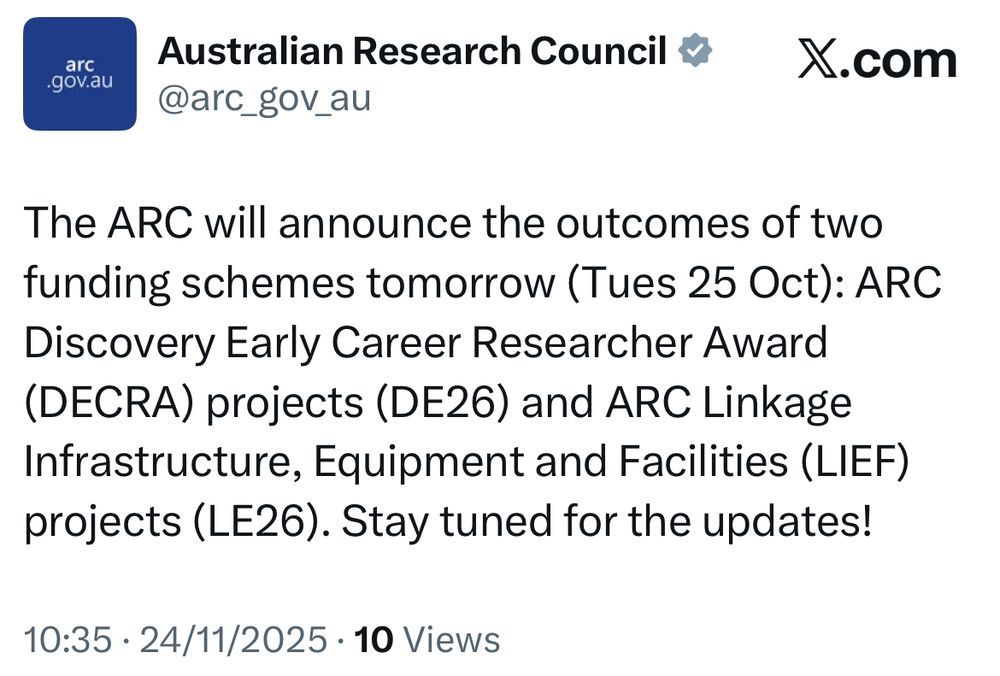

❗️Outcomes announced publicly for Discovery Early Career Researcher Award 2026❗️

See ARC's RMS for list ➡️ https://rms.arc.gov.au/RMS/Report/Download/Report/a3f6be6e-33f7-4fb5-98a6-7526aaa184cf/285

/bot

In recent times these announcements have been around 11am Canberra time. With 2 schemes on the same day, I assume they’ll announce one of them later in the day (probably DECRA first).
