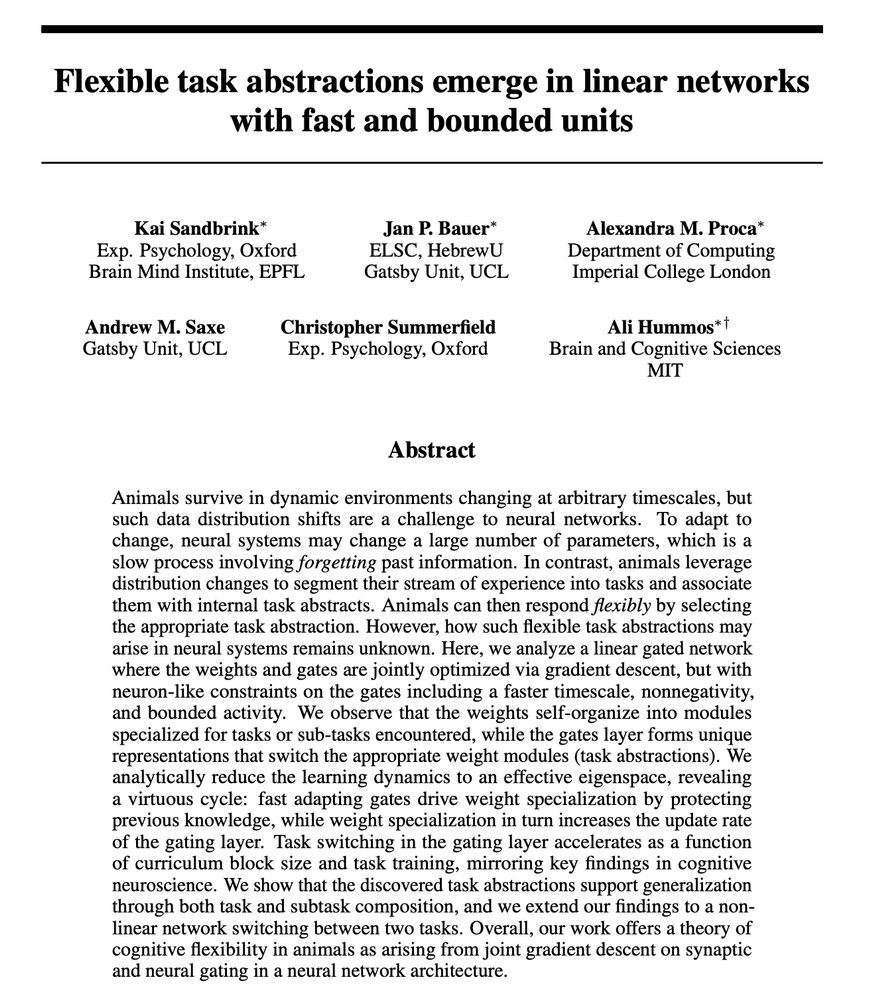

Kai Sandbrink

@ackaisa.bsky.social

880 followers

490 following

14 posts

Computational cognitive neuroscience PhD Student, Oxford & EPFL
