I am a CS PhD in LLM Agents @imperial-nlp.bsky.social with @marekrei.bsky.social.

This is our latest work on LLM Agents:

StateAct: arxiv.org/abs/2410.02810 (outperforming ReAct by ~10%).

Feel free to reach out for collaboration.

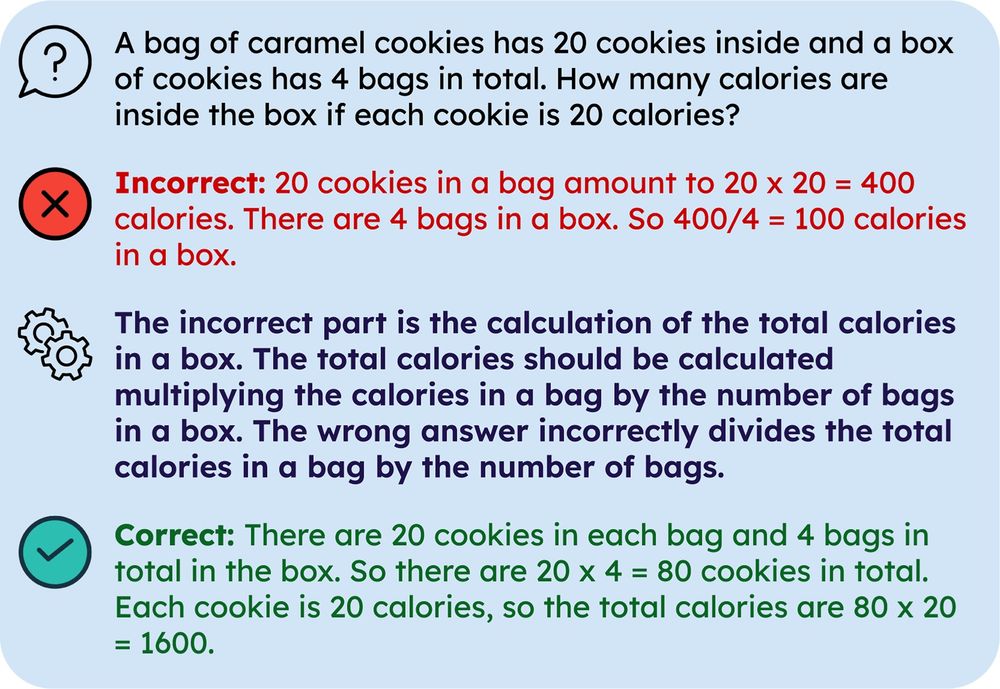

When LLMs learn from previous incorrect answers, they typically observe corrective feedback in the form of rationales explaining each mistake. In our new preprint, we find these rationales do not help; in fact, they hurt performance!

🧵

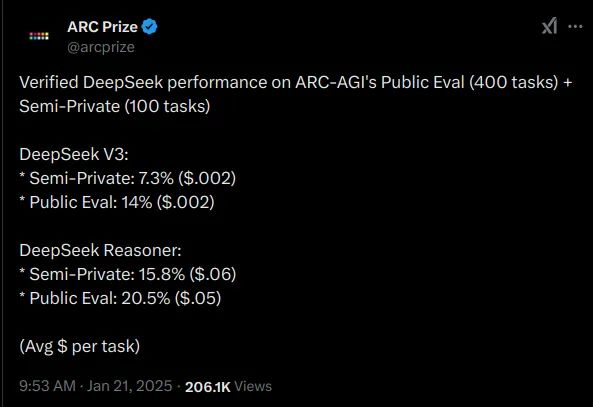

A blog post: itcanthink.substack.com/p/deepseek-r...

It's really very good at ARC-AGI, for example:

Paper: https://buff.ly/40I6s4d

I have been running Webshop with older GPTs (e.g. gpt-3.5-turbo-1106 / -0125 / -instruct). On 5 different code repos (ReAct, Reflexion, ADaPT, StateAct) I am getting scores of 0%, while previously the scores were at ~15%.

Any thoughts, anyone?

Feel free to mention yourself and others. :)

go.bsky.app/LUrLWXe

#LLMAgents #LLMReasoning

I am PhD-ing at Imperial College under @marekrei.bsky.social’s supervision. I am broadly interested in LLM/LVLM reasoning & planning 🤖 (here’s our latest work arxiv.org/abs/2411.04535)

Do reach out if you are interested in these (or related) topics!

To follow us all, click 'follow all' in the starter pack below:

go.bsky.app/Bv5thAb
