Amr Farahat

@amr-farahat.bsky.social

150 followers · 390 following · 27 posts

MD/M.Sc/PhD candidate @ESI_Frankfurt and IMPRS for neural circuits @MpiBrain. Medicine, Neuroscience & AI

https://amr-farahat.github.io/

Reposted by Amr Farahat

Nature Neuroscience

@natneuro.nature.com

· Apr 11

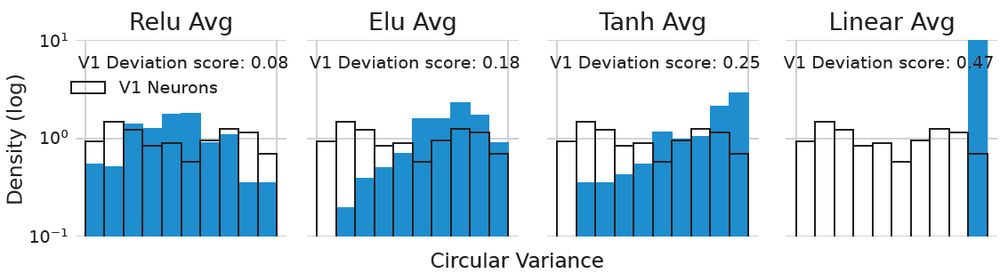

Integrating multimodal data to understand cortical circuit architecture and function - Nature Neuroscience

This paper discusses how experimental and computational studies integrating multimodal data, such as RNA expression, connectivity and neural activity, are advancing our understanding of the architectu...

www.nature.com

Amr Farahat

@amr-farahat.bsky.social

· Mar 15

Amr Farahat

@amr-farahat.bsky.social

· Mar 13

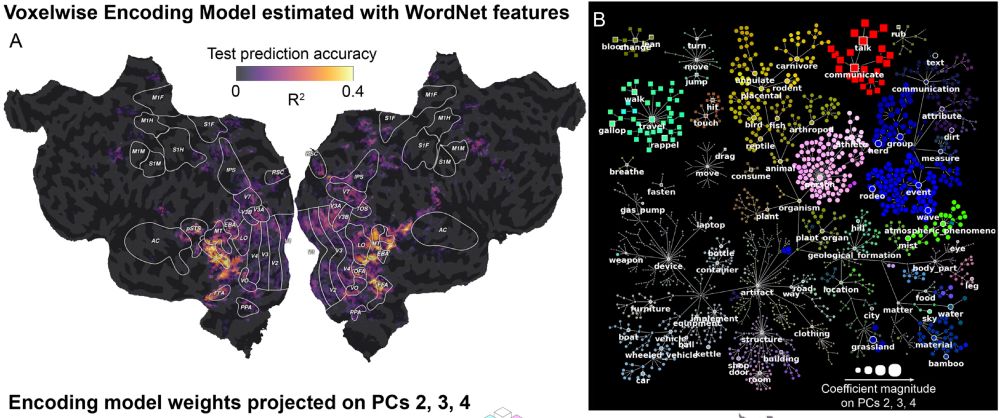

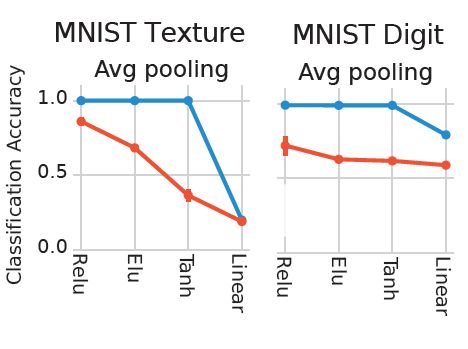

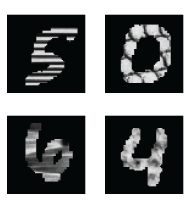

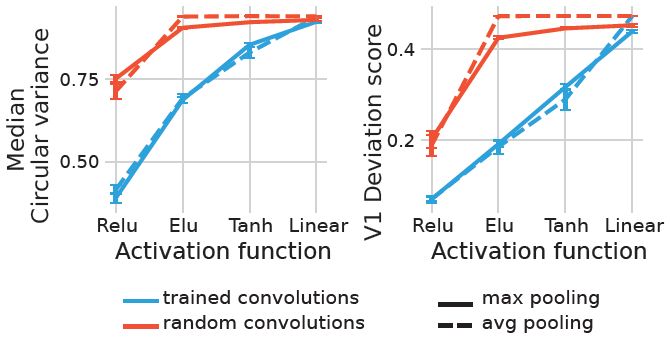

Neural responses in early, but not late, visual cortex are well predicted by random-weight CNNs with sufficient model complexity

Convolutional neural networks (CNNs) were inspired by the organization of the primate visual system, and in turn have become effective models of the visual cortex, allowing for accurate predictions of...

www.biorxiv.org
