no, they're weirdly coy about this

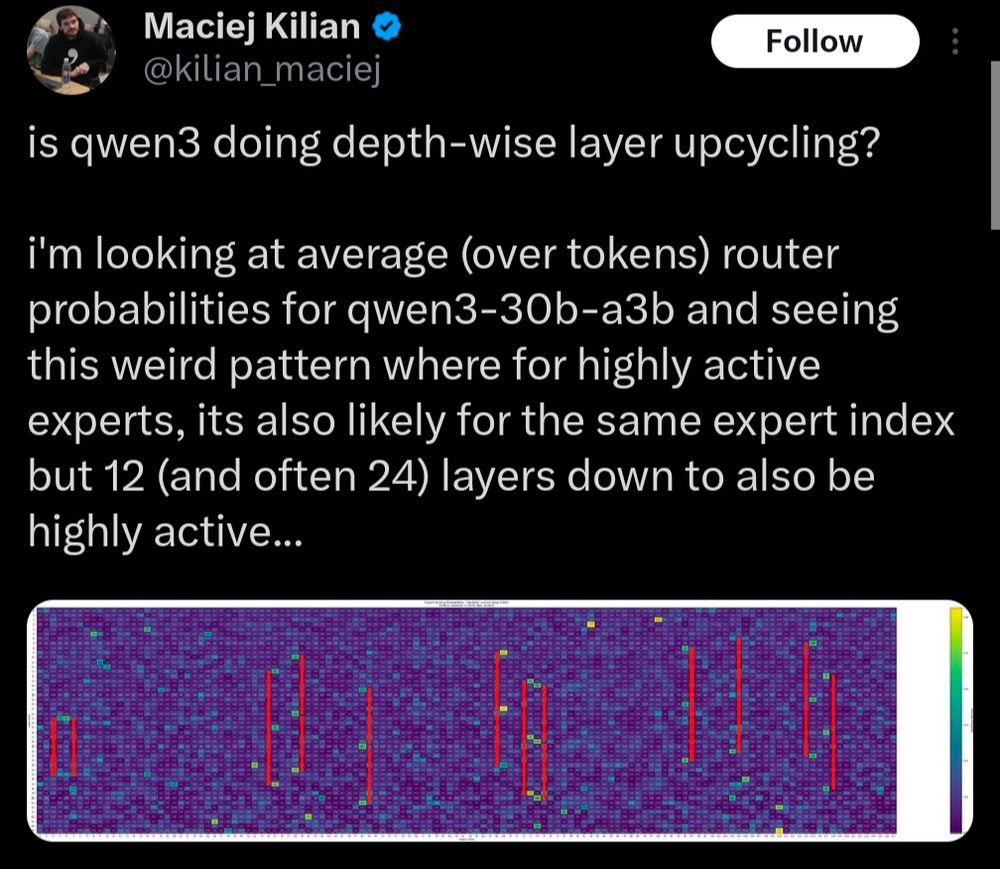

they produced a 235B, 480B, and then 1T model within a span of like 2 months though and there are some artifacts in the 30B

this is also the subject of enduring rumors which I have seen reasonably trustworthy people vouch for

November 5, 2025 at 10:16 PM

holy shit man (from the "Grokipedia" page on Eugenics)

![Contemporary data reveal dysgenic fertility patterns, where individuals with lower intelligence reproduce at higher rates than those with higher intelligence, resulting in a net decline in genotypic IQ. In the United States, analyses of birth cohorts from 1900 to 1979 show a consistent negative correlation between IQ and number of children, projecting a loss of 1-2 IQ points per generation if unchecked.[159] Similar trends appear in other populations, including China, where fertility inversely tracks educational attainment as a proxy for cognitive ability, despite environmental gains like the Flynn effect masking underlying genetic deterioration.[49] This reversal of natural selection—once favoring survival and reproduction of the able—arises from modern welfare systems decoupling reproduction from fitness costs, leading to cumulative societal costs in reduced innovation and increased dependency.](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:pjibmbyyshoh72bpham5zpgc/bafkreidud2p6hklitejpsyivsr6ncyo2me7dg4ofkdt4oz7ueg5vmu7k3q@jpeg)

October 28, 2025 at 3:29 AM

xAI's grokipedia on the subject of "Hitler"

![Adolf Hitler (20 April 1889 – 30 April 1945) was an Austrian-born German politician who served as the dictator of Germany from 1933 to 1945, first as Chancellor and then as Führer und Reichskanzler after consolidating absolute power.[1][2][3] He founded and led the National Socialist German Workers' Party (NSDAP), known as the Nazi Party, transforming it into a mass movement that capitalized on post-World War I grievances, economic depression, and nationalist sentiments to seize control through legal means and subsequent purges.[4][5] Under Hitler's leadership, Nazi Germany achieved rapid economic recovery from the Great Depression through massive public works, rearmament, and deficit financing, reducing unemployment from over six million in 1933 to near full employment by 1939, though this laid the groundwork for aggressive militarization.[6] His regime enacted racial laws excluding Jews from society, escalating to the Holocaust—the systematic genocide of approximately six million Jews between 1941 and 1945, alongside millions of others including Roma, disabled individuals, and political dissidents—driven by Hitler's longstanding antisemitic ideology outlined in Mein Kampf.[7][8][9] Hitler initiated World War II in Europe by ordering the invasion of Poland on 1 September 1939, prompting declarations of war from Britain and France, and pursued expansionist policies that conquered much of Europe before ultimate defeat in 1945, resulting in an estimated 70–85 million deaths worldwide.[10][3] Facing imminent Soviet capture of Berlin, Hitler died by suicide via gunshot and cyanide in his Führerbunker on 30 April 1945.[9][11][12]](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:pjibmbyyshoh72bpham5zpgc/bafkreicfn576hjhpunhfl2wrksfjqpyf3soporea5pvanpiyxhg34z5wnu@jpeg)

October 28, 2025 at 2:57 AM

in the paper linked next to that sentence it seems that this method doesn't touch attention; rather, it just creates a special case in pretraining documents where tokens are presented out of order
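The paper's exact scheme isn't spelled out here, so as a stand-in, here's fill-in-the-middle (FIM), a well-known data-only trick that also presents tokens out of order while leaving ordinary causal attention untouched. It may or may not resemble what the linked paper does; the sentinel strings and the `fim_transform` name are hypothetical.

```python
import random

# Hypothetical sentinel strings; real tokenizers define their own special tokens.
PRE, SUF, MID = "<PRE>", "<SUF>", "<MID>"

def fim_transform(tokens, rng):
    """Rearrange one document into prefix-suffix-middle order.

    Only the training data changes: the model still uses plain causal
    attention, it just sees the middle span *after* the suffix.
    """
    if len(tokens) < 3:
        return list(tokens)  # too short to split into three non-empty spans
    # Two distinct cut points with 0 < i < j < len(tokens), so every span is non-empty.
    i, j = sorted(rng.sample(range(1, len(tokens)), 2))
    prefix, middle, suffix = tokens[:i], tokens[i:j], tokens[j:]
    return [PRE] + prefix + [SUF] + suffix + [MID] + middle

doc = "def add ( a , b ) : return a + b".split()
print(fim_transform(doc, random.Random(0)))
```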

October 26, 2025 at 6:51 PM

finally invented The VRAM Torturer from the hit novel "The VRAM Torturer is a pretty good quality-of-life feature for your training library"

October 18, 2025 at 5:24 AM

we love you DeepSeek

they accomplished this on an existing base model with only 1T tokens of additional continued pretraining. if you take their $6M figure for pretraining V3 at $2/H800/hr for 15T tokens (in practice much lower, since they own their compute), they spent about $400K on this
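The back-of-envelope math, as a quick sketch: it assumes cost scales linearly with token count at the same per-token price as V3 pretraining (same model size, same $2/H800/hr rental assumption). The constant and function names are just illustrative.

```python
# Figures from the post: ~$6M to pretrain DeepSeek-V3 on ~15T tokens at a
# notional $2/H800/hr. Actual cost is likely lower, since they own their compute.
V3_PRETRAIN_COST_USD = 6_000_000
V3_PRETRAIN_TOKENS = 15e12
CONTINUED_PRETRAIN_TOKENS = 1e12

def estimate_cost(tokens, ref_cost=V3_PRETRAIN_COST_USD, ref_tokens=V3_PRETRAIN_TOKENS):
    """Scale the reference cost linearly by token count (assumes equal cost per token)."""
    return ref_cost * tokens / ref_tokens

print(f"${estimate_cost(CONTINUED_PRETRAIN_TOKENS):,.0f}")  # $400,000
```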

September 29, 2025 at 6:57 PM

arxiv.org/abs/2509.15207

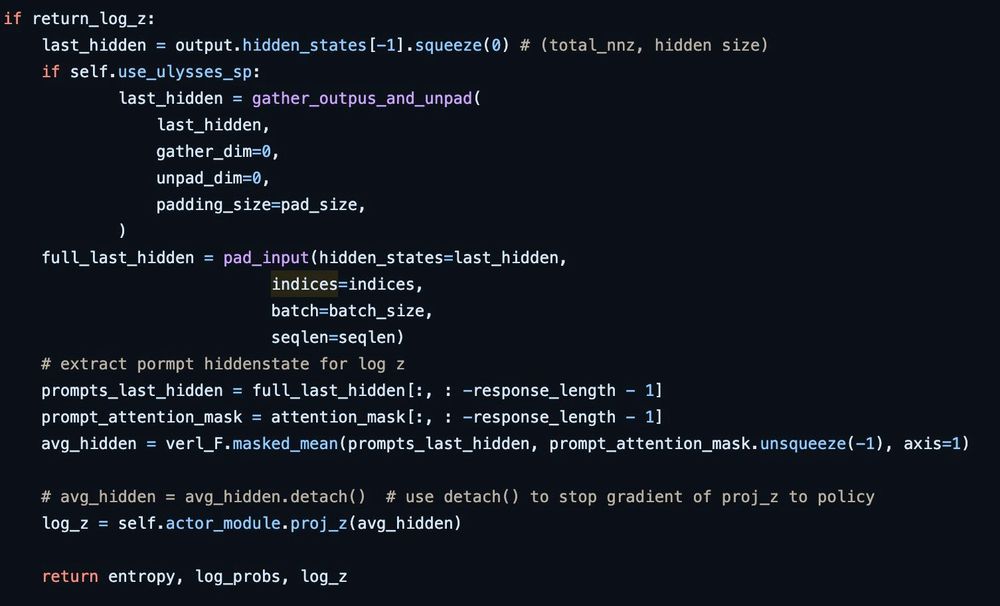

Cool paper that (seemingly) achieves better results than plain GRPO by using the model itself to approximate a computationally intractable reward distribution.
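For reference, the "plain GRPO" baseline scores each sampled completion relative to the other completions for the same prompt, with no learned value model. A minimal sketch of that advantage computation (this is the baseline, not the paper's method; `grpo_advantages` is a hypothetical name):

```python
import statistics

def grpo_advantages(rewards, eps=1e-6):
    """Plain GRPO advantages: normalize each completion's reward by the mean and
    std of its group (all completions sampled for the same prompt)."""
    mean = statistics.mean(rewards)
    std = statistics.pstdev(rewards)  # population std; some implementations use the sample std
    return [(r - mean) / (std + eps) for r in rewards]

# e.g. four completions for one prompt, scored 1 (correct) or 0 (incorrect)
print(grpo_advantages([1.0, 0.0, 0.0, 1.0]))
```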

Uses a somewhat odd mean-pooling of non-detached hidden states, fed through a 3-layer MLP whose output is included in the final loss.
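A rough forward-pass sketch of that kind of head, assuming masked mean-pooling over token positions and ReLU activations. Layer widths, activation, initialization, and every name here are hypothetical, not taken from the paper.

```python
import math
import random

def masked_mean_pool(hidden, mask):
    """Average the hidden-state vectors of real tokens (mask == 1), skipping padding."""
    n = sum(mask)
    dim = len(hidden[0])
    return [sum(h[d] for h, m in zip(hidden, mask) if m) / n for d in range(dim)]

def init_linear(n_in, n_out, rng):
    """Uniform init scaled by 1/sqrt(n_in); weights stored as n_out rows of length n_in."""
    bound = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def linear(x, weights, bias):
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]

def mlp_head(x, layers):
    """3-layer MLP (Linear -> ReLU -> Linear -> ReLU -> Linear) producing one scalar."""
    for i, (weights, bias) in enumerate(layers):
        x = linear(x, weights, bias)
        if i < len(layers) - 1:
            x = [max(0.0, v) for v in x]  # ReLU on hidden layers only
    return x[0]

# Hypothetical sizes: 8-dim hidden states, 16-unit hidden layers, 5 tokens (2 padding).
rng = random.Random(0)
layers = [init_linear(8, 16, rng), init_linear(16, 16, rng), init_linear(16, 1, rng)]
hidden = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(5)]
mask = [1, 1, 1, 0, 0]

# In an autograd framework these hidden states would NOT be detached, so the head's
# loss term would also send gradients back into the policy model. Plain Python has
# no autograd, so this only shows the forward pass.
score = mlp_head(masked_mean_pool(hidden, mask), layers)
print(score)
```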

September 21, 2025 at 4:46 AM

one very fun thing about Muon is the smoothness of the loss graph

when Kimi K2 came out I remember a lot of people salivating over this

September 15, 2025 at 6:49 PM

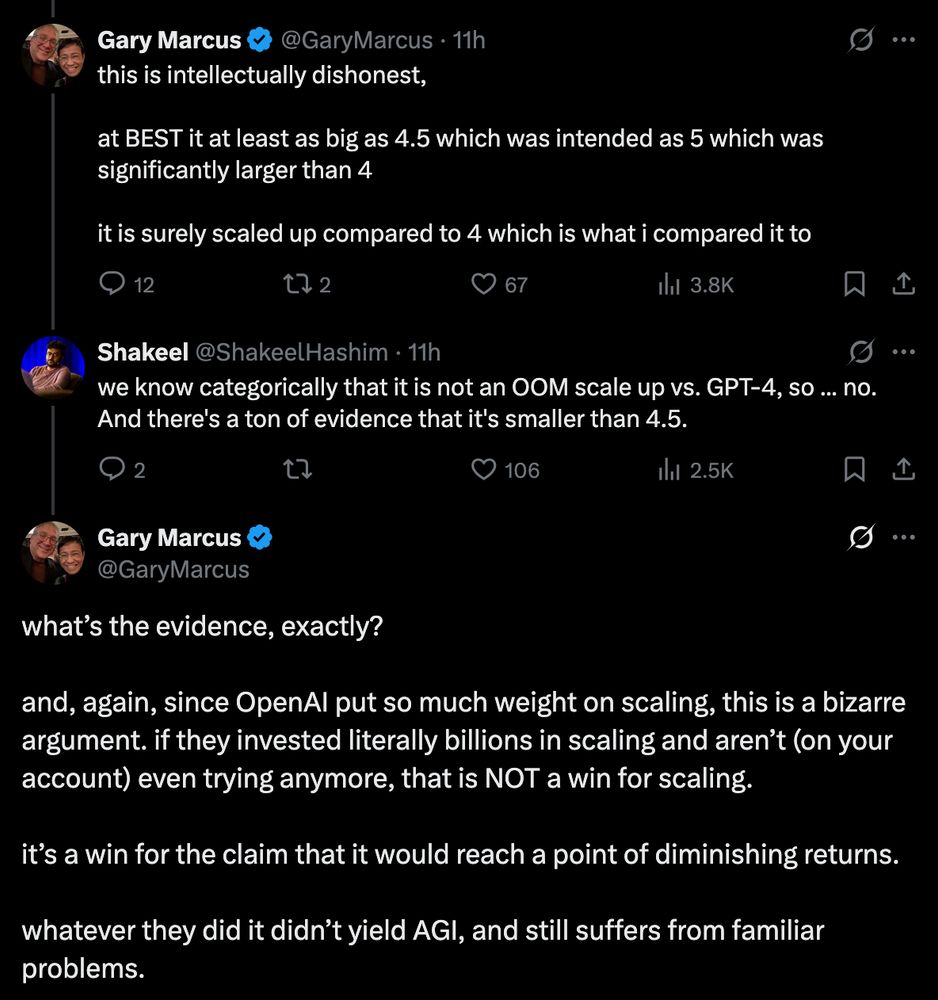

the posts I merged in my head to arrive at this were these; somehow the "OOM scale-up" thing in the non-GM reply made me put the number at 16,000B (10x GPT-4's ~1,600B)

September 4, 2025 at 1:52 AM

ok i appear to have accidentally merged two posts in my mind

he does seem to believe that GPT-5 is *at least* as big as GPT-4.5, if not bigger, and that 4.5 is significantly larger than GPT-4

September 4, 2025 at 1:49 AM

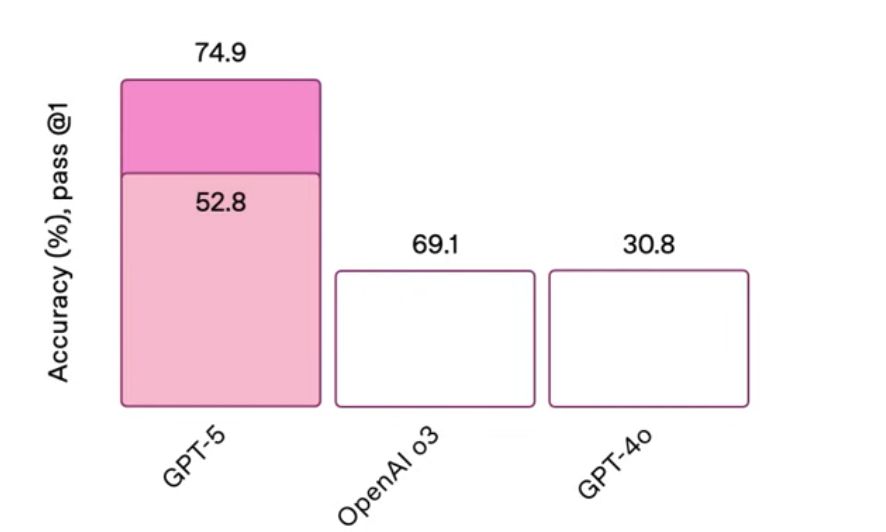

new OpenAI chart crime just dropped

August 7, 2025 at 5:17 PM

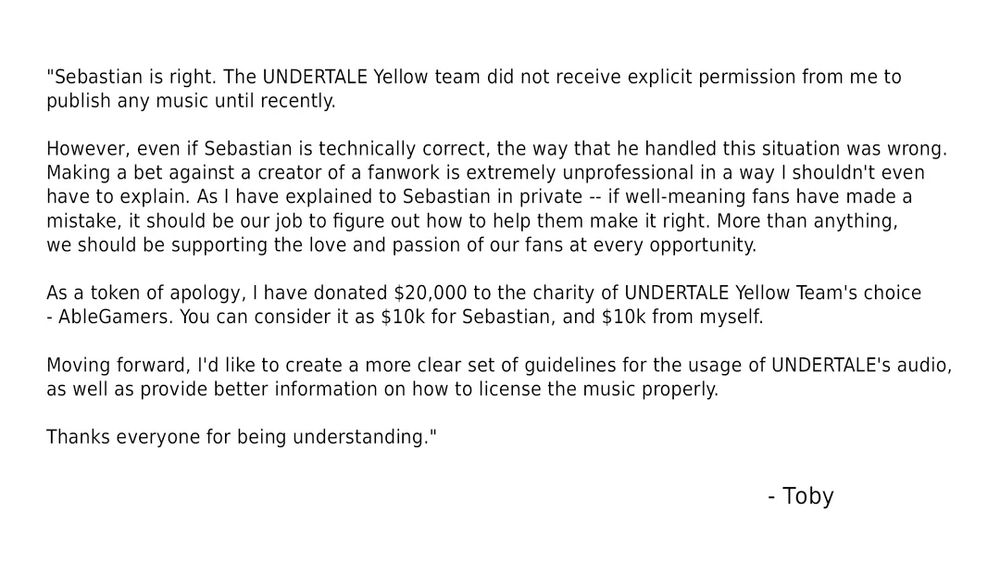

idk if you remember, but when a popular fangame (UNDERTALE Yellow) came out, the CEO went online and taunted the creators, saying they were "probably" violating copyright, and bet them $10K that they had never gotten permission

causing toby fox to release this statement scolding him

June 28, 2025 at 5:21 PM

also i saw you being unsure of whether 4o was referenced in the paper bc of the source but yeah, the paper says it was 4o

June 21, 2025 at 8:32 PM

one big flaw with the paper is that they assume for some reason that "output steps required to solve problem" is a direct analogue to difficulty. their results are mostly attributable to "the number of output steps scales quadratically with complexity"
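To make that objection concrete: if a model has a fixed chance of slipping on each output step, longer solutions fail more often even when no individual step gets harder. A toy sketch, assuming independent per-step errors, a 99.5% per-step accuracy, and a hypothetical puzzle needing n² steps (none of these numbers come from the paper):

```python
def p_error_free(steps, per_step_acc):
    """Chance that all `steps` outputs are correct, assuming independent per-step errors."""
    return per_step_acc ** steps

def required_steps(n):
    """Hypothetical puzzle whose solution length grows quadratically in its size n."""
    return n * n

# Per-step accuracy is held fixed: no individual step gets any "harder" as n grows.
for n in (5, 10, 20, 40):
    k = required_steps(n)
    print(f"n={n:>2}  steps={k:>4}  P(no mistakes)={p_error_free(k, 0.995):.3f}")
```

Under these toy assumptions, success drops from roughly 88% at n=5 to under 0.1% at n=40, purely from output length.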

June 16, 2025 at 11:56 PM

i'm not really clear on how that works tbh. how does a car pulling into the bike lane in front of the open door prevent you from going around the door? the bike lane in question seems narrow enough that you wouldn't be able to get around an open car door without going into the car lane anyway

June 16, 2025 at 11:43 PM

Here's Claude 3.5 Sonnet's attempt at "the bluesky logo" as an SVG. I actually really like it.

December 31, 2024 at 8:03 PM

Here's QwQ being kind of adorable and kind of stupid.

November 28, 2024 at 4:07 AM

clipping stuff gives me so much joy relative to how useless it is to me

November 20, 2024 at 6:56 AM

![Fuentes' explicit critiques of Jewish influence in media, finance, and politics—framed by him as opposition to perceived anti-white agendas—along with statements questioning Holocaust death tolls and endorsing hierarchical governance over democracy, have resulted in his classification as a white supremacist and antisemite by advocacy groups like the Anti-Defamation League (ADL) and Southern Poverty Law Center (SPLC), organizations critics argue exhibit ideological bias against non-leftist dissent.[4][5] These positions, coupled with associations like dining with former President Trump and Kanye West in 2022, have amplified his visibility while prompting deplatforming from platforms including YouTube, Twitter (pre-Musk), and payment services like PayPal, reflecting broader tensions over speech boundaries in digital spaces.[6]](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:pjibmbyyshoh72bpham5zpgc/bafkreie6xp2d4g3veuxqigbmw6457hurqumz5kaw4onzrk4pk4ktyyii24@jpeg)