Berk Ustun

@berkustun.bsky.social

2.6K followers

450 following

41 posts

Assistant Prof at UCSD. I work on safety, interpretability, and fairness in machine learning. www.berkustun.com

Reposted by Berk Ustun

Ben Recht

@beenwrekt.bsky.social

· Sep 8

The Actuary's Final Word on Algorithmic Decision Making

Paul Meehl's foundational work "Clinical versus Statistical Prediction," provided early theoretical justification and empirical evidence of the superiority of statistical methods over clinical judgmen...

arxiv.org

Berk Ustun

@berkustun.bsky.social

· Aug 1

Reposted by Berk Ustun

Connor Lawless

@lawlessopt.bsky.social

· Jul 14

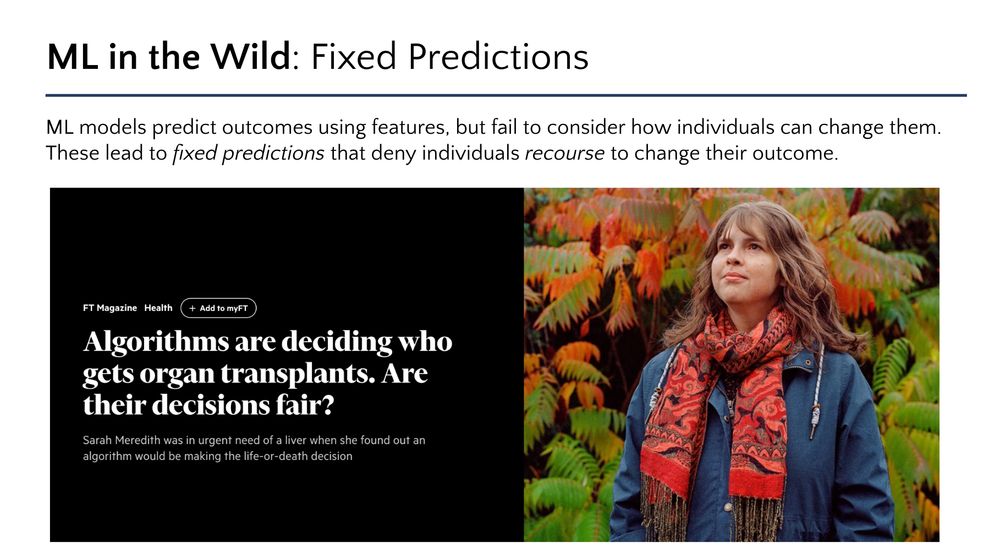

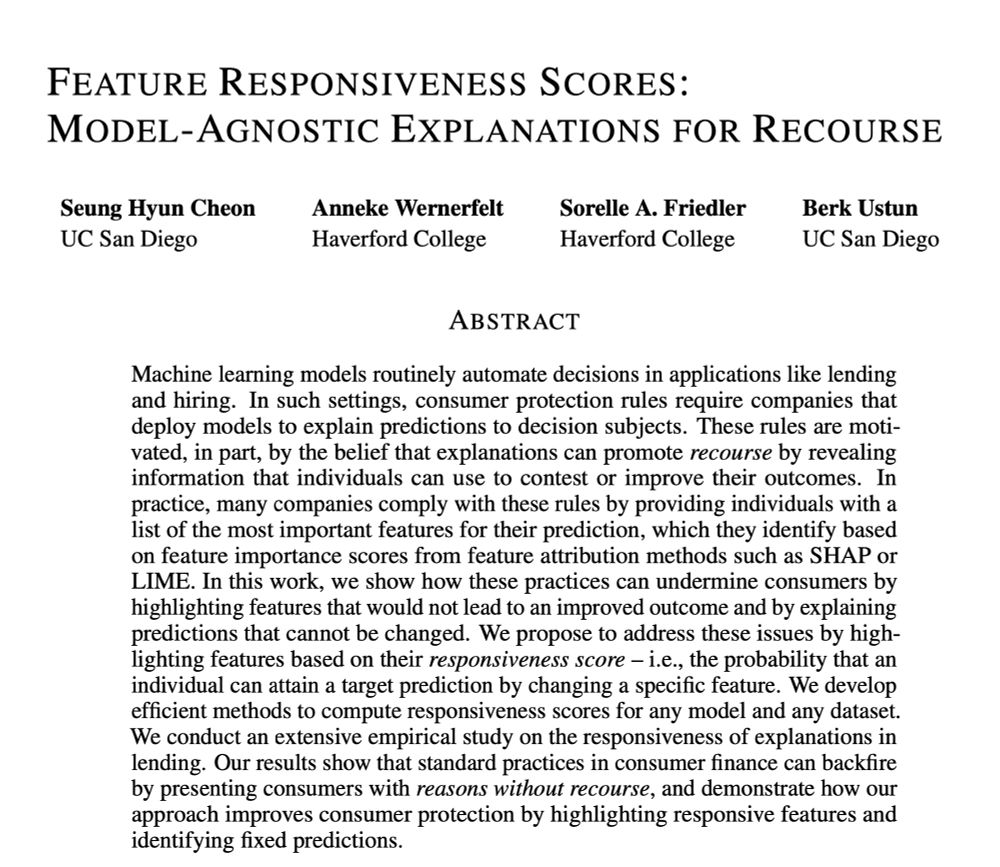

Understanding Fixed Predictions via Confined Regions

Machine learning models can assign fixed predictions that preclude individuals from changing their outcome. Existing approaches to audit fixed predictions do so on a pointwise basis, which requires ac...

arxiv.org

Reposted by Berk Ustun

Berk Ustun

@berkustun.bsky.social

· Apr 24

Reposted by Berk Ustun

Hailey Joren

@haileyjoren.bsky.social

· Apr 24

Reposted by Berk Ustun

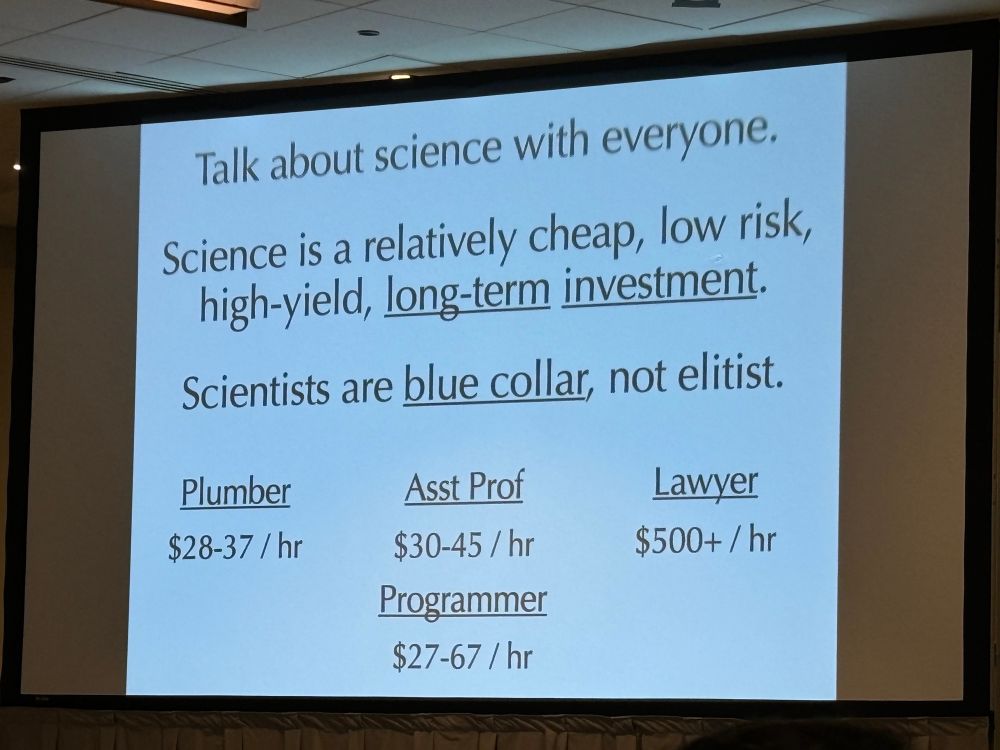

Karl Rohe

@karlrohe.bsky.social

· Apr 19

Reposted by Berk Ustun

Berk Ustun

@berkustun.bsky.social

· Feb 28

Reposted by Berk Ustun

Berk Ustun

@berkustun.bsky.social

· Jan 7

For a Year, They Lived Tied Together with 8 Feet of Social Distance

Artists Linda Montano and Tehching Hsieh

The promise was made on American Independence Day, 1983. "We, Linda Montano and Tehching Hsieh, plan to do a one year performance. We will stay together for...

www.messynessychic.com