Bionic Vision Lab

@bionicvisionlab.org

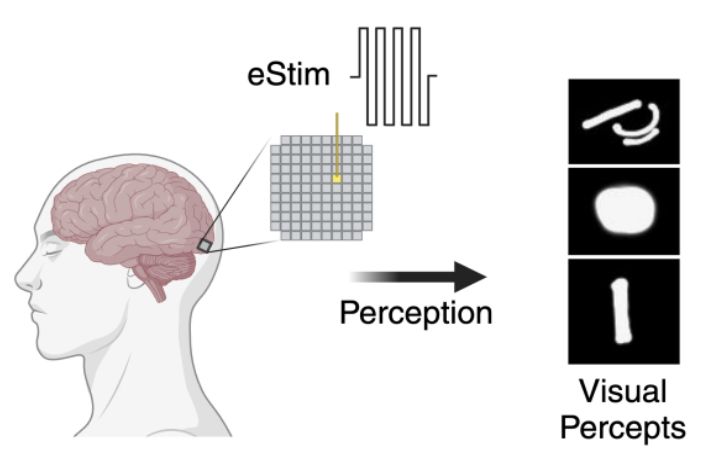

👁️🧠🖥️🧪🤖 What would the world look like with a bionic eye? Interdisciplinary research group at UC Santa Barbara. PI: @mbeyeler.bsky.social

#BionicVision #Blindness #NeuroTech #VisionScience #CompNeuro #NeuroAI

Bionic Vision Lab

@bionicvisionlab.org

· Jul 13

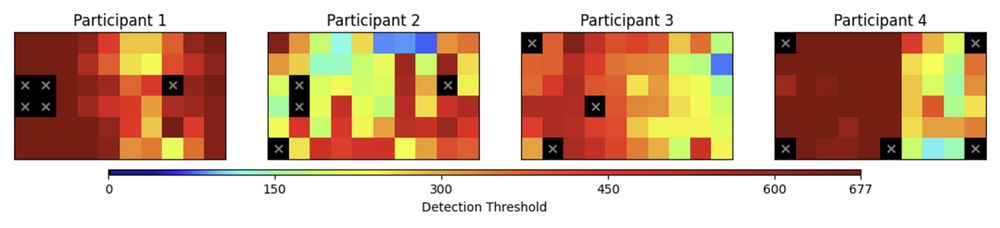

Efficient spatial estimation of perceptual thresholds for retinal implants via Gaussian process regression | Bionic Vision Lab

We propose a Gaussian Process Regression (GPR) framework to predict perceptual thresholds at unsampled locations while leveraging uncertainty estimates to guide adaptive sampling.

bionicvisionlab.org

Bionic Vision Lab

@bionicvisionlab.org

· Jul 13

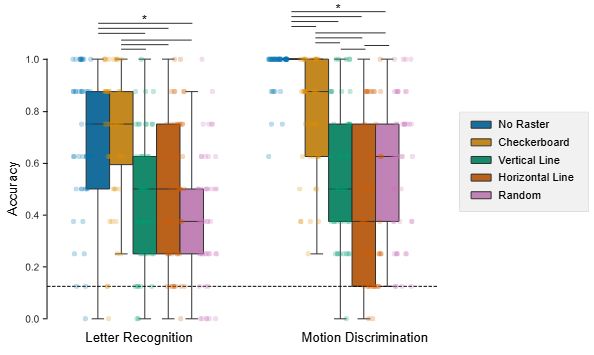

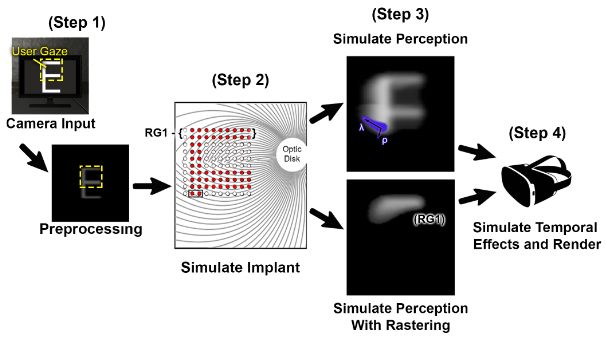

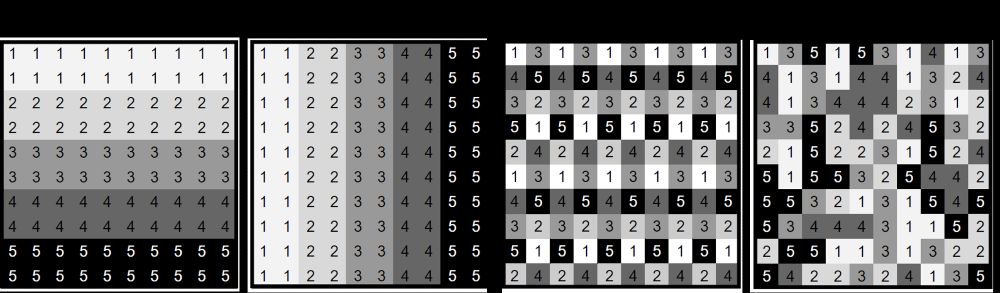

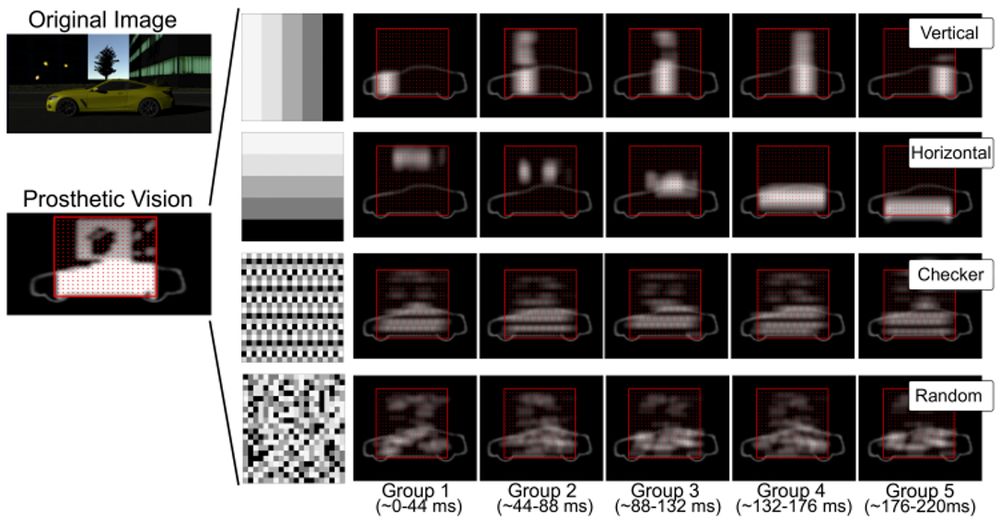

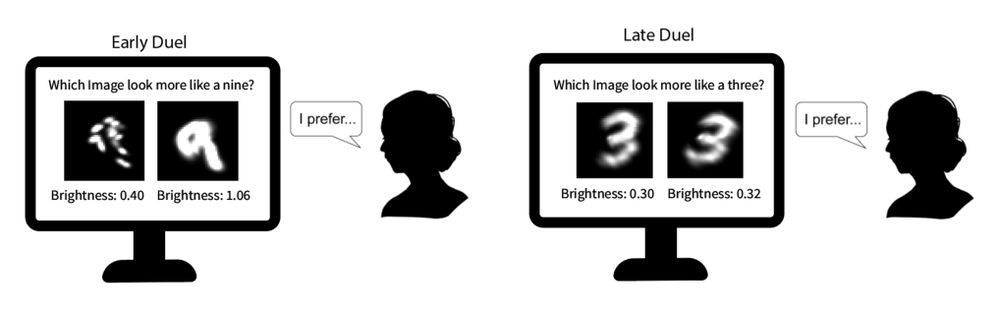

Evaluating deep human-in-the-loop optimization for retinal implants using sighted participants | Bionic Vision Lab

We evaluate HILO using sighted participants viewing simulated prosthetic vision to assess its ability to optimize stimulation strategies under realistic conditions.

bionicvisionlab.org

Reposted by Bionic Vision Lab

bionic-vision.org

@bionic-vision.org

· Jun 12

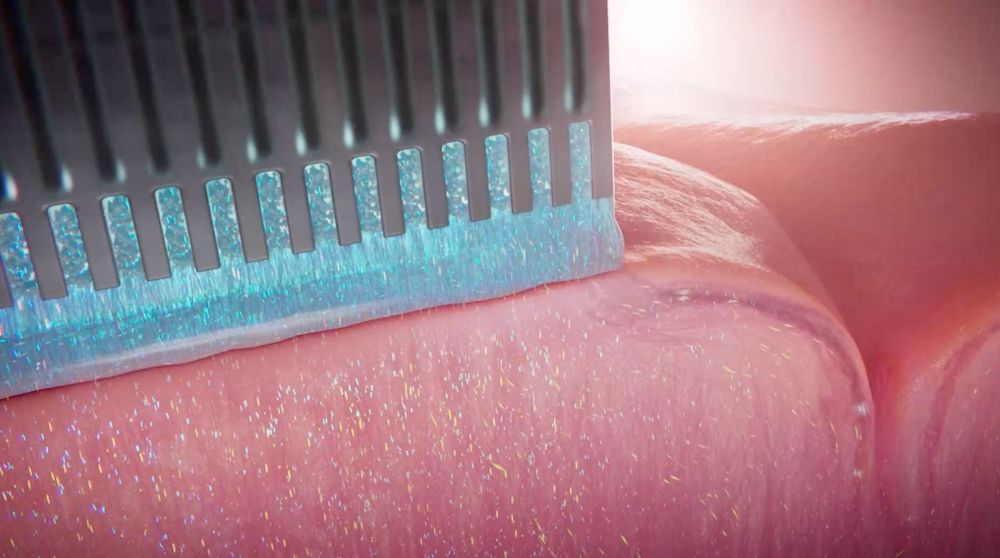

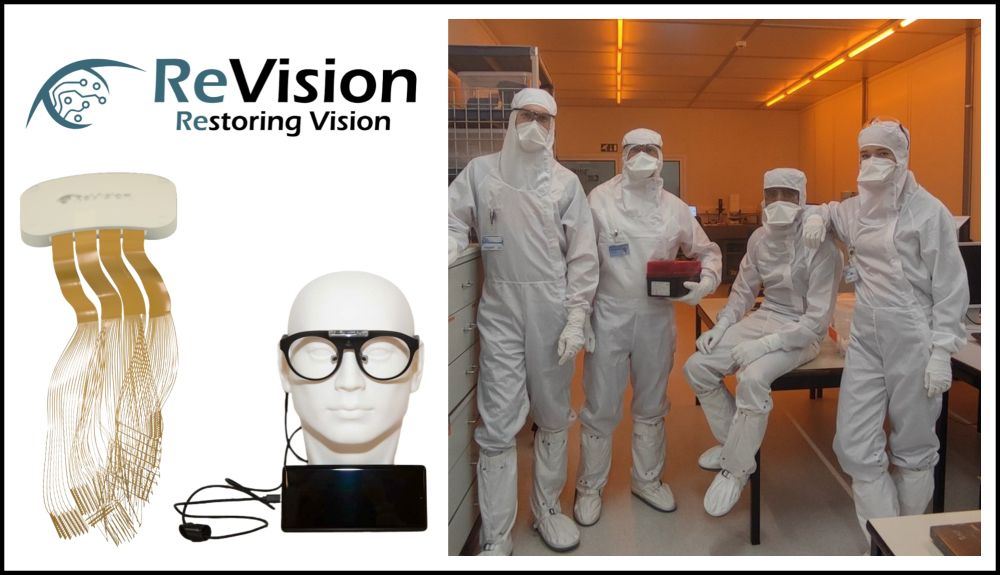

bionic-vision.org | Research Spotlights | Frederik Ceyssens, ReVision Implant

Frederik Ceyssens is Co-Founder and CEO of ReVision Implant, the company behind Occular: a next-generation cortical prosthesis designed to restore both central and peripheral vision through ultra-flex...

www.bionic-vision.org

Bionic Vision Lab

@bionicvisionlab.org

· Dec 17

Seeing the future: Michael Beyeler’s work in neurotechnology earns him top faculty award

Recognized for outstanding contributions in research, teaching and service, as well as “his dedication to innovation, excellence and student success,” the researcher behind the “bionic eye” receives o...

news.ucsb.edu