Michael Beyeler

@mbeyeler.bsky.social

1.1K followers

410 following

440 posts

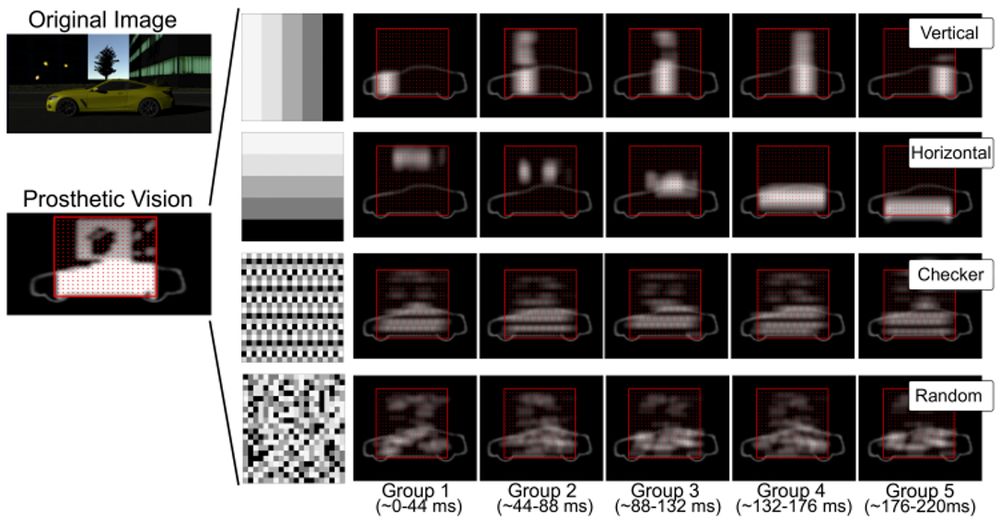

👁️🧠🖥️🧪🤖 Associate Professor in @ucsb-cs.bsky.social and Psychological & Brain Sciences at @ucsantabarbara.bsky.social. PI of @bionicvisionlab.org.

#BionicVision #Blindness #LowVision #VisionScience #CompNeuro #NeuroTech #NeuroAI

