Ex @MetaAI, @SonyAI, @Microsoft

Egyptian 🇪🇬

Webpage: egyptians-in-cs.github.io#/en/stats

It highlights the global Egyptian diaspora, and how widely Egyptian researchers are contributing across the world.

What started as a short list became a much broader story about visibility and representation.

🤝 Re-Align 2026 is made possible by an interdisciplinary team of co-organizers:

@bkhmsi.bsky.social, Brian Cheung, @dotadotadota.bsky.social, @eringrant.me, Stephanie Fu, @kushinm.bsky.social, @sucholutsky.bsky.social, and @siddsuresh97.bsky.social!

🌟 Joining us at Re-Align 2026 is a fantastic lineup of invited speakers covering ML, neuroscience, and cognitive science:

David Bau, Arturo Deza, @judithfan.bsky.social, @alonaf.bsky.social, @phillipisola.bsky.social, and Danielle Perszyk!

📣 We invite submissions on representational alignment, spanning ML, Neuroscience, CogSci, and related fields.

📝 Tracks: Short (≤5p), Long (≤10p), Challenge (blog)

⏰ Deadline: Feb 5, 2026 for papers

🔗 representational-alignment.github.io/2026/

Still grateful for what I achieved and for everyone who supported me.

Wishing us all a brighter year ahead. ✨

📅 Saturday, December 13th

⏰ 2:00 PM (GMT+2)

Register here: docs.google.com/forms/d/e/1F...

See you all there! :)

I’ll be presenting our work (Oral) on Nov 5, Special Theme session, Room A106-107 at 14:30.

Let’s talk brains 🧠, machines 🤖, and everything in between :D

Looking forward to all the amazing discussions!

We also wondered: if neuroscientists use functional localizers to map networks in the brain, could we do the same for MiCRo’s experts?

The answer: yes! The very same localizers successfully recovered the corresponding expert modules in our models!

One result I was particularly excited about is the emergent hierarchy we found across MiCRo layers:

🔺Earlier layers route tokens to Language experts.

🔻Deeper layers shift toward domain-relevant experts.

This emergent hierarchy mirrors patterns observed in the human brain 🧠

We find that MiCRo matches or outperforms baselines on reasoning tasks (e.g., GSM8K, BBH) and aligns better with human behavior (CogBench), while maintaining interpretability!!

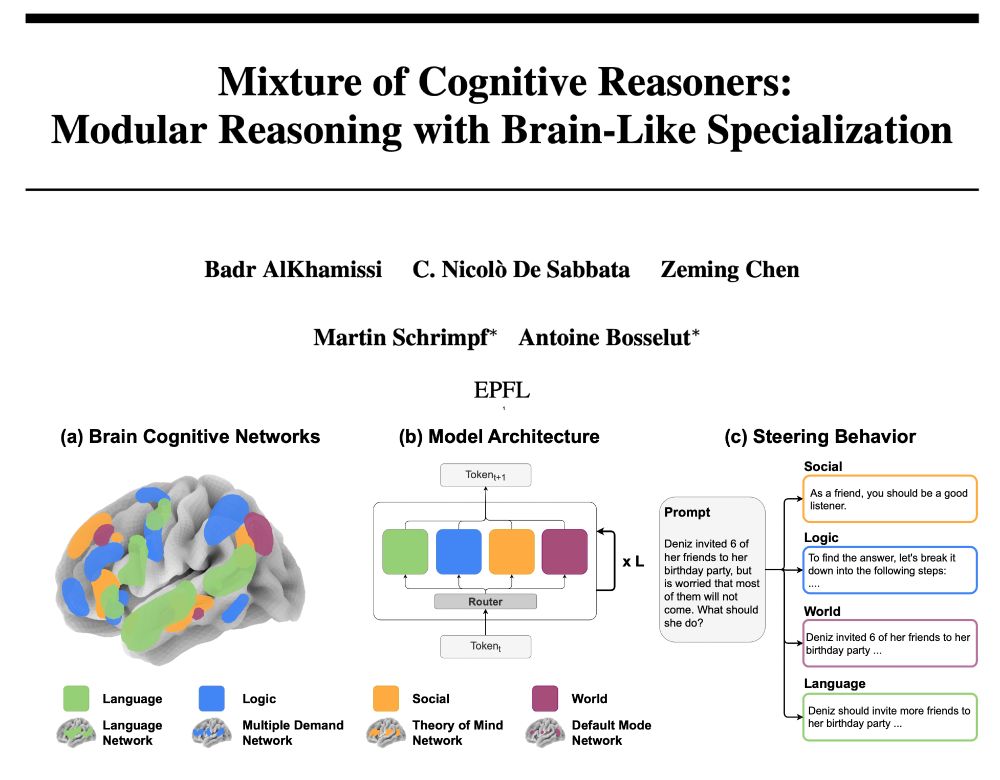

🧩 Recap:

MiCRo takes a pretrained language model and post-trains it to develop distinct, brain-inspired modules aligned with four cognitive networks:

🗣️ Language

🔢 Logic / Multiple Demand

🧍‍♂️ Social / Theory of Mind

🌍 World / Default Mode Network

We ask: What benefits can we unlock by designing language models whose inner structure mirrors the brain’s functional specialization?

More below 🧠👇

cognitive-reasoners.epfl.ch

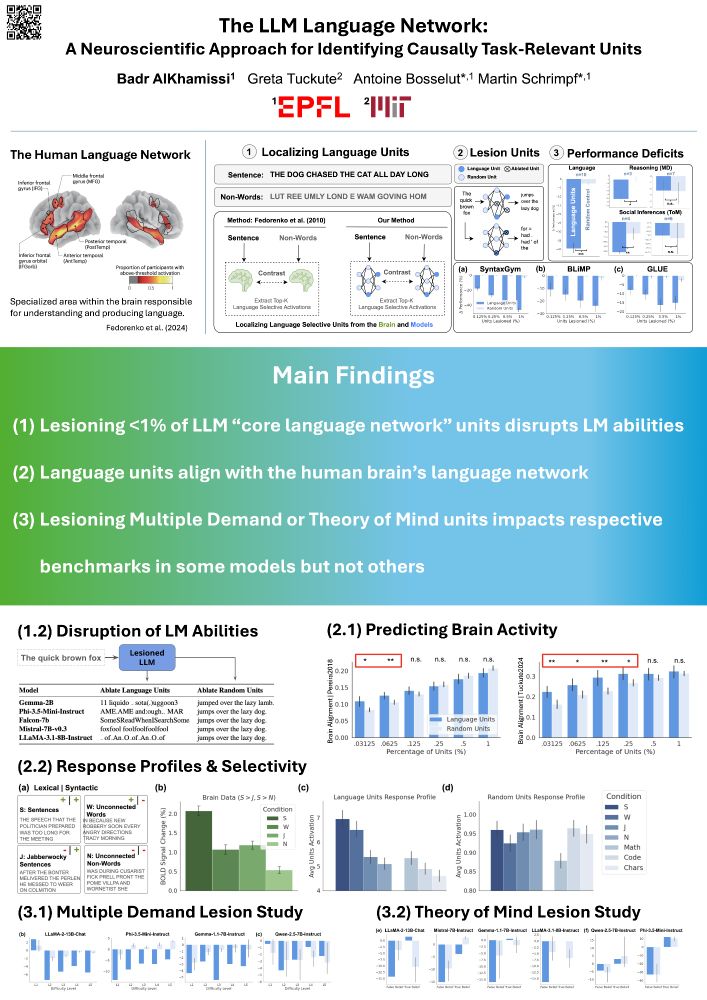

We show that by selectively targeting VLM units that mirror the brain’s visual word form area, models develop dyslexic-like reading impairments, while leaving other abilities intact!! 🧠🤖

Details in the 🧵👇

Neuroscience localizers (e.g., for language, multiple-demand) rediscover the corresponding experts in MiCRo, showing functional alignment with brain networks. However, the ToM localizer fails to identify the social expert.

Figures for MiCRo-Llama & MiCRo-OLMo.
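
For readers new to functional localizers, here is a rough sketch of the general idea, not our actual pipeline: contrast each unit's activations on a target condition against a control condition, keep the top-k most selective units, and check which expert they fall in. The Welch t-statistic, the `(expert, index)` unit ids, and the toy activations are all assumptions made up for illustration.

```python
import math
from collections import Counter
from statistics import mean, variance

def welch_t(target, control):
    """Welch's t-statistic for one unit's target vs. control activations."""
    diff = mean(target) - mean(control)
    se = math.sqrt(variance(target) / len(target) + variance(control) / len(control))
    if se == 0:
        # Degenerate case: no variance in either condition.
        return math.copysign(math.inf, diff) if diff else 0.0
    return diff / se

def localize(units, k):
    """Return the k unit ids with the largest target-over-control contrast."""
    ranked = sorted(units, key=lambda u: welch_t(*units[u]), reverse=True)
    return ranked[:k]

# Toy activations (invented): unit id -> (target condition, control condition).
TOY_UNITS = {
    ("language", 0): ([2.0, 2.2, 1.9], [0.1, 0.2, 0.0]),
    ("language", 1): ([1.5, 1.7, 1.6], [0.3, 0.2, 0.4]),
    ("logic", 0): ([0.5, 0.4, 0.6], [0.5, 0.6, 0.4]),
    ("world", 0): ([0.2, 0.1, 0.3], [0.3, 0.2, 0.1]),
}

top = localize(TOY_UNITS, k=2)
hits = Counter(expert for expert, _ in top)  # how many selected units each expert owns
print(top, hits)
```

If a localizer "rediscovers" an expert, most of the selected units should land inside that expert, as they do for the language units in this toy setup.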

Removing or emphasizing specific experts steers model behavior: ablating the logic expert hurts math accuracy, while suppressing the social expert slightly improves it, showcasing fine-grained control.
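
One simple way to picture expert ablation, assuming top-1 routing over per-token router logits (an assumption for this sketch, with made-up logit values): mask the ablated expert's logit so the router has to pick among the remaining ones.

```python
import math

def route(logits, ablate=()):
    """Pick the top-1 expert for a token, ignoring any ablated experts.

    `logits` maps expert name -> router score (hypothetical values below).
    """
    masked = {e: (-math.inf if e in ablate else s) for e, s in logits.items()}
    if all(s == -math.inf for s in masked.values()):
        raise ValueError("cannot ablate every expert")
    return max(masked, key=masked.get)

# Invented router scores for a single math token.
token_logits = {"language": 0.1, "logic": 2.0, "social": 0.5, "world": 0.3}

print(route(token_logits))                    # full model
print(route(token_logits, ablate={"logic"}))  # logic expert removed
```

Emphasizing an expert could be sketched the same way by adding a positive bias to its logit instead of masking it.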

Early layers route most tokens to the language expert; deeper layers route to domain-relevant experts (e.g., logic expert for math), matching task semantics.
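
A minimal sketch of how such a routing profile could be computed, assuming we have logged one expert choice per token per layer. The logged assignments below are invented purely to show the shape of the analysis.

```python
from collections import Counter

EXPERTS = ("language", "logic", "social", "world")

def routing_share(assignments):
    """Fraction of tokens sent to each expert, layer by layer.

    `assignments[layer]` is a non-empty list with one expert name per token.
    """
    shares = []
    for layer_tokens in assignments:
        counts = Counter(layer_tokens)
        total = len(layer_tokens)
        shares.append({e: counts[e] / total for e in EXPERTS})
    return shares

# Toy log for a math prompt (made-up data): 2 layers, 4 tokens each.
toy_log = [
    ["language", "language", "language", "language"],  # early layer
    ["language", "logic", "logic", "logic"],           # deeper layer
]

shares = routing_share(toy_log)
for layer, share in enumerate(shares):
    print(layer, share)
```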

Across 6 reasoning benchmarks (MATH, GSM8K, MMLU, BBH…), MiCRo outperforms both dense and “general‑expert” baselines, i.e., modular models with random specialist assignment in Stage 1.

• Stage 1: Expert training on small curated domain-specific datasets (~3k samples)

• Stage 2: Router training, experts frozen

• Stage 3: End-to-end finetuning on large instruction corpus (939k samples)

This seeds specialization effectively.
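
The three stages above can be sketched as a freeze/unfreeze schedule. This is an illustration only: the parameter-group names (`experts`, `router`, `shared`) are hypothetical, and the assumption that Stage 1 updates only the experts goes beyond what the thread states.

```python
from dataclasses import dataclass

# Hypothetical parameter-group names, used only for illustration.
ALL_GROUPS = frozenset({"experts", "router", "shared"})

@dataclass(frozen=True)
class Stage:
    name: str
    trainable: frozenset  # parameter groups that receive gradient updates
    data: str             # dataset description

SCHEDULE = (
    # Assumption: Stage 1 updates only the experts.
    Stage("experts", frozenset({"experts"}), "curated domain-specific data (~3k samples)"),
    # Stated in the thread: experts are frozen while the router trains.
    Stage("router", frozenset({"router"}), "not specified in the thread"),
    # End-to-end finetuning: everything is trainable.
    Stage("end_to_end", ALL_GROUPS, "large instruction corpus (939k samples)"),
)

def requires_grad(stage):
    """Map each parameter group to whether it is trainable in this stage."""
    return {group: group in stage.trainable for group in sorted(ALL_GROUPS)}

for stage in SCHEDULE:
    print(stage.name, requires_grad(stage), stage.data)
```

In a real training loop, these flags would decide which parameters get gradient updates before each stage starts.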

Humans rely on specialized brain networks—e.g., language, multiple-demand, ToM, default mode—for different cognitive tasks. MiCRo mimics this by dividing transformer layers into four experts.

Figure from @evfedorenko.bsky.social's review paper: www.nature.com/articles/s41...

Thrilled to share with you our latest work: “Mixture of Cognitive Reasoners”, a modular transformer architecture inspired by the brain’s functional networks: language, logic, social reasoning, and world knowledge.

1/ 🧵👇

"Hire Your Anthropologist!" 🎓

Led by the amazing Mai Alkhamissi & @lrz-persona.bsky.social, under the supervision of @monadiab77.bsky.social. Don’t miss it! 😄

arXiv link coming soon!

Looking forward to all the discussions! 🎤 🧠