Badr AlKhamissi

@bkhmsi.bsky.social

210 followers

350 following

46 posts

PhD at EPFL 🧠💻

Ex @MetaAI, @SonyAI, @Microsoft

Egyptian 🇪🇬

Posts

Media

Videos

Starter Packs

Reposted by Badr AlKhamissi

Reposted by Badr AlKhamissi

Reposted by Badr AlKhamissi

Badr AlKhamissi

@bkhmsi.bsky.social

· Jun 17

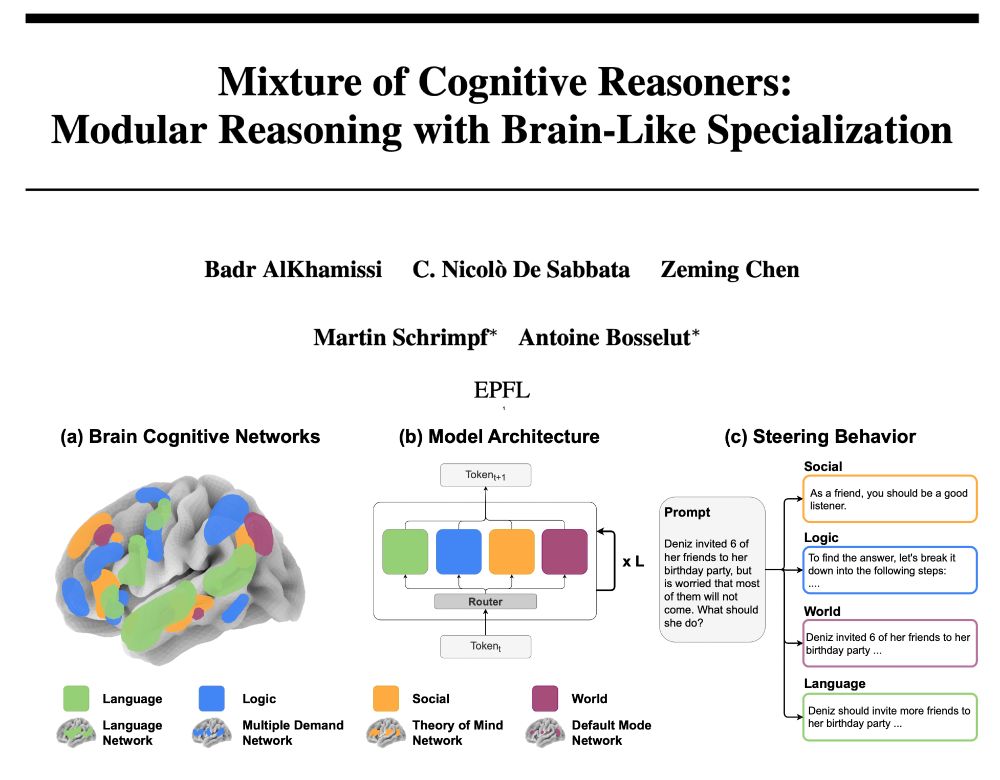

Mixture of Cognitive Reasoners: Modular Reasoning with Brain-Like Specialization

Human intelligence emerges from the interaction of specialized brain networks, each dedicated to distinct cognitive functions such as language processing, logical reasoning, social understanding, and ...

arxiv.org

Badr AlKhamissi

@bkhmsi.bsky.social

· Jun 17

Badr AlKhamissi

@bkhmsi.bsky.social

· Jun 17

Badr AlKhamissi

@bkhmsi.bsky.social

· Jun 17

Badr AlKhamissi

@bkhmsi.bsky.social

· Apr 30

Reposted by Badr AlKhamissi

Badr AlKhamissi

@bkhmsi.bsky.social

· Mar 5

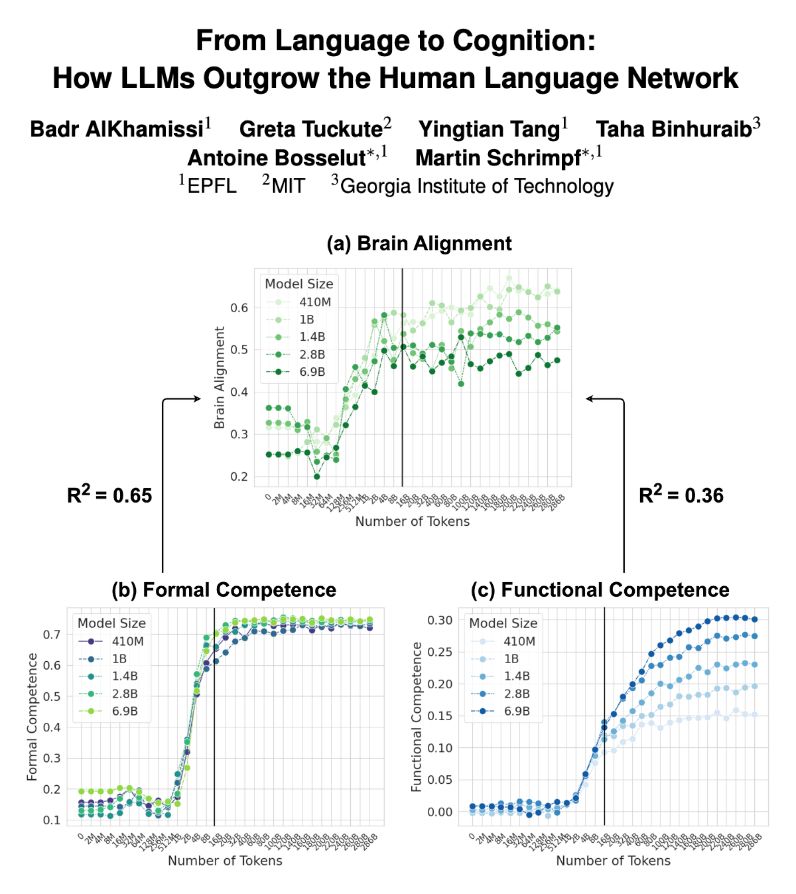

From Language to Cognition: How LLMs Outgrow the Human Language Network

Large language models (LLMs) exhibit remarkable similarity to neural activity in the human language network. However, the key properties of language shaping brain-like representations, and their evolu...

arxiv.org

Badr AlKhamissi

@bkhmsi.bsky.social

· Mar 5