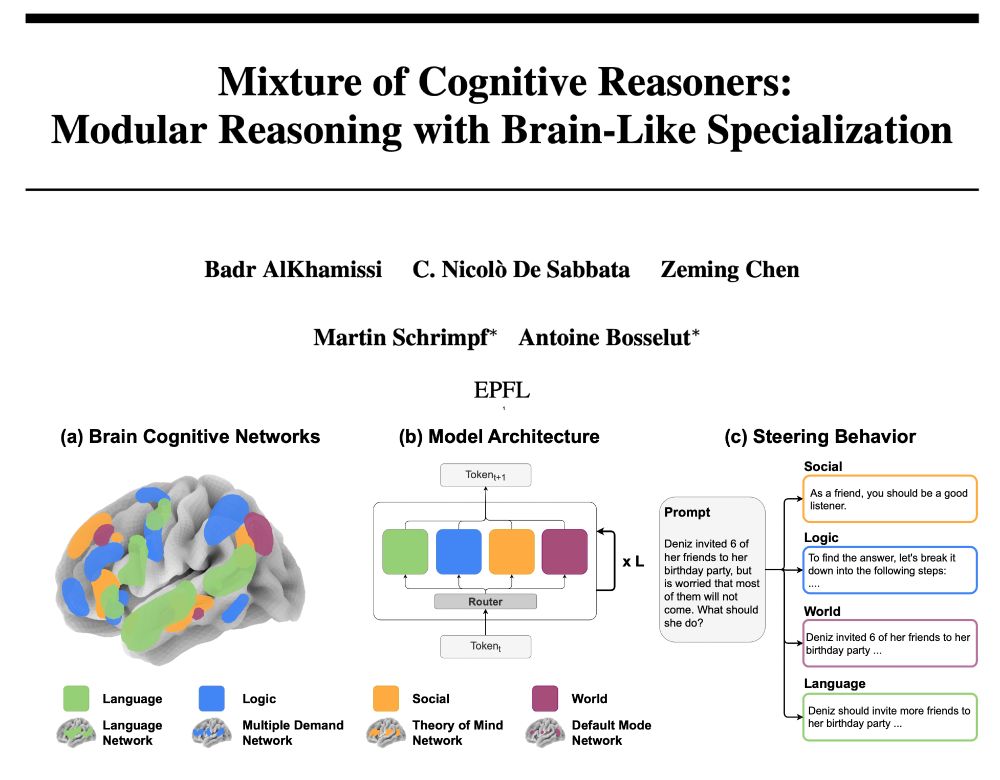

Antoine Bosselut

@abosselut.bsky.social

480 followers

130 following

57 posts

Helping machines make sense of the world. Asst Prof @icepfl.bsky.social; Before: @stanfordnlp.bsky.social @uwnlp.bsky.social AI2 #NLProc #AI

Website: https://atcbosselut.github.io/




Antoine Bosselut

@abosselut.bsky.social

· Jun 23