Christoph Molnar

@christophmolnar.bsky.social

6K followers

970 following

140 posts

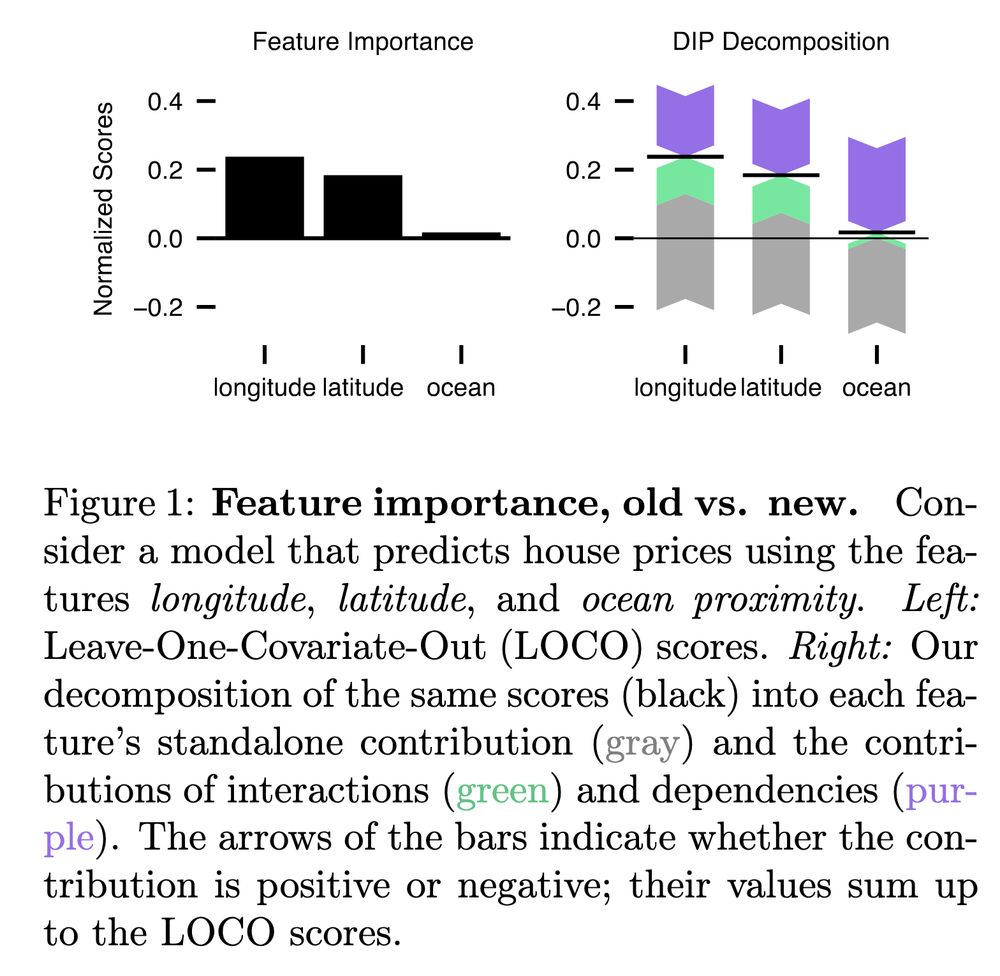

Author of Interpretable Machine Learning and other books

Newsletter: https://mindfulmodeler.substack.com/

Website: https://christophmolnar.com/