Gunnar König

@gunnark.bsky.social

500 followers

200 following

12 posts

PostDoc @ Uni Tübingen

explainable AI, causality

gunnarkoenig.com

Gunnar König @gunnark.bsky.social · Jul 8

Gunnar König @gunnark.bsky.social · Jul 7

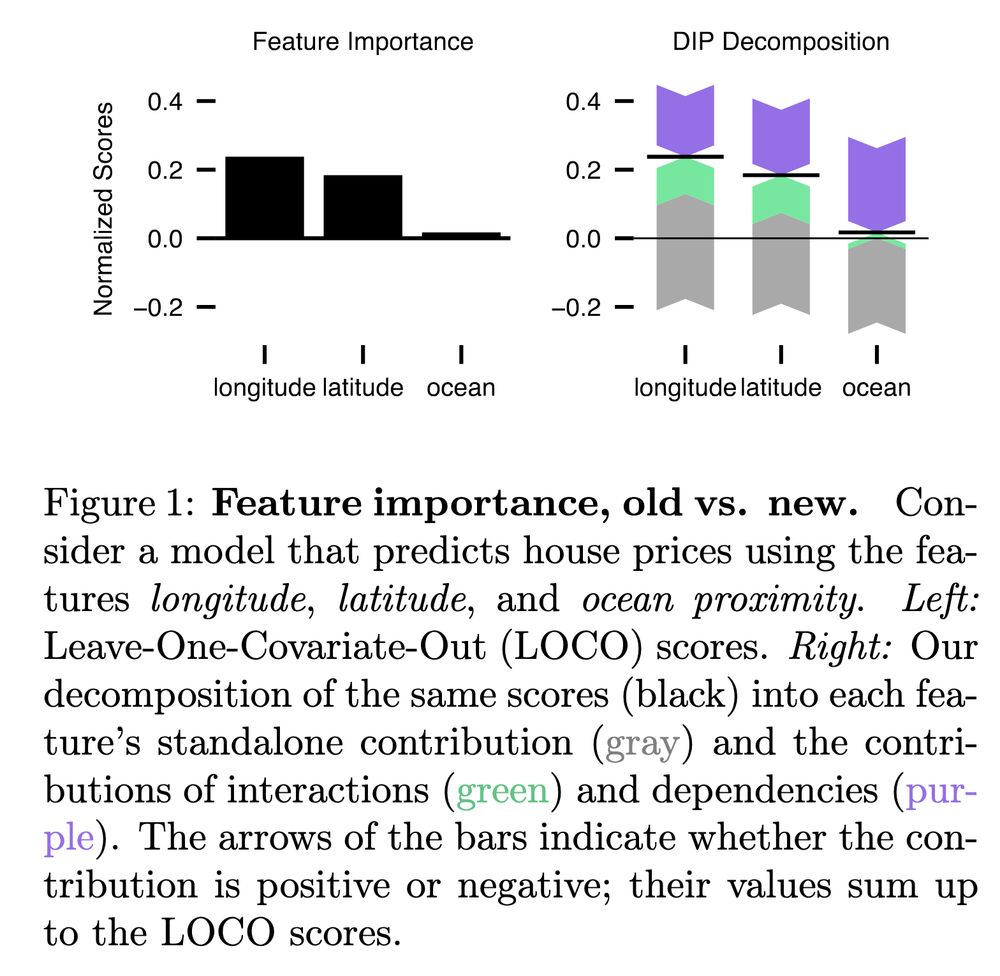

GitHub - gcskoenig/dipd: Code implementing the DIP Decomposition that disentangles standalone contributions and cooperative contributions stemming from interactions and dependencies in global loss-based feature attribution...

github.com

Gunnar König @gunnark.bsky.social · Jul 7

Gunnar König @gunnark.bsky.social · Jul 7

Gunnar König @gunnark.bsky.social · Jul 7

Gunnar König @gunnark.bsky.social · Jul 7

Reposted by Gunnar König

Tim van Erven @timvanerven.nl · May 6

Gunnar König @gunnark.bsky.social · Nov 27

Reposted by Gunnar König

Federico Adolfi @fedeadolfi.bsky.social · Nov 14