Sebastian Dziadzio

@dziadzio.bsky.social

610 followers · 890 following · 56 posts

ELLIS PhD student in machine learning at IMPRS-IS. Continual learning at scale.

sebastiandziadzio.com

Posts

Reposted by Sebastian Dziadzio

Sebastian Dziadzio (@dziadzio.bsky.social) · May 15

Sebastian Dziadzio (@dziadzio.bsky.social) · Feb 10

Sebastian Dziadzio (@dziadzio.bsky.social) · Jan 15

Sebastian Dziadzio (@dziadzio.bsky.social) · Dec 17

Reposted by Sebastian Dziadzio

Sebastian Dziadzio (@dziadzio.bsky.social) · Dec 11

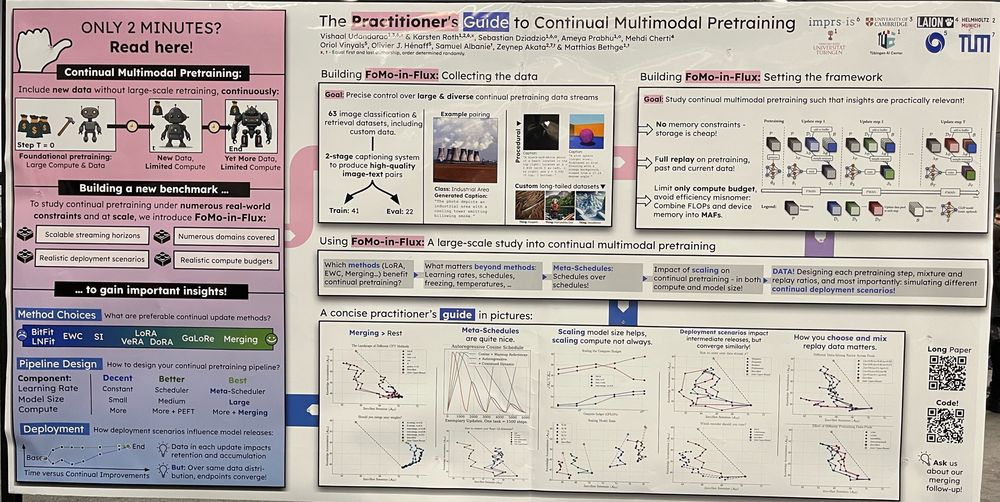

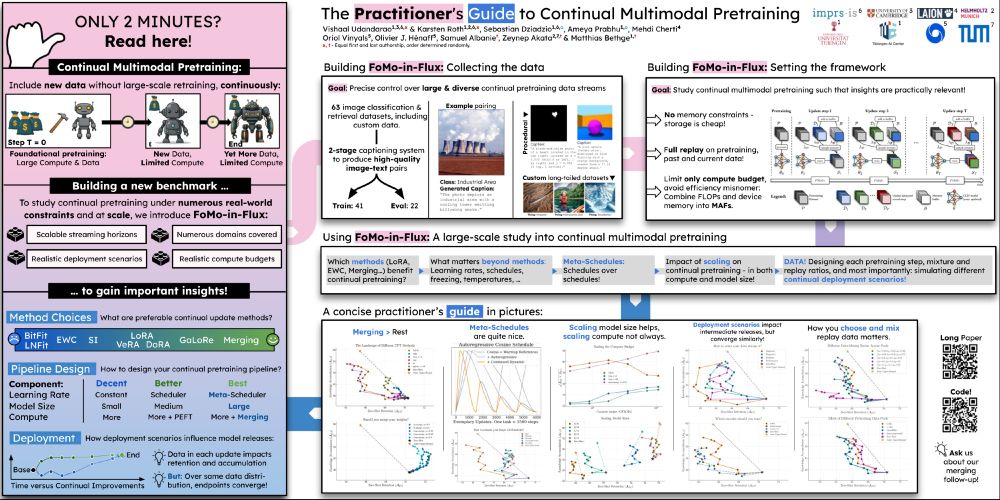

GitHub - ExplainableML/fomo_in_flux: Code and benchmark for the paper: "A Practitioner's Guide to Continual Multimodal Pretraining" [NeurIPS'24]


github.com

Sebastian Dziadzio (@dziadzio.bsky.social) · Dec 11

Sebastian Dziadzio (@dziadzio.bsky.social) · Dec 11

Sebastian Dziadzio (@dziadzio.bsky.social) · Dec 11

How to Merge Your Multimodal Models Over Time?

Model merging combines multiple expert models - finetuned from a base foundation model on diverse tasks and domains - into a single, more capable model. However, most existing model merging approaches...

arxiv.org
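The link card describes model merging in a single sentence. As a rough illustration, here is a minimal sketch of one common merging scheme, task arithmetic: each expert's difference from the shared base model (its "task vector") is averaged, scaled, and added back to the base weights. This is an assumed, swapped-in technique for illustration, not the method of the linked paper. The function name `merge_task_vectors`, the `scale` parameter, and the representation of a model as a dict of flat float lists are all hypothetical.

```python
from typing import Dict, List

# Hypothetical representation: a model is a dict mapping parameter names
# to flat lists of floats. Real models would use framework tensors.
Params = Dict[str, List[float]]


def merge_task_vectors(base: Params, experts: List[Params], scale: float = 1.0) -> Params:
    """Merge expert models finetuned from `base` via task arithmetic (a sketch).

    Each expert's task vector is (expert - base). The task vectors are
    averaged, multiplied by `scale`, and added back to the base weights.
    With scale=1.0 this reduces to plain parameter averaging of the experts.
    """
    if not experts:
        raise ValueError("need at least one expert model")
    n = len(experts)
    merged: Params = {}
    for name, base_values in base.items():
        merged_values = []
        for i, b in enumerate(base_values):
            # Average the per-expert deltas for this parameter entry.
            mean_delta = sum(e[name][i] - b for e in experts) / n
            merged_values.append(b + scale * mean_delta)
        merged[name] = merged_values
    return merged


if __name__ == "__main__":
    base = {"w": [0.0, 1.0]}
    expert_a = {"w": [1.0, 1.0]}  # hypothetical expert finetuned on task A
    expert_b = {"w": [0.0, 3.0]}  # hypothetical expert finetuned on task B
    print(merge_task_vectors(base, [expert_a, expert_b]))  # {'w': [0.5, 2.0]}
```

Working with deltas rather than raw weights makes the `scale` knob explicit, which lets a merge lean more or less on the experts. The linked paper is about merging models over time, which this one-shot sketch does not model.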

Reposted by Sebastian Dziadzio

Sebastian Dziadzio (@dziadzio.bsky.social) · Nov 30

Sebastian Dziadzio (@dziadzio.bsky.social) · Nov 30

Sebastian Dziadzio (@dziadzio.bsky.social) · Nov 30

Sebastian Dziadzio (@dziadzio.bsky.social) · Nov 29

Sebastian Dziadzio (@dziadzio.bsky.social) · Nov 29