Griffiths Computational Cognitive Science Lab

@cocoscilab.bsky.social

2.4K followers · 220 following · 10 posts

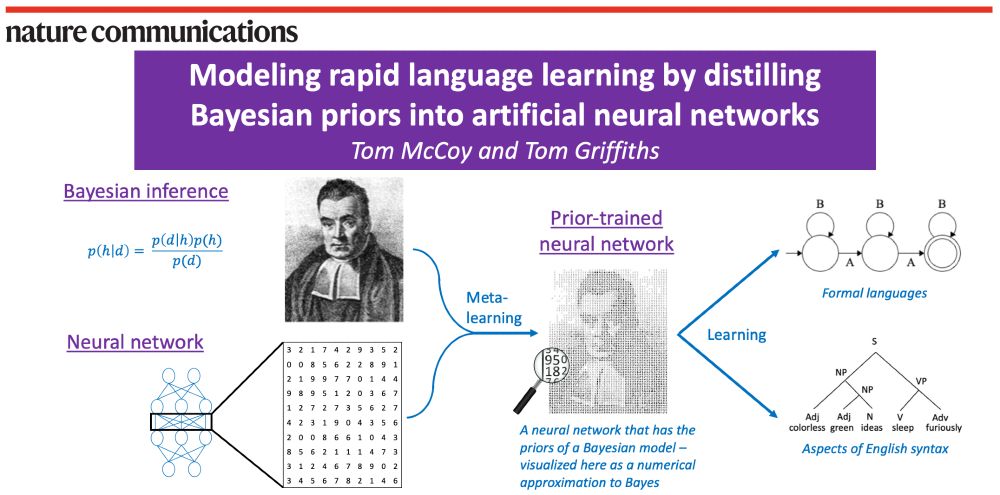

Tom Griffiths' Computational Cognitive Science Lab at Princeton. Studying the computational problems human minds have to solve.
