Wow

My money is on one of the AI ASIC startups making a programming language that sticks in the near future.

Thought-provoking idea: the most studied pure functions are ones that operate on numeric input and produce numeric output.

This is an incredibly small portion of all possible functions and operations that could be programmed.

People will certainly be affected, but only those made aware will voice their opinions.

www.niemanlab.org/2024/12/the-...

arcprize.org

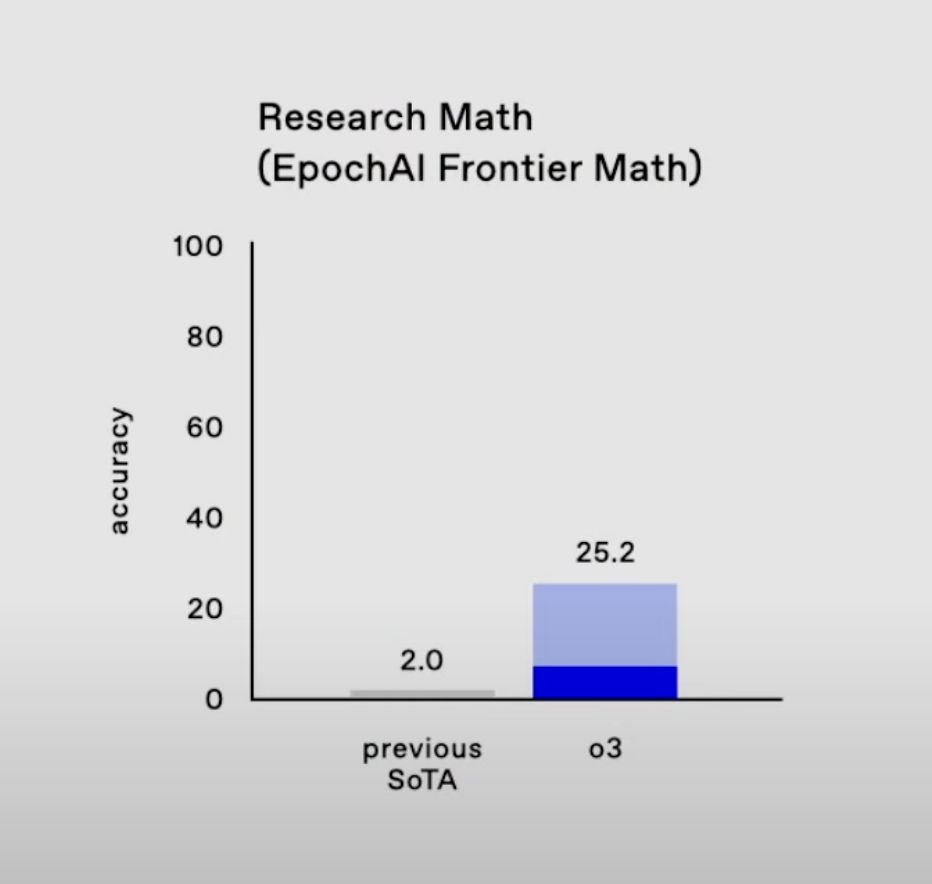

RIP to people who say any of "progress is done," "scale is done," or "LLMs can't reason"

2024 was awesome. I love my job.

Can neural networks learn the optimal strength of connections in order to improve performance?

Excellent question by ByteDance and even better answer in the paper:

arxiv.org/abs/2409.19606

A new large video foundation model with open-source weights, training code, and model code

github.com/Tencent/Huny...

github.com/Albiemc1303/...

thetourney.github.io/adia-report/

I can easily imagine a future where software 2.0 (model-based software) has its own frameworks, languages, and tooling, with pieces like these as the foundation.

github.com/facebookrese...

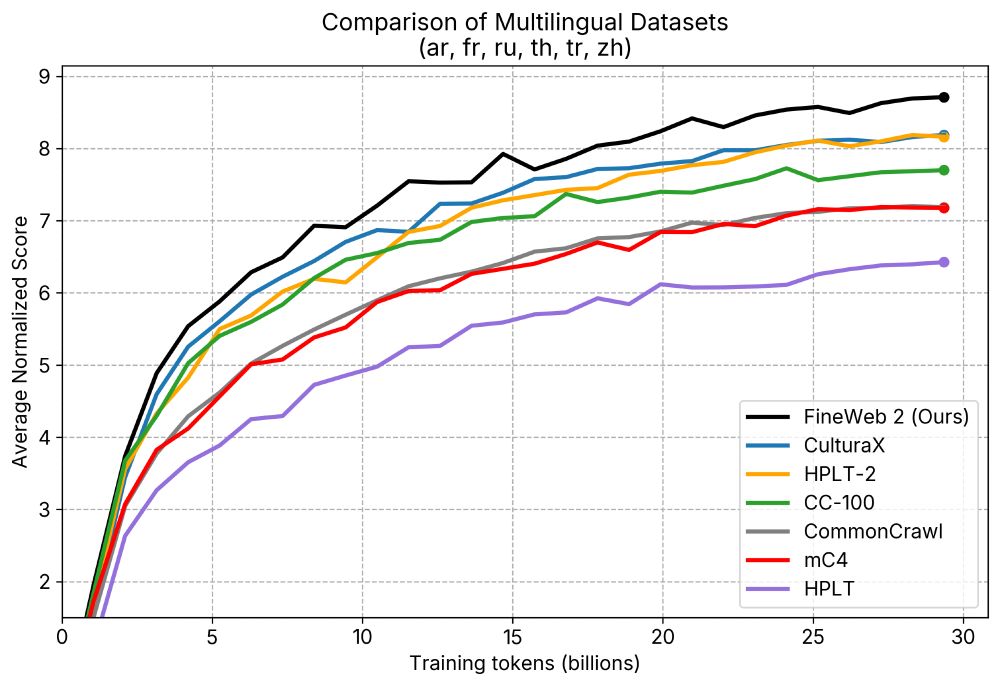

We applied the same data-driven approach that led to SOTA English performance in 🍷 FineWeb to thousands of languages.

🥂 FineWeb2 has 8TB of compressed text data and outperforms other datasets.

This paper gives us a benchmark to hill-climb on with agentic systems.

arxiv.org/abs/2411.15114
