danielsc4.it

📄Paper: arxiv.org/abs/2510.11170

💻Code: github.com/DanielSc4/EA...

✨Huge thanks to my mentors and collaborators @leozotos.bsky.social E. Fersini @malvinanissim.bsky.social A. Üstün

As M scales, EAGer consistently:

🚀 Achieves HIGHER Pass@k,

✂️ Uses FEWER tokens than baseline,

🕺 Shifts the Pareto frontier favorably across all tasks.

🧵5/
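For reference, Pass@k is commonly computed with the unbiased estimator popularized by the Codex paper: with n sampled sequences for a prompt, of which c are correct, pass@k = 1 − C(n−c, k) / C(n, k). Whether the EAGer evaluation uses exactly this estimator is an assumption here. When each prompt can receive a different number of sequences, k must not exceed the n of any prompt being scored. A minimal sketch:

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one prompt.

    n: number of sampled sequences for the prompt
    c: how many of those sequences are correct
    k: number of attempts being scored
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every size-k subset of the samples contains a correct one.
        return 1.0
    # 1 - P(a random size-k subset contains only incorrect samples)
    return 1.0 - comb(n - c, k) / comb(n, k)
```

Averaging `pass_at_k` over prompts gives the benchmark-level score.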

Full EAGer uses labels to catch failing prompts, then lowers their threshold so they branch more or get extra sequences. Great for verifiable pipelines!

🧵4/
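The post does not say exactly how the threshold is lowered, so the loop below is only a sketch under stated assumptions: `generate(theta, budget)` and `is_correct(output)` are hypothetical stand-ins for the decoder and the label check, and the multiplicative `theta_decay`, the fixed increment `extra_seqs`, and `max_rounds` are illustrative parameters, not values from the paper.

```python
from typing import Callable, List, Tuple


def label_guided_generate(
    generate: Callable[[float, int], List[str]],  # hypothetical decoder stub
    is_correct: Callable[[str], bool],            # hypothetical label check
    theta: float,
    budget: int,
    theta_decay: float = 0.5,  # hypothetical: how fast the threshold drops
    extra_seqs: int = 2,       # hypothetical: sequences added per retry
    max_rounds: int = 3,
) -> Tuple[List[str], float, int]:
    """Retry a failing prompt with a lower branching threshold and more sequences.

    Returns every output produced, the final threshold, and the final budget.
    """
    outputs: List[str] = []
    for round_idx in range(max_rounds):
        outputs.extend(generate(theta, budget))
        if any(is_correct(o) for o in outputs):
            break  # solved: stop spending compute on this prompt
        if round_idx < max_rounds - 1:
            theta *= theta_decay  # branch more eagerly on the next round
            budget += extra_seqs  # and allow more sequences
    return outputs, theta, budget
```

Prompts that are solved in the first round keep their original threshold and budget, so the extra compute goes only to prompts the labels flag as failing.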

We cap generation at M sequences per prompt, saving budget on easy prompts without regenerating. Training-free!

🧵3/
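Here is a toy sketch of capped branching. It assumes, as the name and the "predictable tokens" framing suggest, that a new sequence is forked when next-token entropy exceeds a threshold `theta`. The greedy continuation, the fork on the runner-up token, and the `next_token_dist` model stub are all hypothetical simplifications, not the released implementation. Forking copies the existing prefix, which is one way to read "without regen".

```python
import math
from typing import Callable, Dict, List, Tuple

Dist = Dict[str, float]  # next-token distribution: token -> probability


def entropy(dist: Dist) -> float:
    """Shannon entropy (in nats) of a next-token distribution."""
    return -sum(p * math.log(p) for p in dist.values() if p > 0)


def branch_on_entropy(
    next_token_dist: Callable[[Tuple[str, ...]], Dist],  # hypothetical model stub
    max_len: int,
    theta: float,  # hypothetical entropy threshold for forking
    M: int,        # cap on sequences per prompt
) -> List[Tuple[str, ...]]:
    """Greedy decoding that forks a new sequence at high-entropy steps.

    A fork reuses the current prefix and continues it with the runner-up
    token, so the shared prefix is never regenerated. No new forks are
    made once M sequences exist for the prompt.
    """
    finished: List[Tuple[str, ...]] = []
    frontier: List[Tuple[str, ...]] = [()]  # prefixes still being decoded
    n_seqs = 1
    while frontier:
        prefix = frontier.pop()
        while len(prefix) < max_len and (not prefix or prefix[-1] != "<eos>"):
            dist = next_token_dist(prefix)
            ranked = sorted(dist, key=dist.get, reverse=True)
            if entropy(dist) > theta and n_seqs < M and len(ranked) > 1:
                frontier.append(prefix + (ranked[1],))  # fork on runner-up token
                n_seqs += 1
            prefix = prefix + (ranked[0],)  # greedy continuation
        finished.append(prefix)
    return finished
```

An easy prompt whose distributions are near-deterministic never forks, so it uses a single sequence even when M is larger; that unused budget is what could go to harder prompts.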

It wastes compute on redundant, predictable tokens, especially for easy prompts. Hard prompts need more exploration but get the same budget. Enter EAGer🧠!

🧵2/

🔗 Code: github.com/DanielSc4/st...

Thanks to my amazing co-authors:

@gsarti.com , @arianna-bis.bsky.social , Elisabetta Fersini, @malvinanissim.bsky.social

7/7

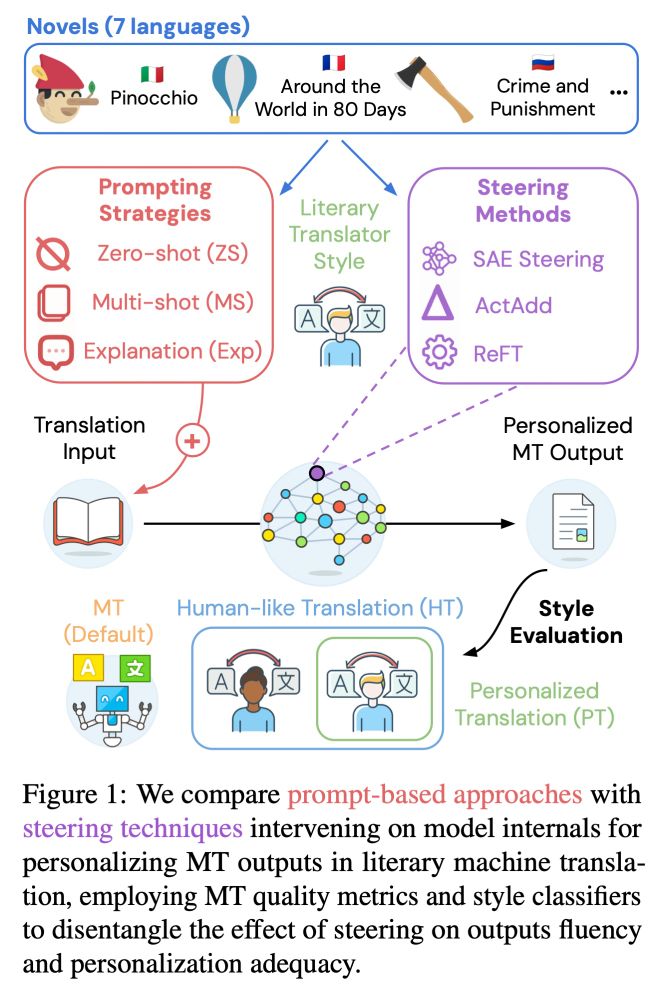

We find that SAE steering and multi-shot prompting affect internal representations similarly, suggesting that insights from user examples can be summarized with extra interpretability potential (look at the latents) and better efficiency (no long context) 6/
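One simple way to quantify "affect representations similarly" is to compare how each method shifts the mean hidden state relative to zero-shot prompting, using cosine similarity. This is an assumed measure for illustration; the paper's actual comparison may differ, and the inputs are hypothetical lists of per-example activation vectors from one layer.

```python
import math
from typing import List

Vec = List[float]


def mean_vec(rows: List[Vec]) -> Vec:
    """Element-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def cosine(a: Vec, b: Vec) -> float:
    """Cosine similarity; raises if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        raise ValueError("cosine is undefined for a zero vector")
    return dot / (na * nb)


def shift_similarity(zero_shot: List[Vec], steered: List[Vec], multi_shot: List[Vec]) -> float:
    """Cosine between the mean shifts induced by steering and by multi-shot prompting."""
    base = mean_vec(zero_shot)
    d_steer = [s - b for s, b in zip(mean_vec(steered), base)]
    d_shot = [m - b for m, b in zip(mean_vec(multi_shot), base)]
    return cosine(d_steer, d_shot)
```

A value near 1 means both interventions push activations in the same direction; near 0 means unrelated shifts.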

Following SpARE (@yuzhaouoe.bsky.social @alessiodevoto.bsky.social), we propose ✨ contrastive SAE steering ✨ with mutual info to personalize literary MT by tuning latent features 4/
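A minimal sketch of the idea, under assumptions: each SAE latent is binarized as active/inactive, scored by its mutual information with a binary style label (target style vs. contrast set), and the top-k latents' decoder directions are added with a contrastive sign (+ if more active in the target style, − otherwise). The function names, `top_k`, `alpha`, and the binarization rule are hypothetical, not the paper's exact recipe.

```python
import math
from typing import List, Sequence


def mutual_information(active: Sequence[bool], labels: Sequence[bool]) -> float:
    """MI (in nats) between a binarized latent and a binary style label."""
    n = len(labels)
    mi = 0.0
    for x in (False, True):
        for y in (False, True):
            joint = sum(1 for a, l in zip(active, labels) if a == x and l == y) / n
            if joint == 0:
                continue
            px = sum(1 for a in active if a == x) / n
            py = sum(1 for l in labels if l == y) / n
            mi += joint * math.log(joint / (px * py))
    return mi


def contrastive_steering_vector(
    acts: List[List[float]],     # [example][latent] SAE activations
    labels: List[bool],          # True = target style
    decoder: List[List[float]],  # [latent][hidden] SAE decoder directions
    top_k: int,                  # hypothetical: number of latents to use
    alpha: float,                # hypothetical: steering strength
) -> List[float]:
    """Sum of signed decoder directions for the top-k latents by MI."""
    if all(labels) or not any(labels):
        raise ValueError("need examples of both the target and contrast styles")
    scores = []
    for j in range(len(decoder)):
        active = [row[j] > 0 for row in acts]
        scores.append((mutual_information(active, labels), j))
    chosen = [j for _, j in sorted(scores, reverse=True)[:top_k]]
    vec = [0.0] * len(decoder[0])
    for j in chosen:
        pos = [row[j] for row, l in zip(acts, labels) if l]
        neg = [row[j] for row, l in zip(acts, labels) if not l]
        # Contrastive sign: amplify target-style latents, suppress contrast ones.
        sign = 1.0 if sum(pos) / len(pos) >= sum(neg) / len(neg) else -1.0
        for h, d in enumerate(decoder[j]):
            vec[h] += sign * alpha * d
    return vec
```

At inference time the vector would be added to the hidden state at the SAE's layer, e.g. `[h + v for h, v in zip(hidden, vec)]`.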

✓ Classifiers can identify styles with high accuracy (humans kinda don’t)

✓ Multi-shot prompting boosts style a lot

✓ We can detect strong style traces in activations (esp. mid layers) 3/
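Detecting style traces per layer is usually done with a probe trained on activations. The sketch below swaps in a simple nearest-centroid probe scored by leave-one-out accuracy (not necessarily the classifier used in the paper) and picks the layer where style is most decodable; `acts_by_layer` is a hypothetical mapping from layer index to per-example activation vectors.

```python
from typing import Dict, List, Tuple

Vec = List[float]


def centroid(vectors: List[Vec]) -> Vec:
    """Element-wise mean of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def sq_dist(a: Vec, b: Vec) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def loo_probe_accuracy(feats: List[Vec], labels: List[str]) -> float:
    """Leave-one-out accuracy of a nearest-centroid style probe."""
    correct = 0
    for i, (x, y) in enumerate(zip(feats, labels)):
        train = [(f, l) for j, (f, l) in enumerate(zip(feats, labels)) if j != i]
        classes = sorted({l for _, l in train})
        cents = {c: centroid([f for f, l in train if l == c]) for c in classes}
        pred = min(classes, key=lambda c: sq_dist(x, cents[c]))
        correct += pred == y
    return correct / len(feats)


def best_layer(acts_by_layer: Dict[int, List[Vec]], labels: List[str]) -> Tuple[int, Dict[int, float]]:
    """Return the layer with the highest probe accuracy, plus all scores."""
    scores = {layer: loo_probe_accuracy(f, labels) for layer, f in acts_by_layer.items()}
    return max(scores, key=scores.get), scores
```

Plotting `scores` across layers is a quick way to see where style information peaks.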

Can we personalize an LLM’s MT when only a few examples are available, without further tuning? 🔍 2/