David Haydock

@davidghaydock.bsky.social

42 followers

220 following

20 posts

Post-doc doing Neuroimaging @ucl.ac.uk

Interested in Neurophenomenology, and how we can develop analysis methods that benefit it

https://linktr.ee/davidghaydock

David Haydock

@davidghaydock.bsky.social

· Mar 20

Rory Cooper

@rorylcooper.bsky.social

· Mar 20

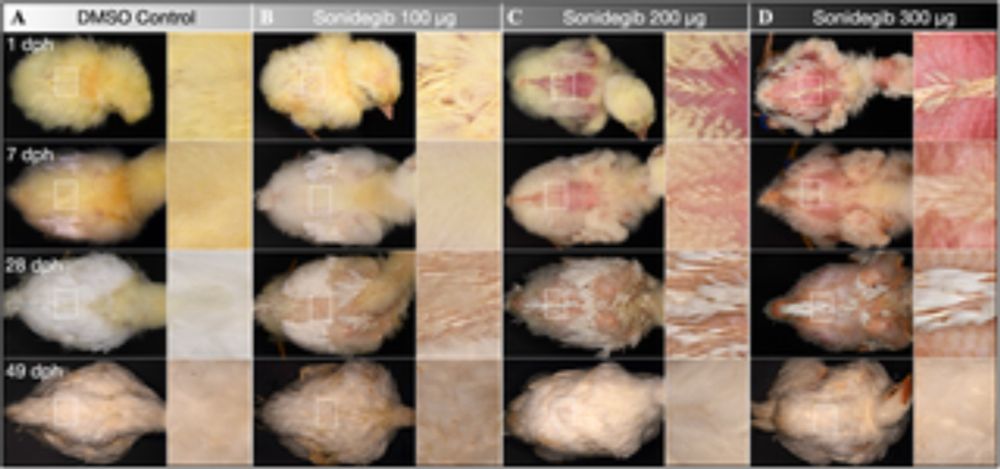

In vivo sonic hedgehog pathway antagonism temporarily results in ancestral proto-feather-like structures in the chicken

The Sonic Hedgehog (Shh) pathway is a key regulator of feather development. These authors show that in vivo Shh inhibition during early chicken embryogenesis temporarily results in unbranched and non-...

journals.plos.org

Reposted by David Haydock

David Haydock

@davidghaydock.bsky.social

· Feb 21

EEG microstate syntax analysis: A review of methodological challenges and advances

Electroencephalography (EEG) microstates are “quasi-stable” periods of electrical potential distribution in multichannel EEG derived from peaks in Glo…

www.sciencedirect.com

David Haydock

@davidghaydock.bsky.social

· Dec 26

David Haydock

@davidghaydock.bsky.social

· Dec 15
