dylan

@dylnbkr.bsky.social

530 followers

340 following

170 posts

Lead research engineer @dairinstitute.bsky.social, social dancer, aspirational post-apocalyptic gardener 🏳️‍🌈😷

I run workshops @dairfutures.bsky.social. Always imagining otherwise.

dylanbaker.com

they/he


dylan

@dylnbkr.bsky.social

· 15h


Reposted by dylan

Bookshop.org

@bookshop.org

· 19d

dylan

@dylnbkr.bsky.social

· 22d

dylan

@dylnbkr.bsky.social

· 27d

Reposted by dylan

Anthony Moser

@anthonymoser.com

· Sep 8

dylan

@dylnbkr.bsky.social

· 28d

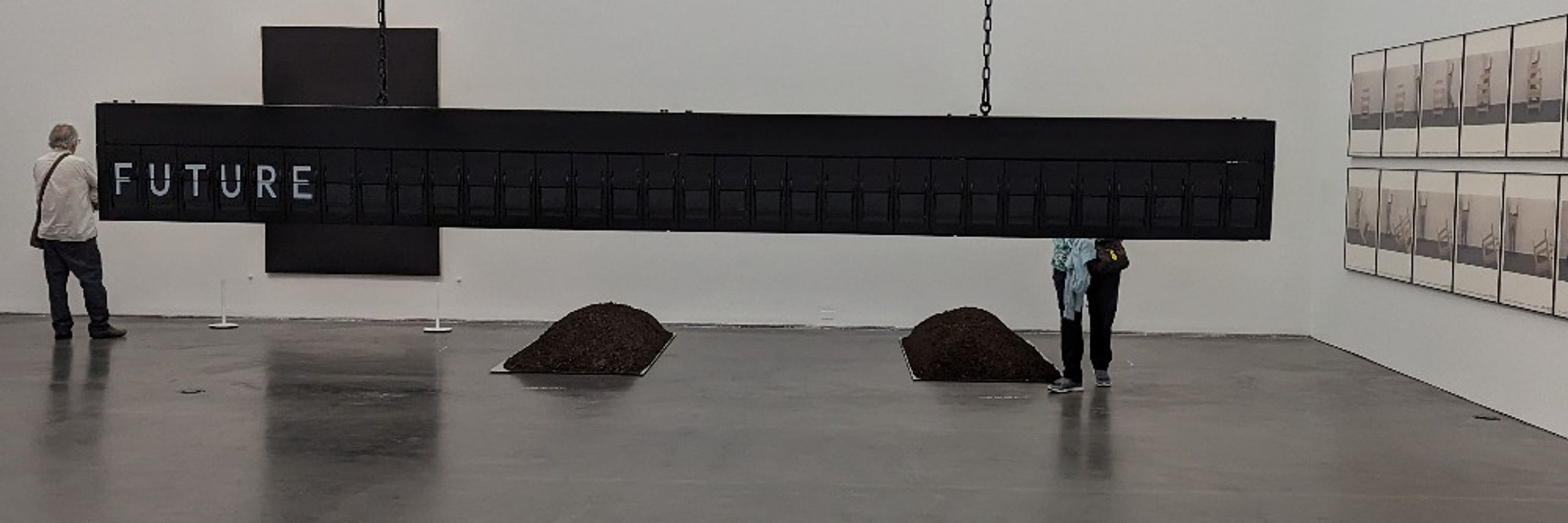

![A screenshot from the Verge article. It reads: [Block quote]: X doesn’t currently support the standard, but Elon Musk has previously said the platform “should probably do it”. [Main text]: A cornerstone of this plan involves getting online platforms to adopt the standard. X, which has attracted regulatory scrutiny as a hotbed for spreading misinformation, isn’t a member of the C2PA initiative and seemingly offers no alternative. But X owner Elon Musk does appear willing to get behind it. [Highlighted portion]: “That sounds like a good idea, we should probably do it,” Musk said when pitched by Parsons at the 2023 AI Safety Summit. “Some way of authenticating would be good.”](https://cdn.bsky.app/img/feed_thumbnail/plain/did:plc:k6j2f6adzynuvph7wqvffup6/bafkreiazfiulbwvxcjbqot426rjjfbk76tl7pguuxq6plcd5b4ekplrruu@jpeg)